Subject: Reverse-engineering an airspeed/Mach indicator from 1977

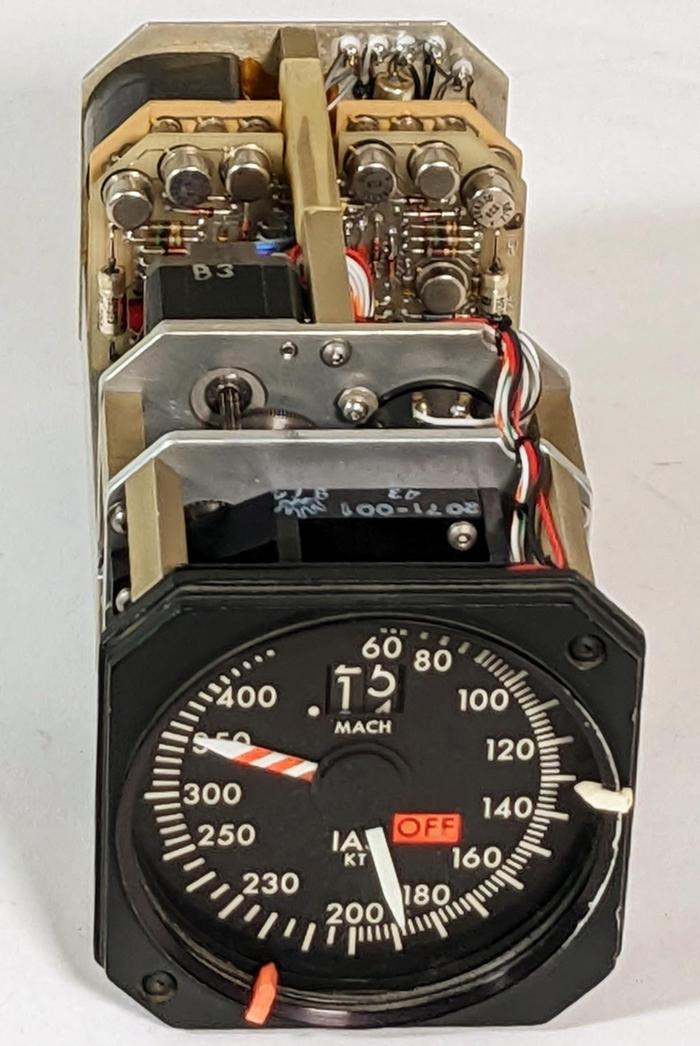

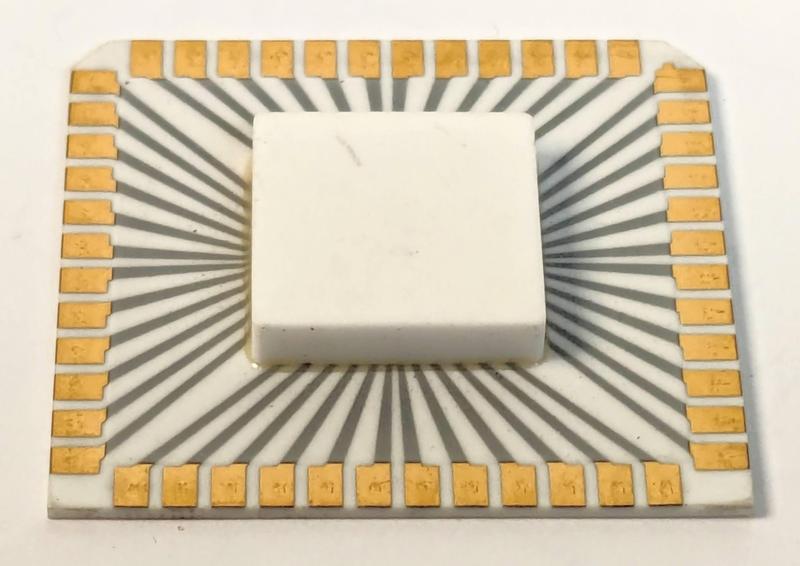

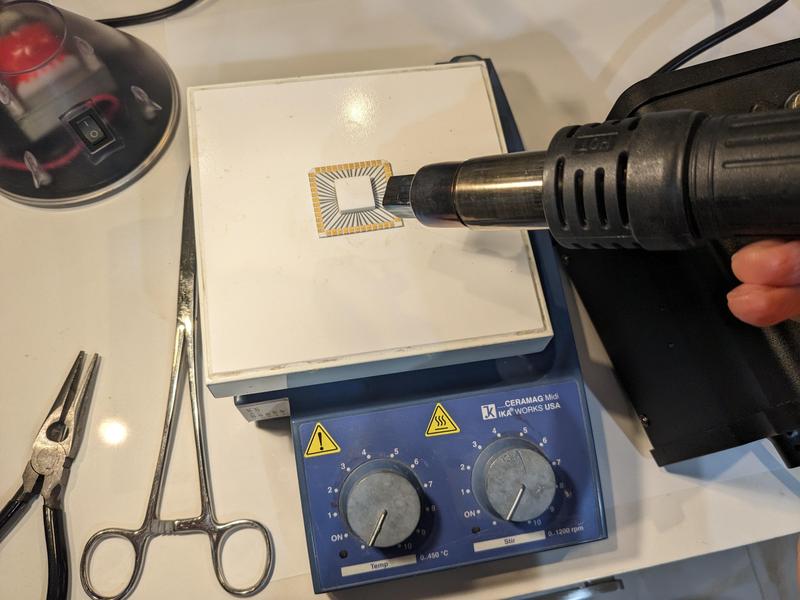

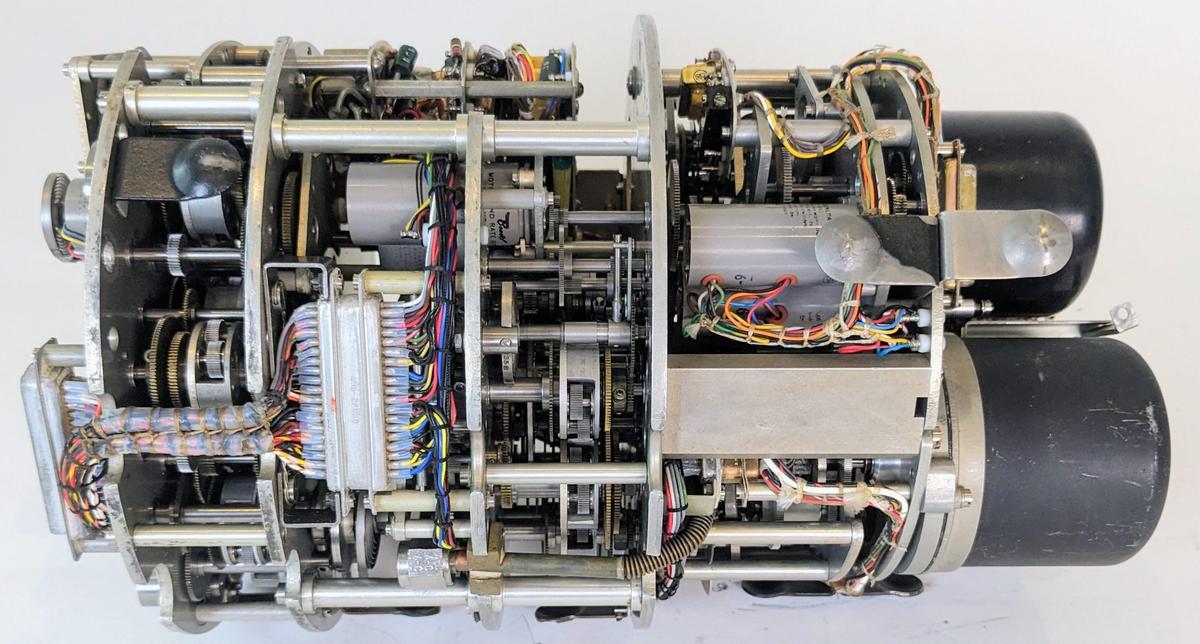

How does a vintage airspeed indicator work? CuriousMarc picked one up for a project, but it didn't have any documentation, so I reverse-engineered it. This indicator was used in the cockpit panel for business jets such as the Gulfstream G-III, Cessna Citation, and Bombardier Challenger CL600. It was probably manufactured in 1977 based on the dates on its transistors.

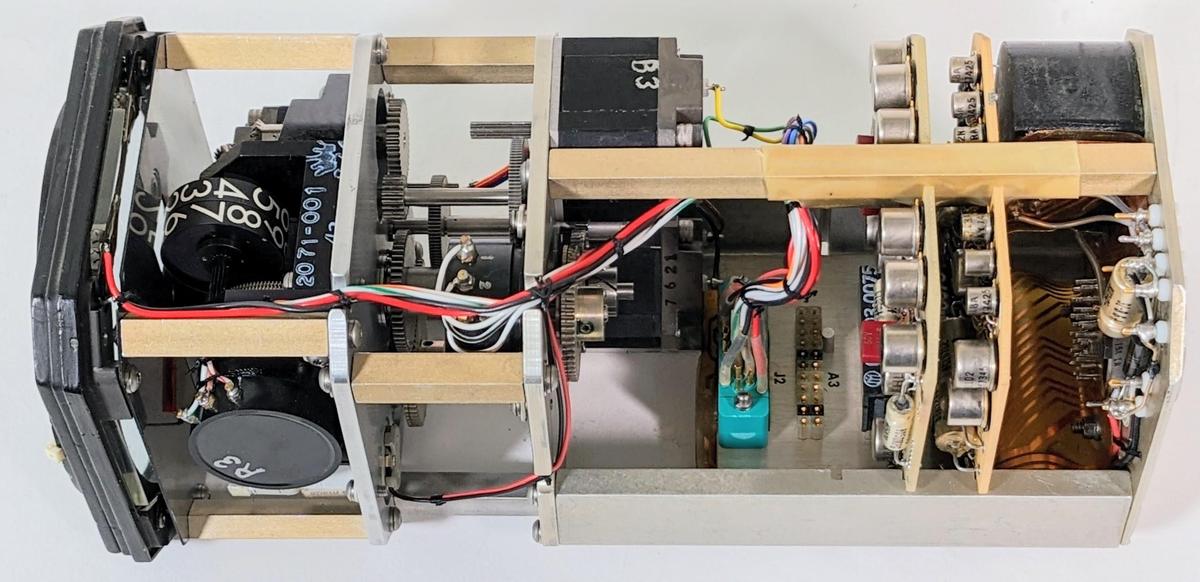

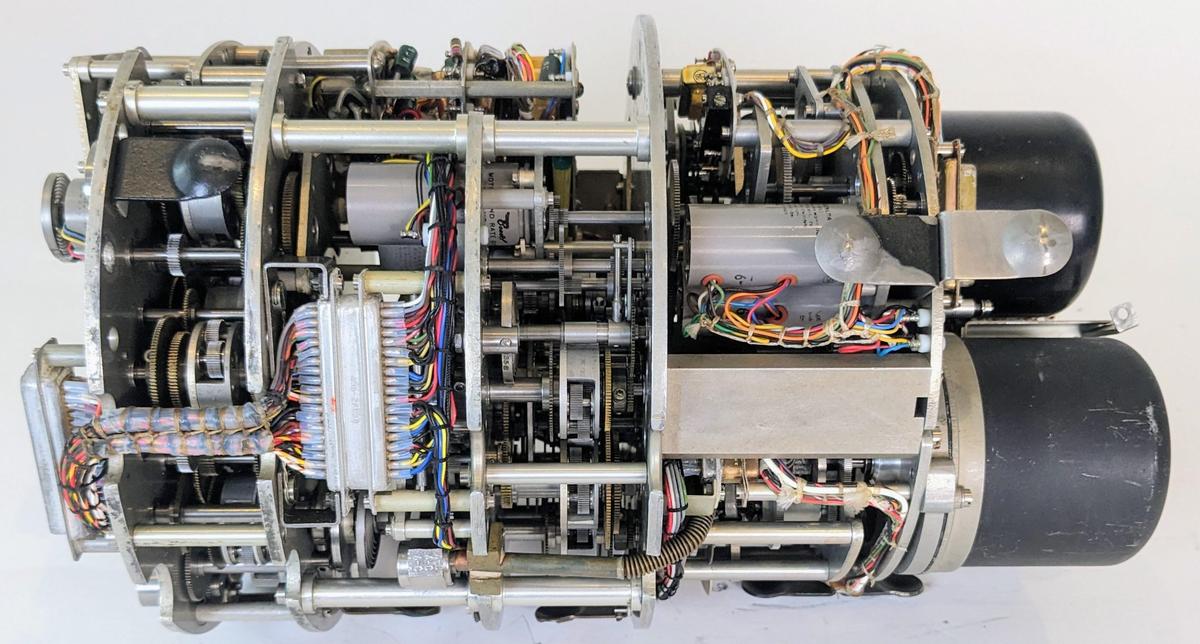

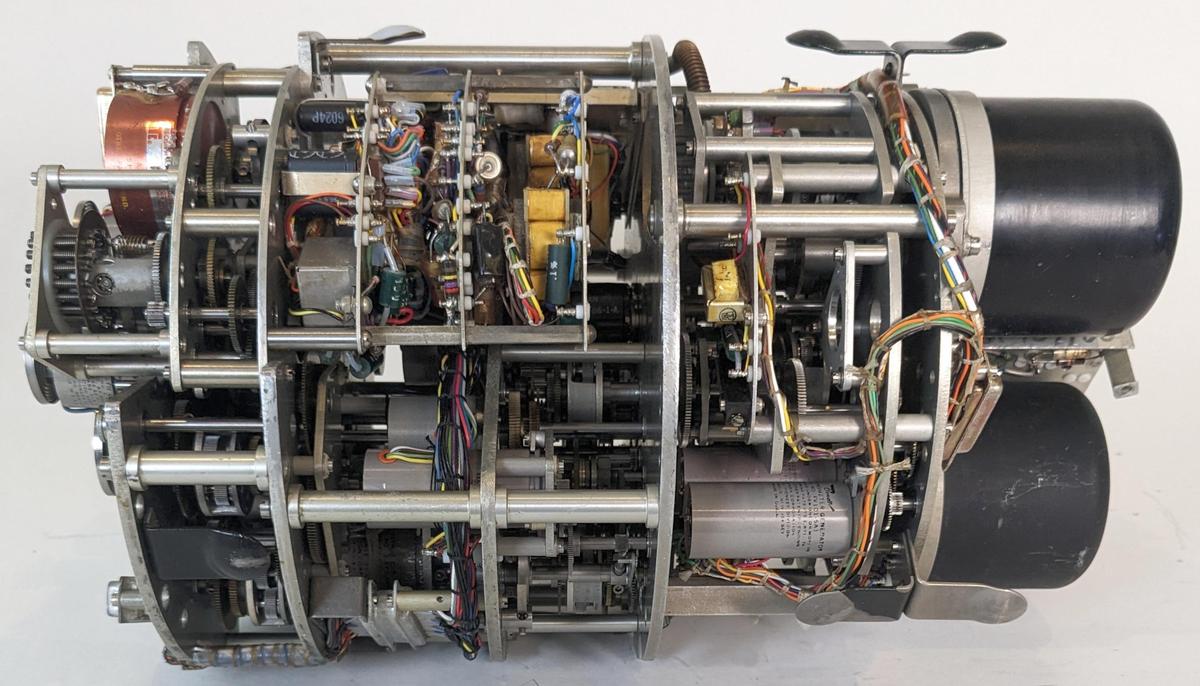

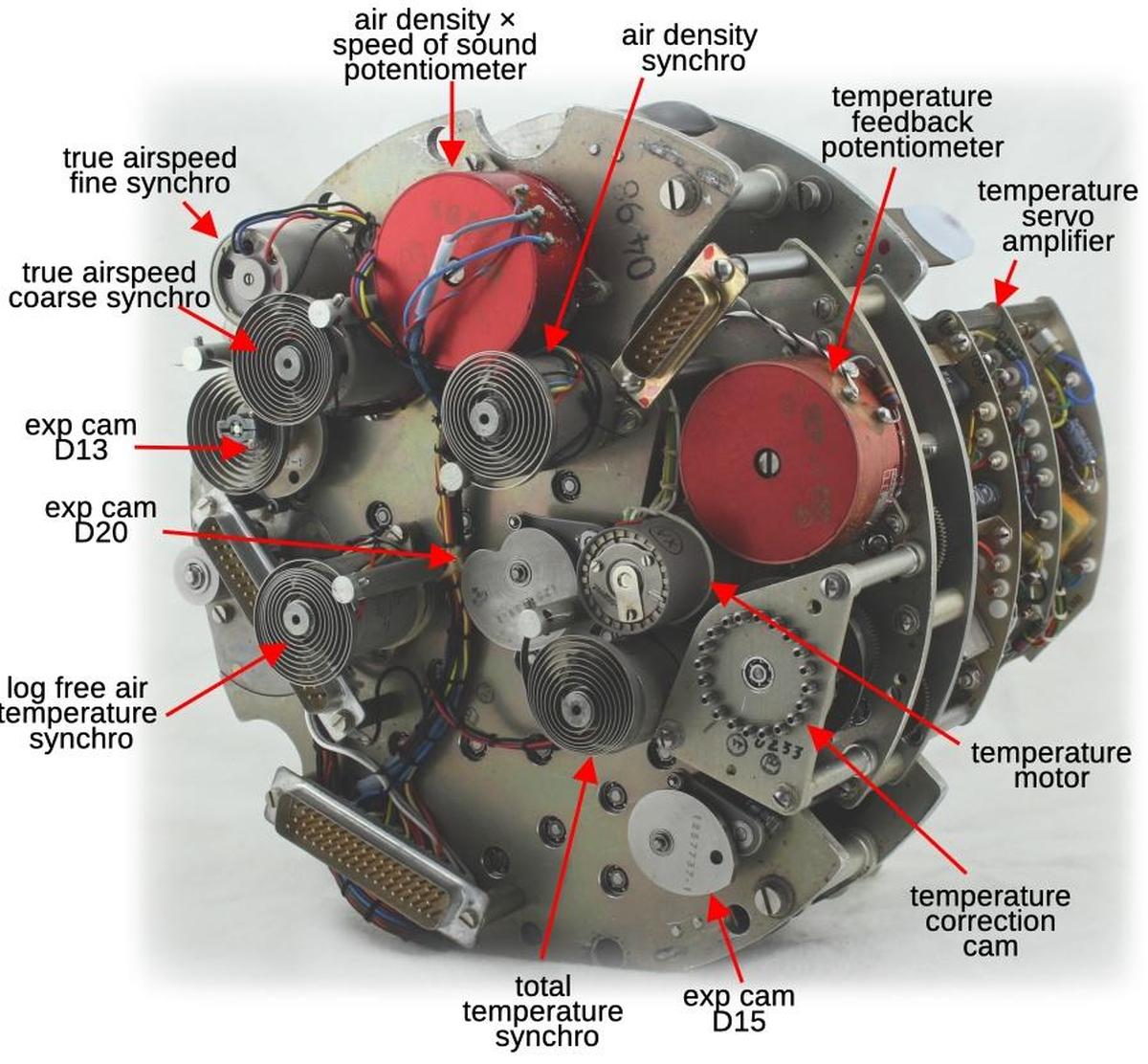

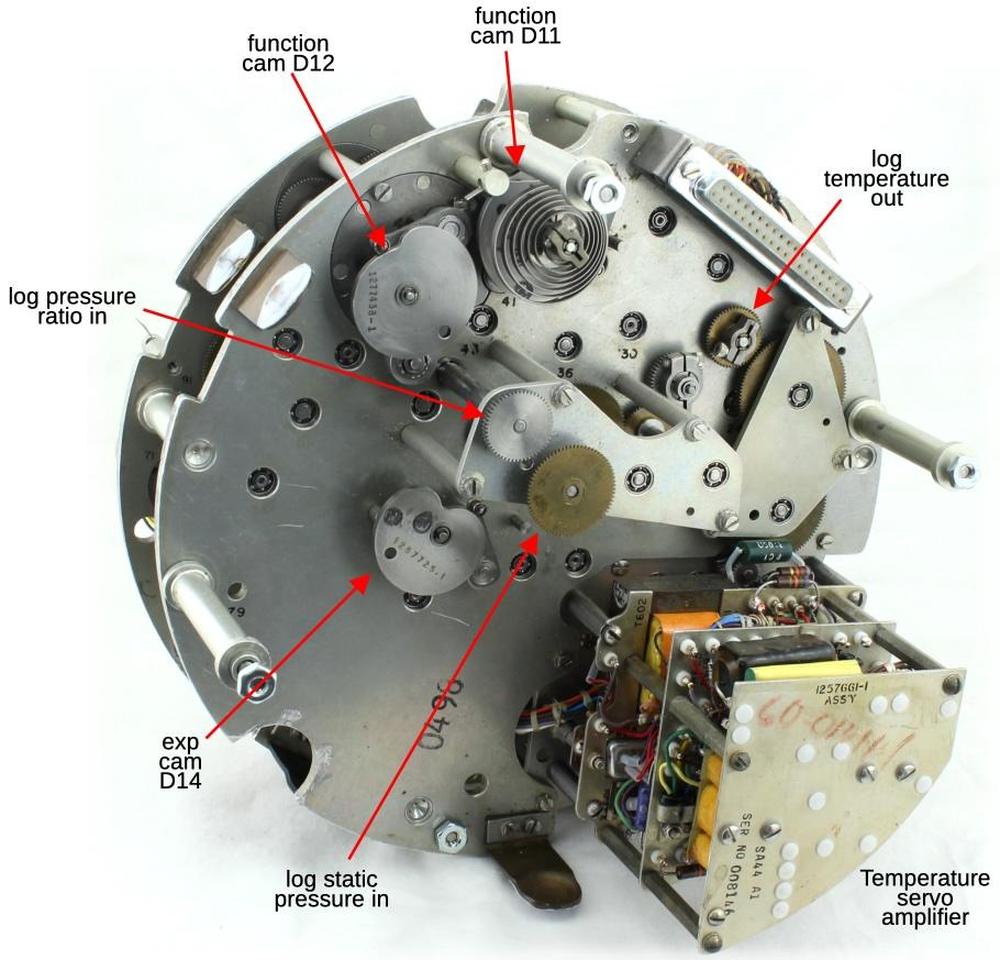

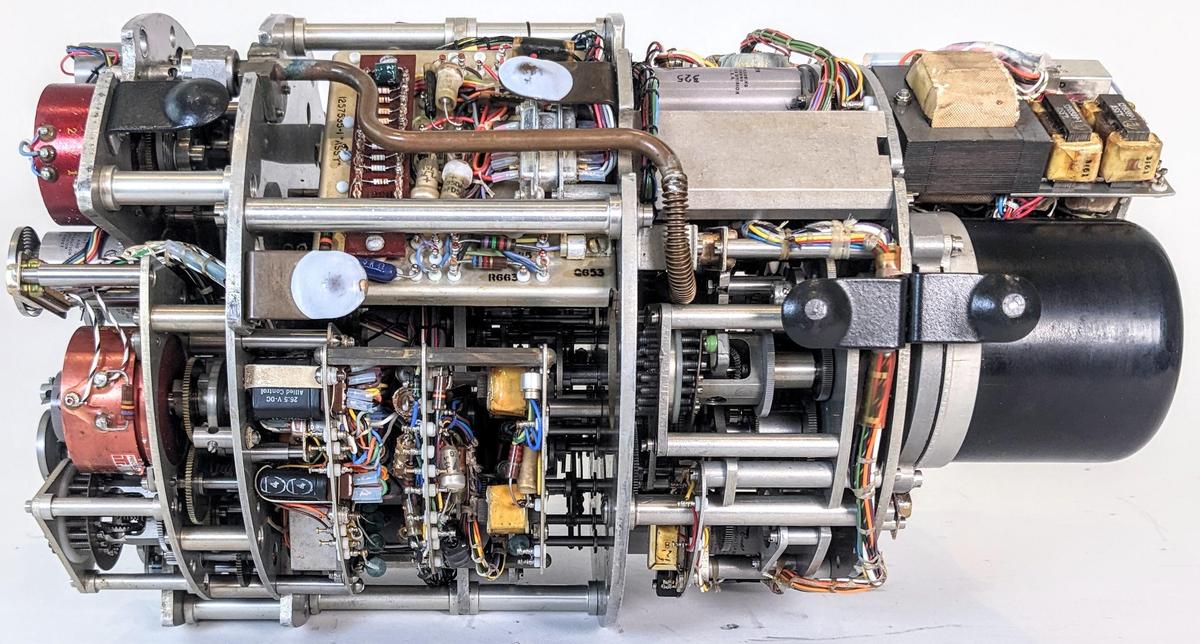

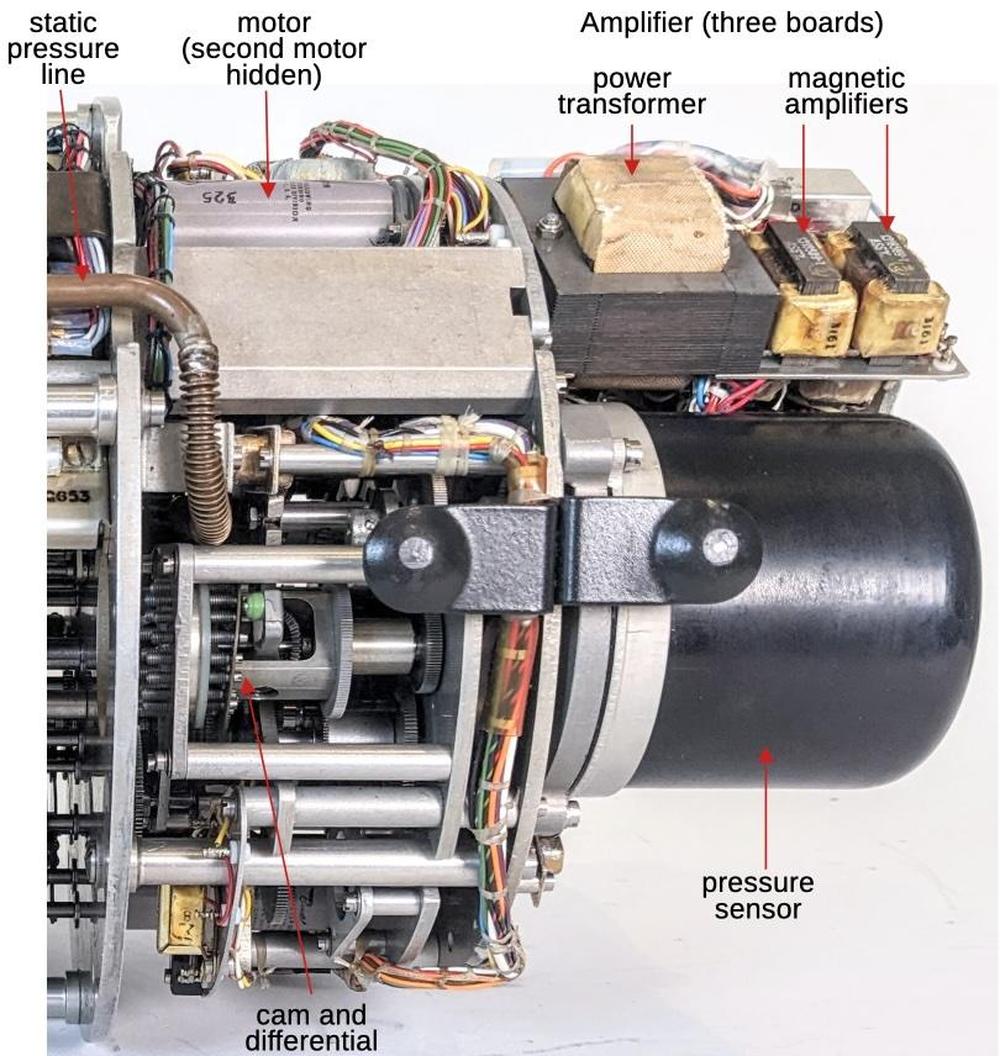

You might expect that the indicators on an aircraft control panel are simple dials. But behind this dial is a large, 2.8-pound box with a complex system of motors, gears, and feedback potentiometers, controlled by two boards of electronics. For all this complexity, though, the indicator doesn't have any smarts: the pointers just indicate voltages fed into it from an air data computer. This is a quick blog post to summarize what I found.

The dial has two rotating pointers: the white pointer indicates airspeed in knots while the striped pointer indicates the maximum airspeed (which varies depending on altitude). The "digital" indicator at the top shows Mach number from 0.10 to 0.99, implemented with rotating digit wheels. When the unit is operating, the OFF indicator flag switches to black. The flag switches to a bright VMO warning if the pilot exceeds the maximum airspeed.1 On the rim of the dial, two small markers called "bugs" can be manually moved to indicate critical speeds such as takeoff speed.

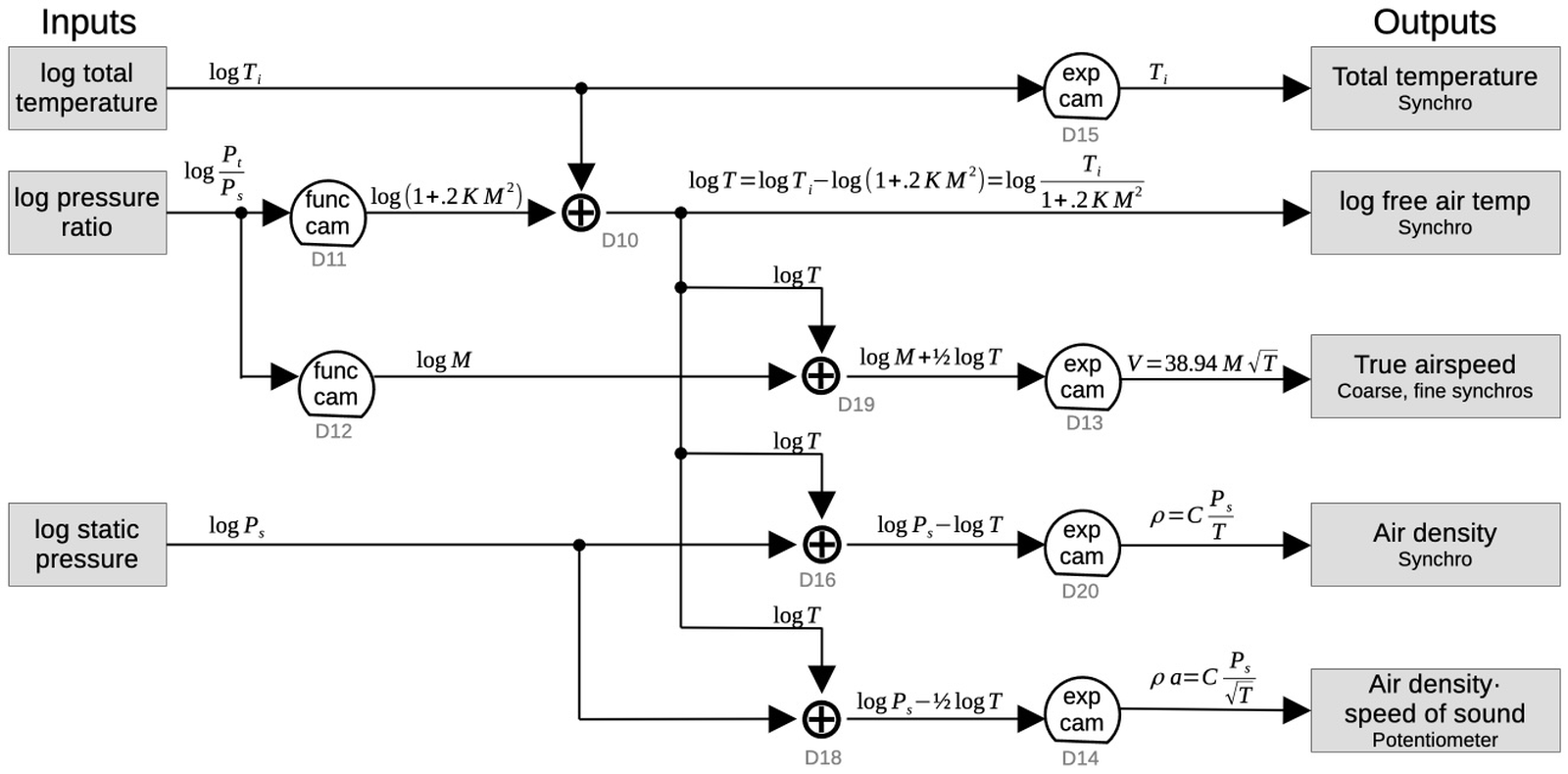

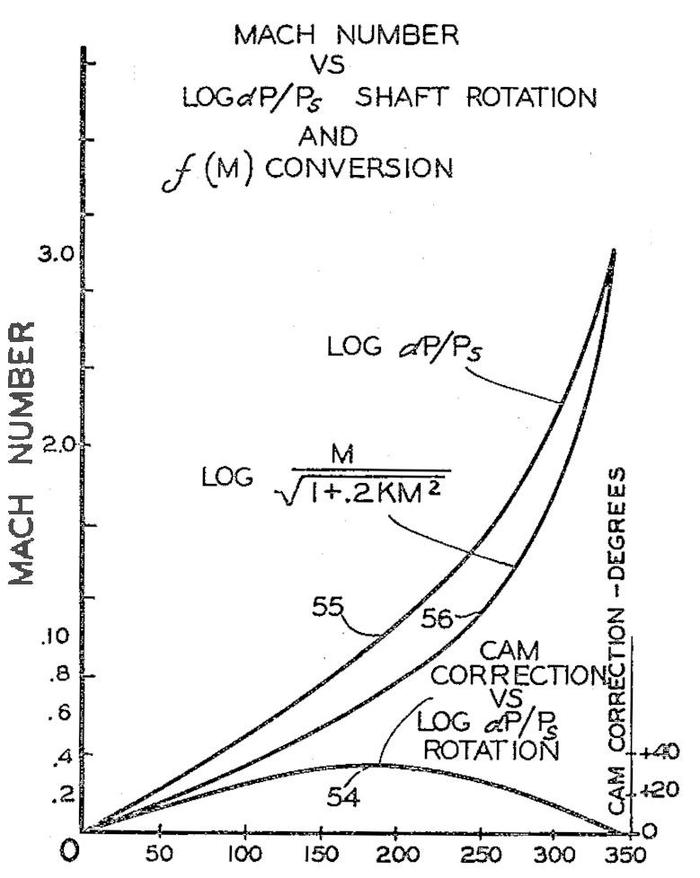

In use, the indicator is connected to a Sperry air data computer and receives voltage signals to control the dial positions.3 The air data computer measures the static and dynamic air pressure from pitot tubes and determines the airspeed, Mach number, altitude, and other parameters. (These calculations become nontrivial near Mach 1 as air compresses and the fluid dynamics change.) Since we didn't have the air data computer or its specifications, I needed to figure out the connections from the computer to the display.

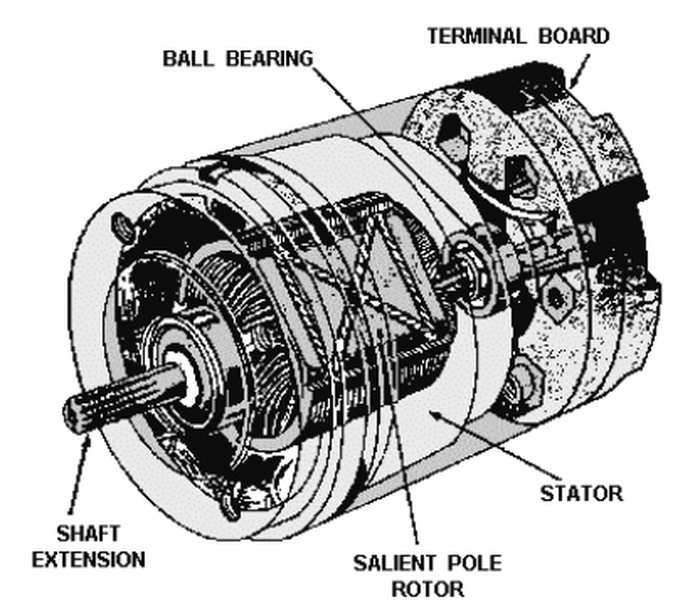

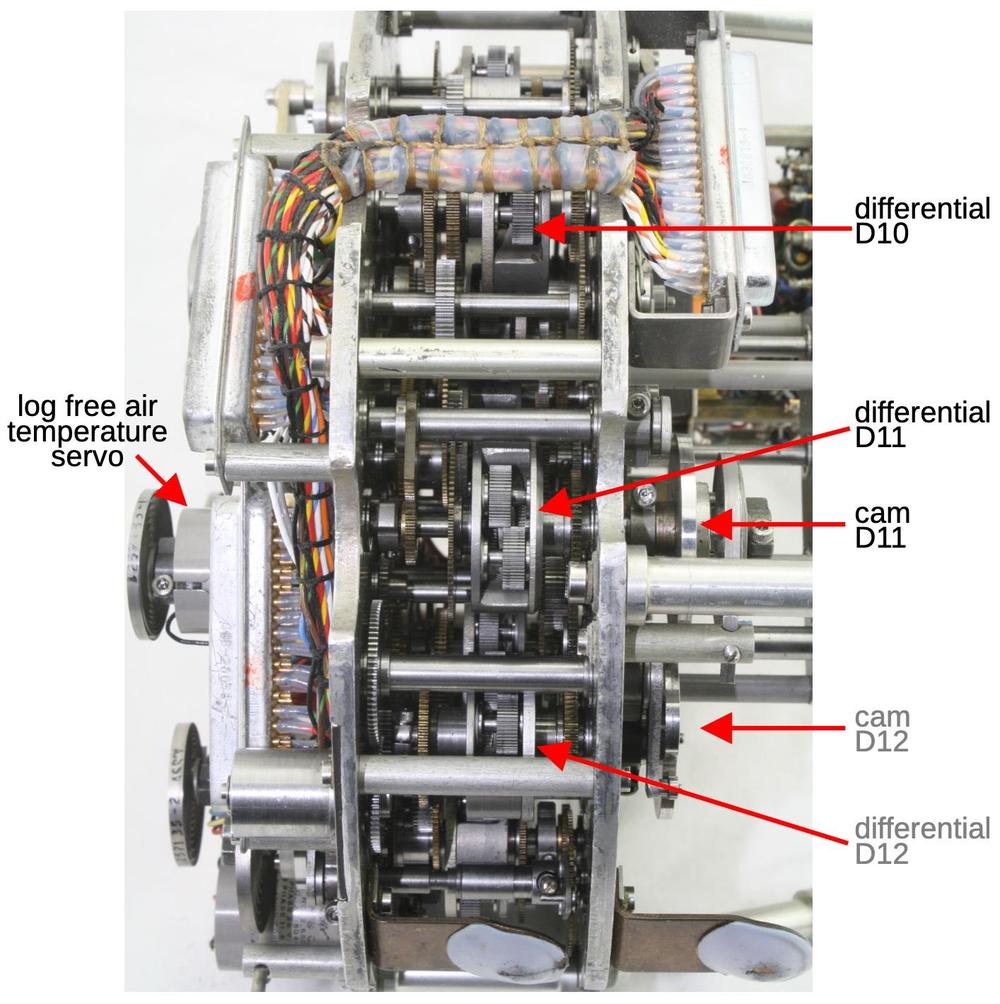

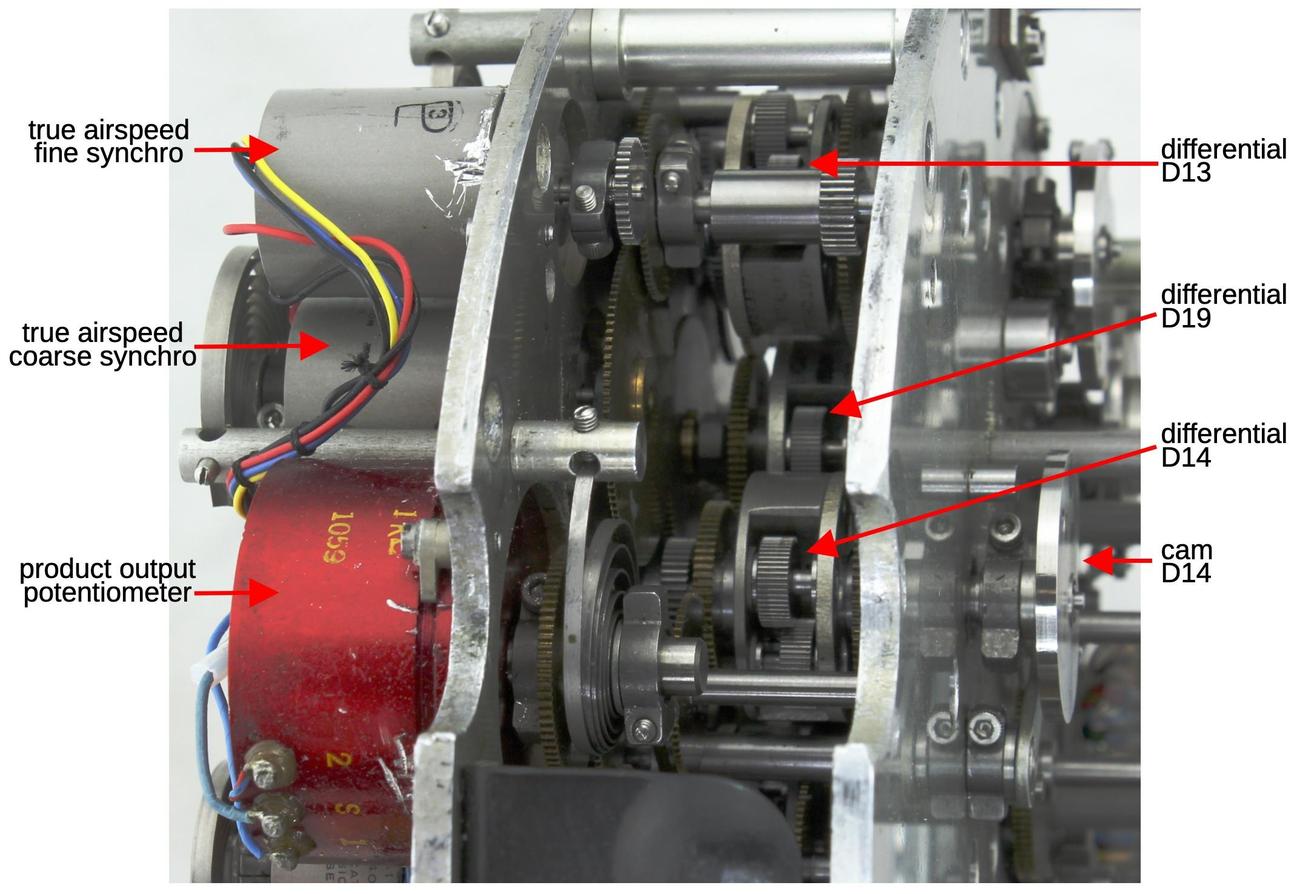

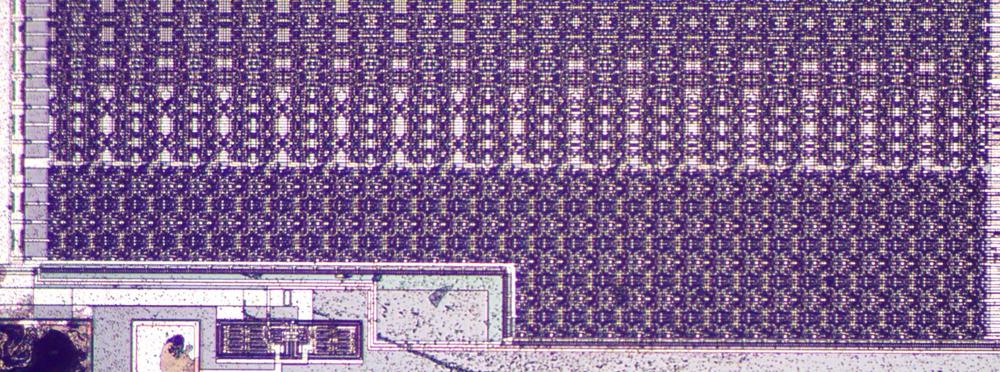

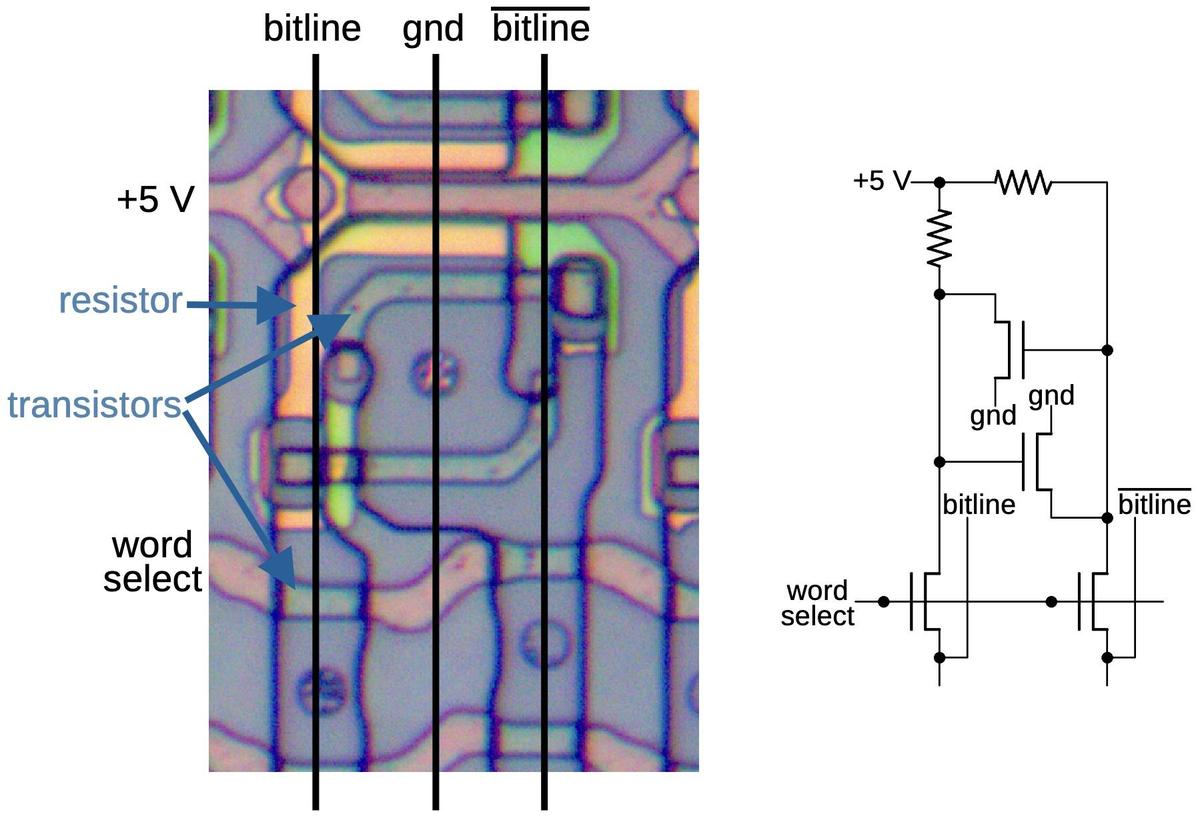

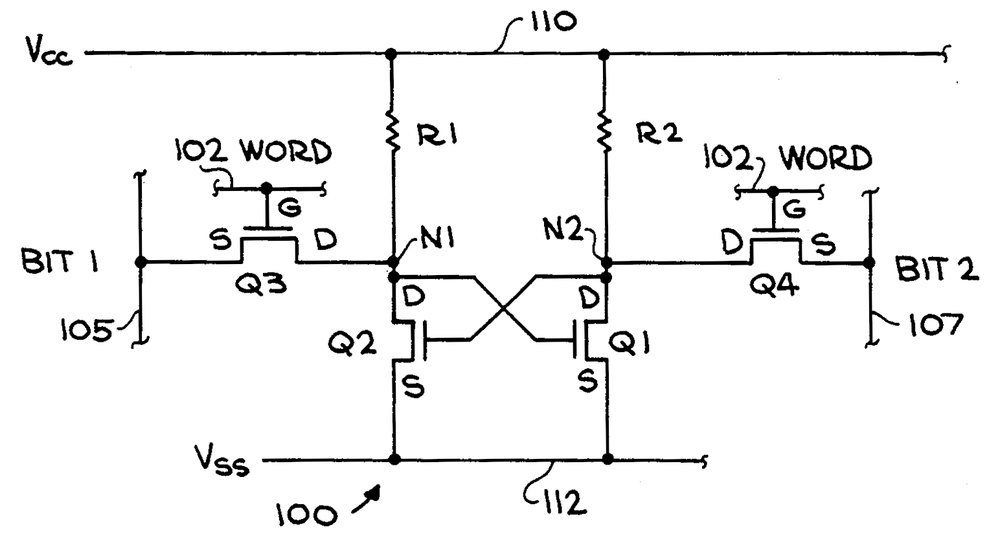

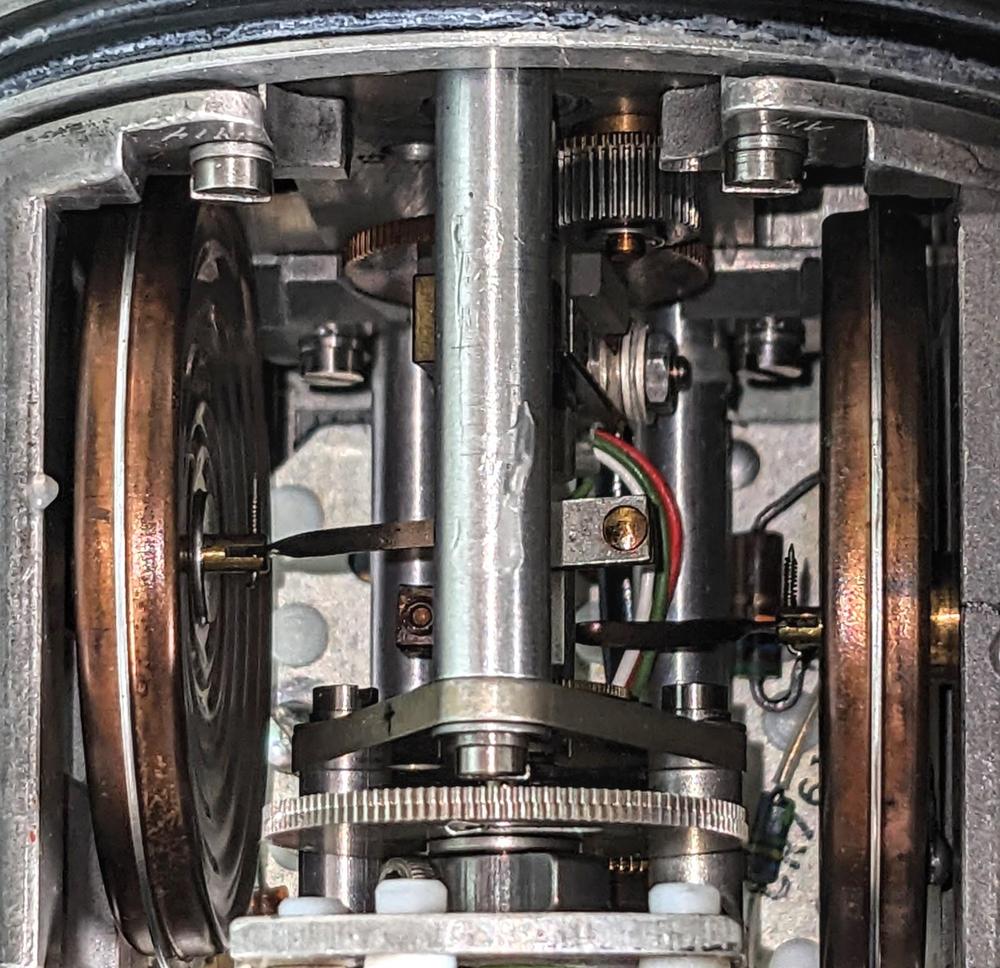

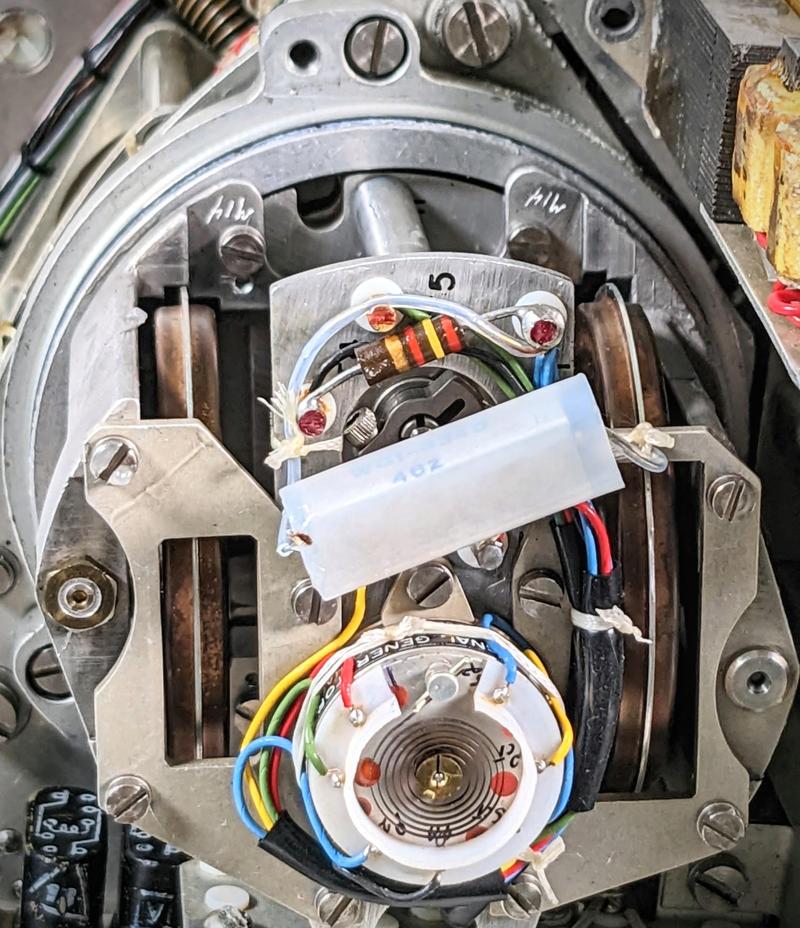

With the unit's cover removed, you can see the internal mechanisms and circuitry. Each of the three indicators is controlled by a small DC motor with a potentiometer providing feedback. To the right, two circuit boards provide the electronics to drive the indicators.4 At the upper right, the black blob is a 26-volt 400-Hertz transformer to power the unit. Some power supply components are in front of it. Below the transformer is an orangish flexible printed-circuit board, which seems advanced for the timeframe. This flexible ribbon connects the transformer, the external connector, and the printed-circuit board sockets, providing the backplane for the system.

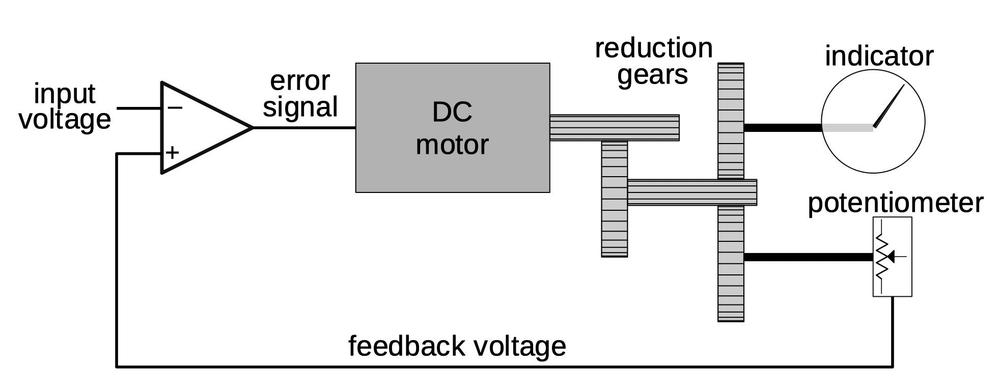

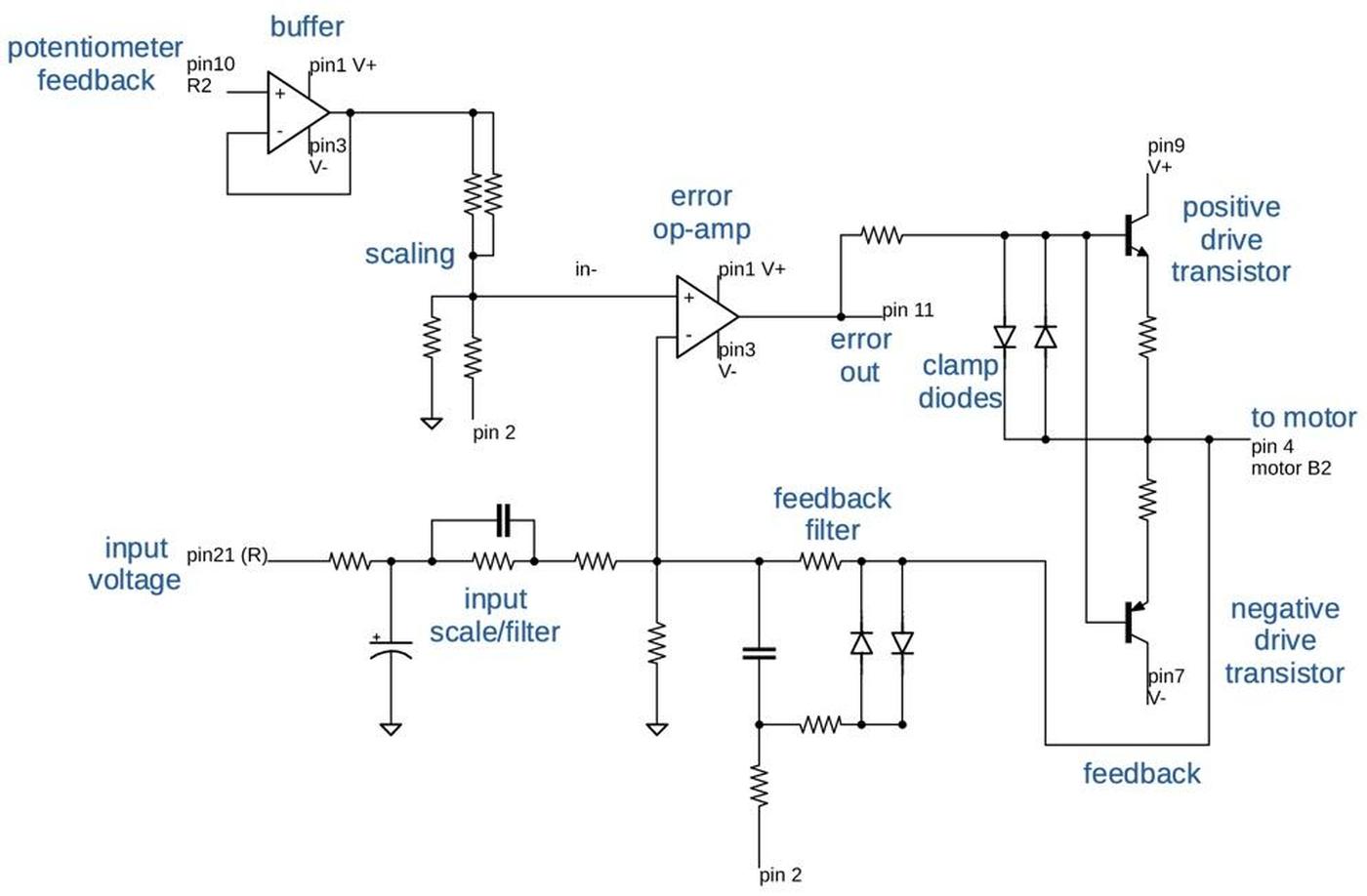

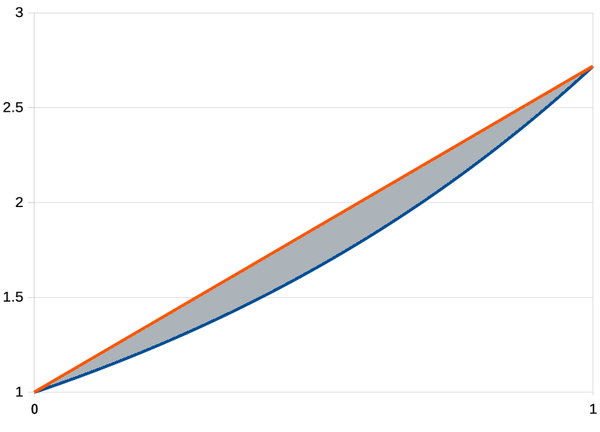

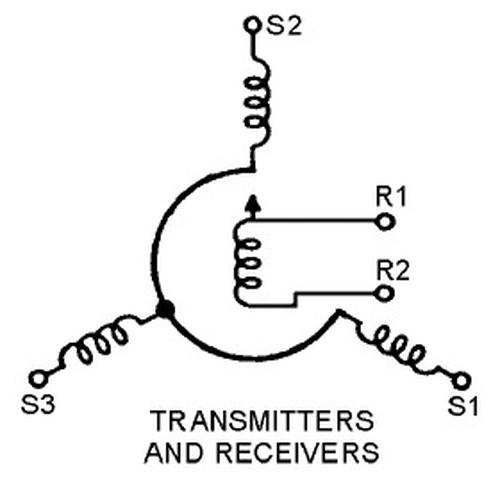

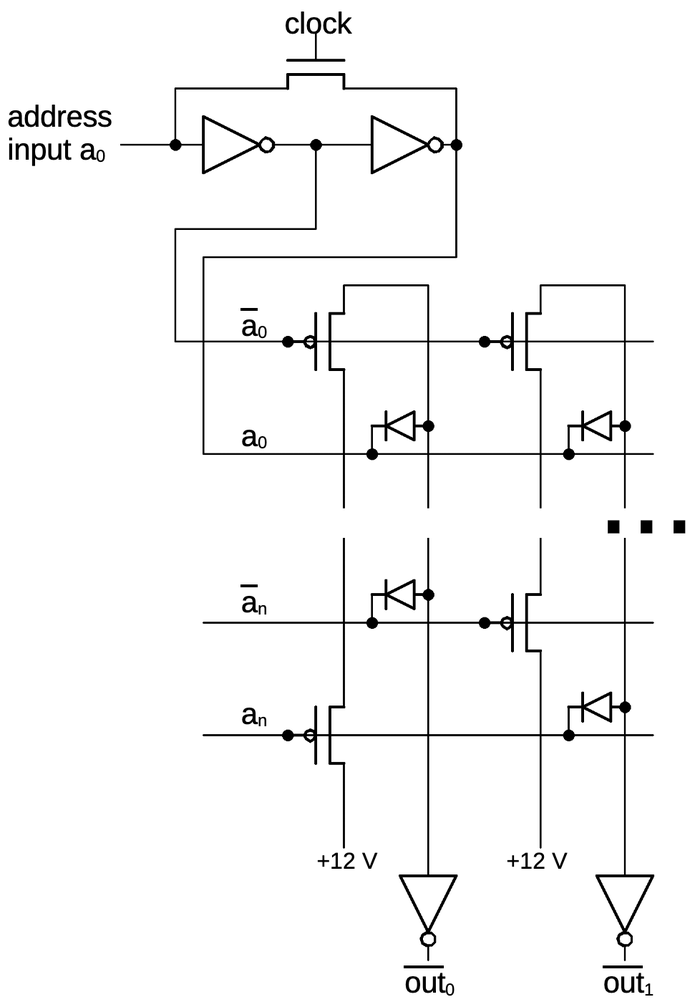

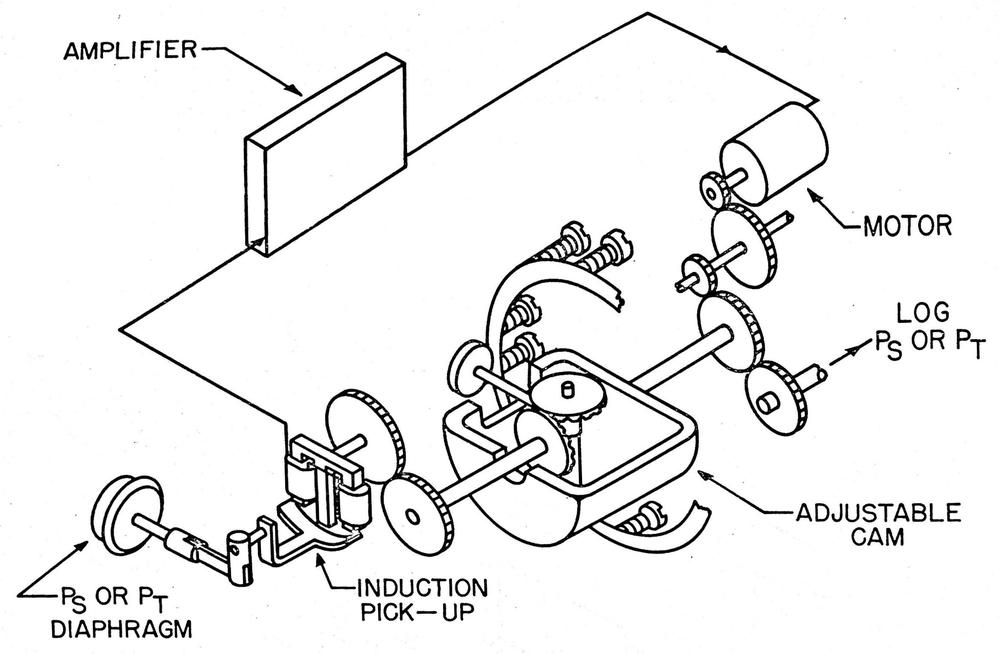

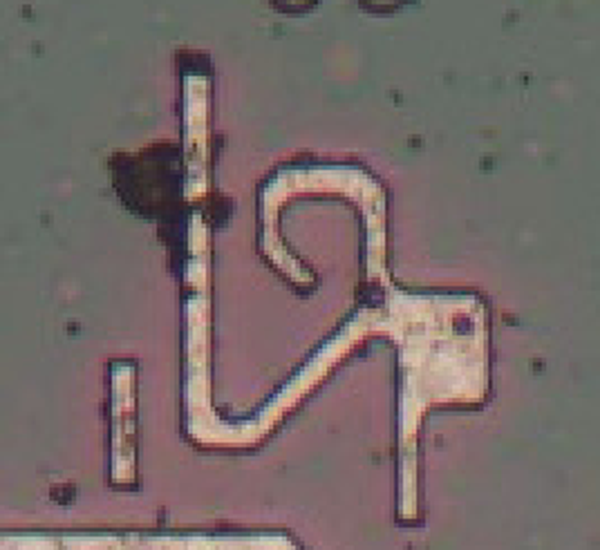

The diagram below shows the principle behind the servo mechanism that controls each indicator. The goal is to rotate the indicator to a position corresponding to the input voltage. A feedback loop is used to achieve this. The potentiometer provides a voltage proportional to its rotation. The input voltage and the feedback voltage are inputs to an op amp, which generates an error signal based on the difference between the inputs. The error signal rotates the DC motor in the appropriate direction until the potentiometer voltage matches the input voltage. Because the indicator and the potentiometer are geared together, the indicator will be in the correct position. As the input voltage changes, the system will continuously track the changes and keep the indicator updated.
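As a rough illustration of this feedback loop, here is a minimal Python sketch of a proportional servo. The gain, time step, and 10-volt reference are made-up values for the simulation, not measurements from the unit, and the real circuit is analog rather than a loop of discrete steps.

```python
def simulate_servo(input_v, ref_v=10.0, gain=4.0, steps=200, dt=0.01):
    """Toy proportional servo; returns the final pointer position as a fraction 0..1.

    ref_v, gain, steps, and dt are illustrative assumptions, not measured values.
    """
    position = 0.0  # rotation of the pointer and potentiometer (geared together)
    for _ in range(steps):
        feedback_v = position * ref_v      # potentiometer voltage, proportional to rotation
        error_v = input_v - feedback_v     # op amp: difference between the two inputs
        position += gain * error_v * dt    # motor turns in the direction of the error
        position = min(max(position, 0.0), 1.0)  # mechanical end stops
    return position


final = simulate_servo(input_v=5.0)
print(f"pointer settles at {final:.3f} of full scale")
```

With these assumed values, the error shrinks on every step until the potentiometer voltage matches the input, so a 5-volt input against a 10-volt reference settles at mid-scale.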

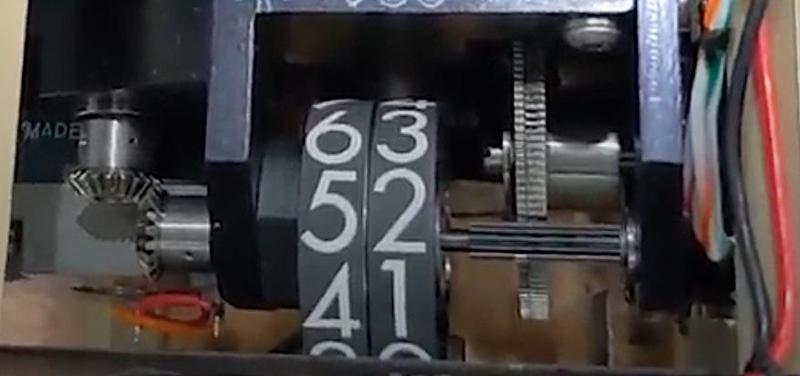

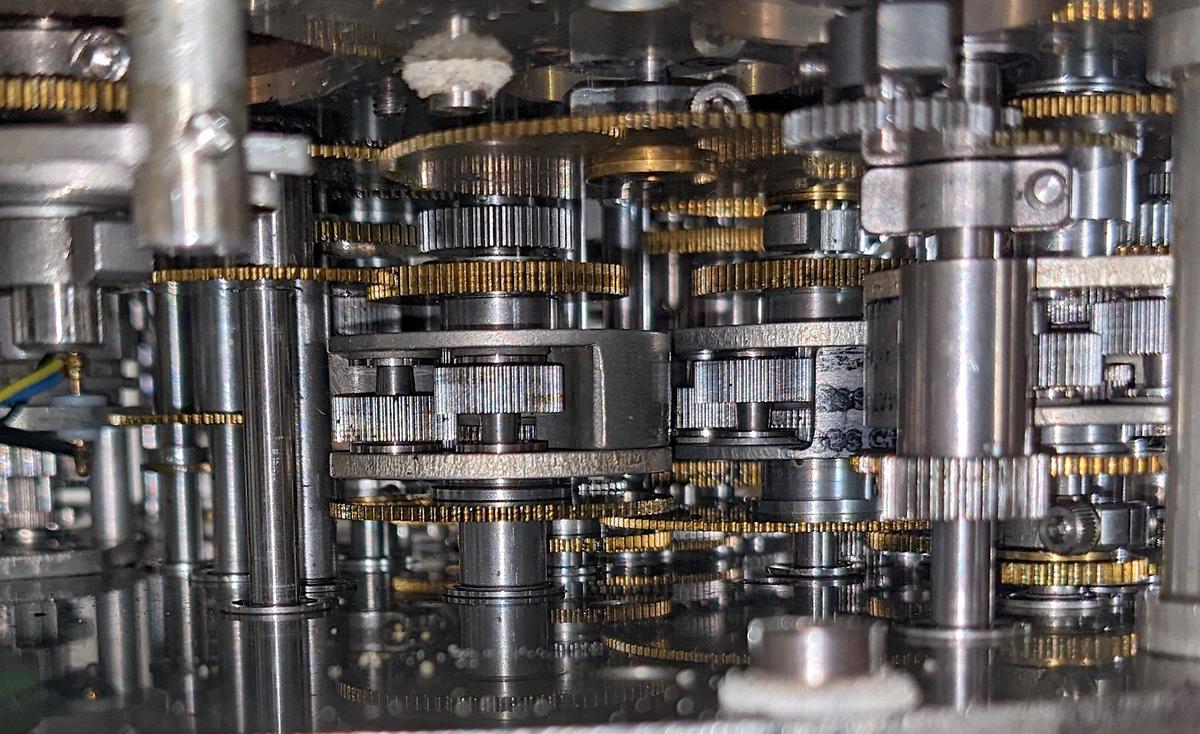

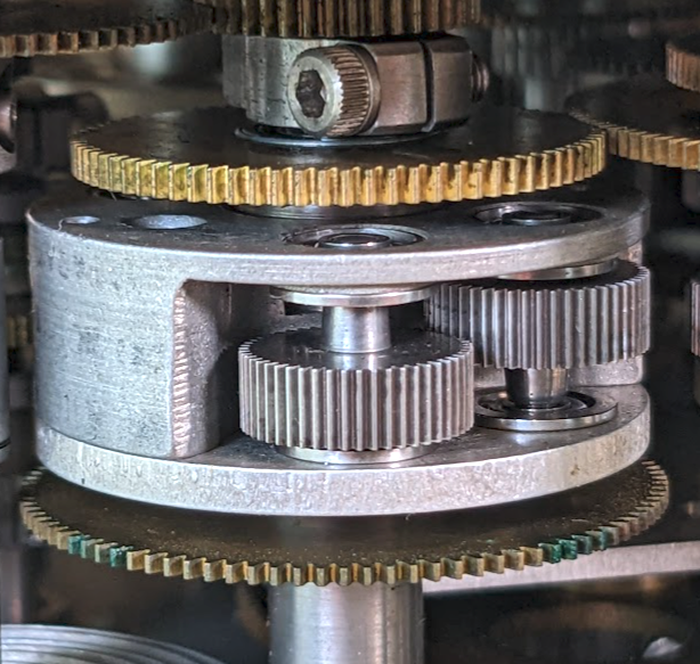

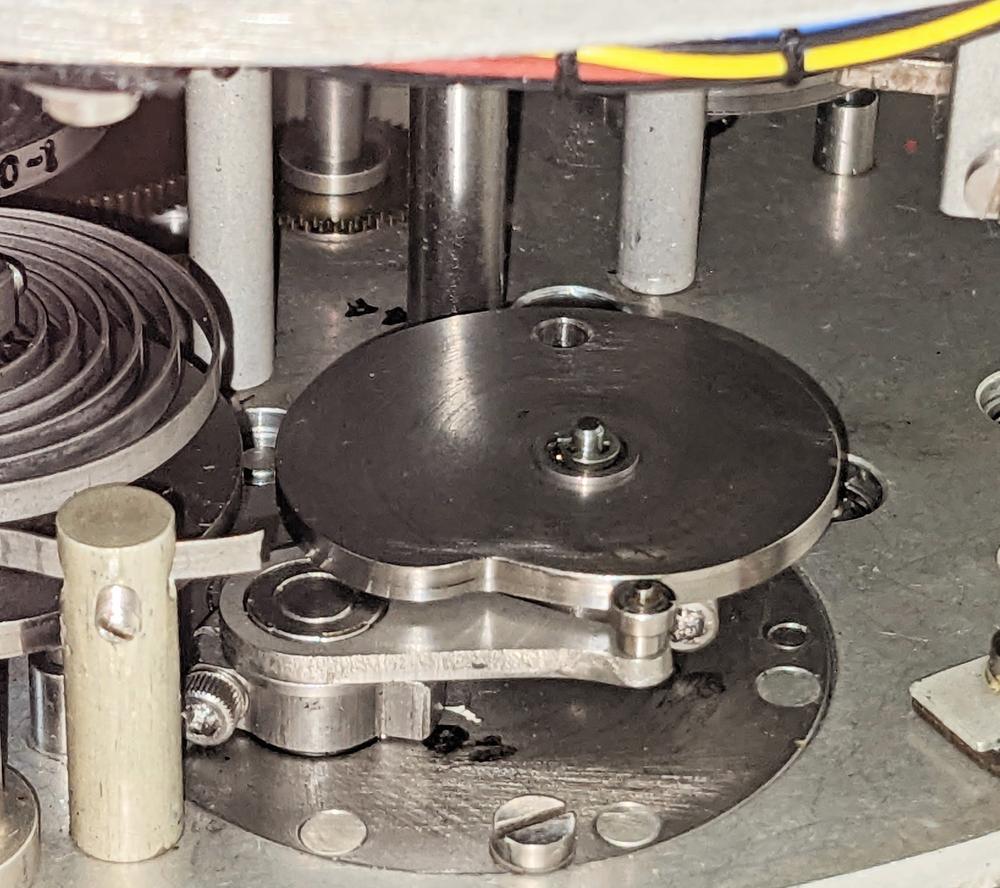

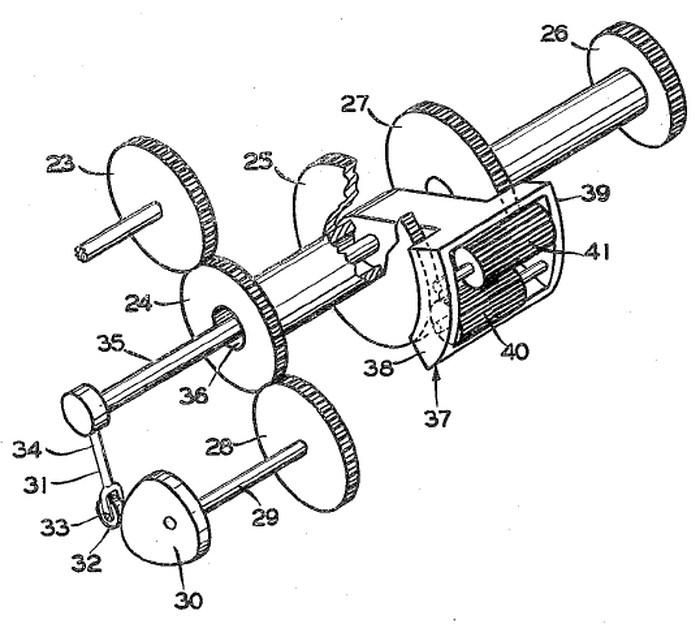

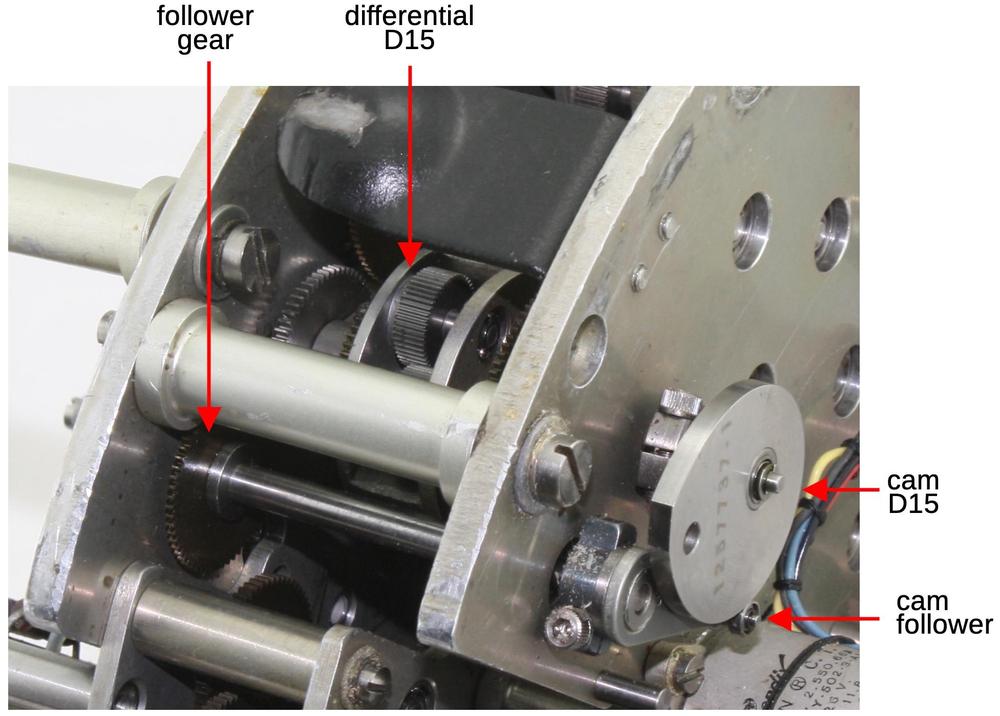

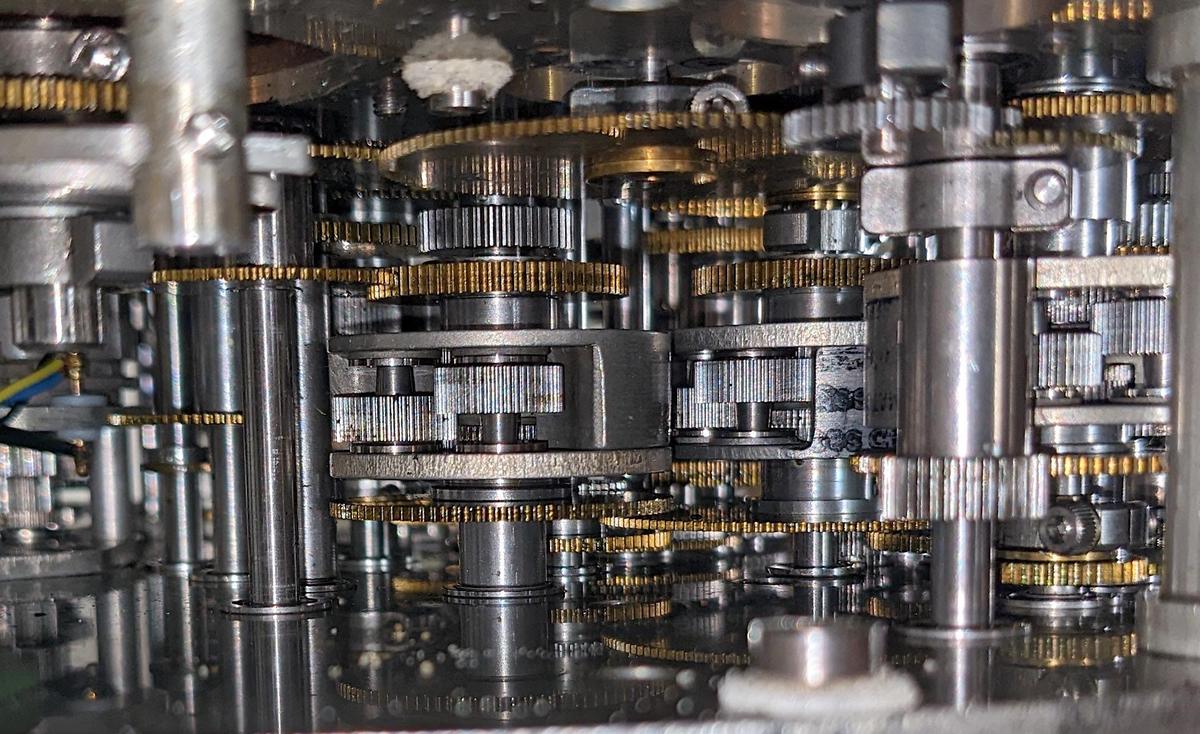

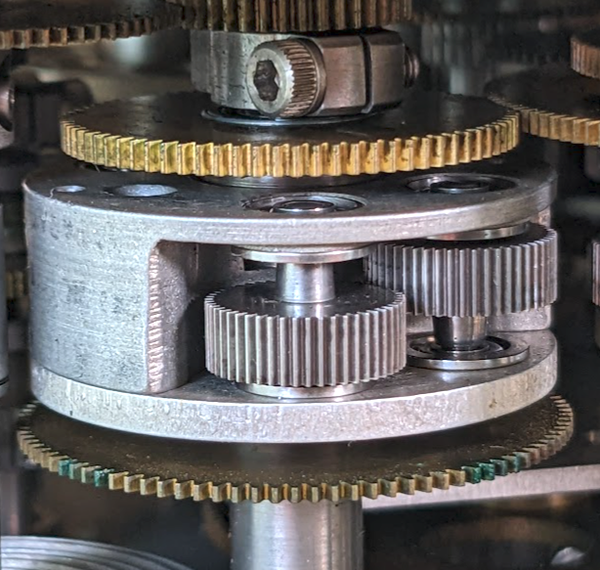

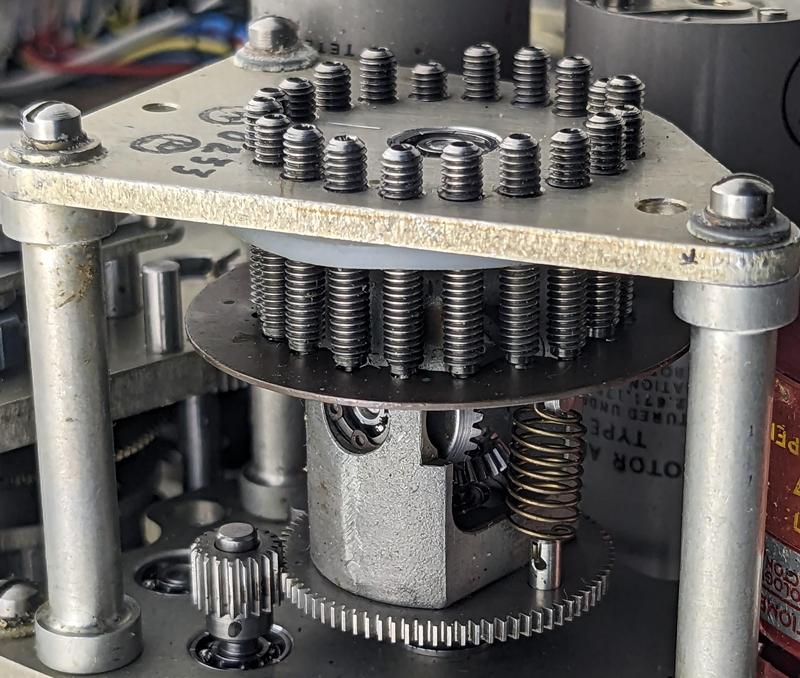

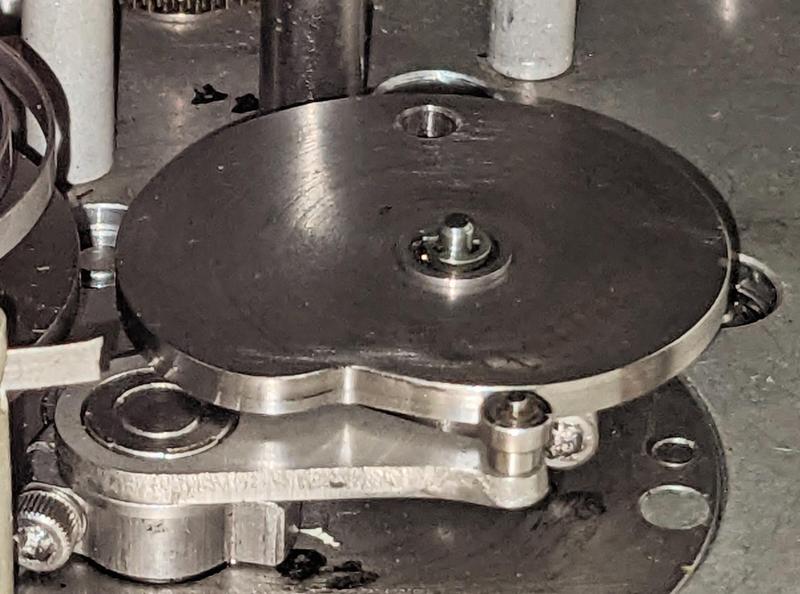

Because the DC motor spins much faster than the dial moves, reduction gears slow the rotation. The photo below shows the gear train in the unit. A potentiometer is at the upper-right with three wires attached.

The Mach number has additional gearing to rotate the numbered wheels. When the low-digit wheel cycles around, it advances the high-digit wheel, similar to an odometer.

Fault checking

One interesting feature of the indicator unit is that it implements fault checking to alert the pilot if something goes wrong. The front panel has a three-position flag. By default it's in the OFF position. Powering the coil in one direction rotates the flag to the blank side. Powering the coil in the other direction rotates the flag to the "VMO" position which indicates that the pilot has exceeded the maximum operating speed.

I figured that powering up the unit would move the flag out of the OFF position, but it's more complicated than that. First, the unit checks that the air data computer is providing a suitable reference voltage. Second, the unit verifies that the motor voltages for the two needles are within limits; this ensures that the servo loop is operating successfully. Third, the unit checks that signals are received on status pins K and L. The unit only moves out of the OFF state if all these conditions are satisfied.5 Thus, if the unit receives bad signals or is malfunctioning, the pilot will be alerted by the OFF indicator, rather than trusting the faulty display.
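The checks above can be summarized as a small decision function. This is only my reading of the behavior, sketched in Python: the 10-volt minimum reference comes from the circuit description in this post, but the motor-voltage limit is a hypothetical placeholder, and treating a low pin L as "VMO" rather than "OFF" follows the footnote rather than a verified truth table.

```python
from enum import Enum


class Flag(Enum):
    OFF = "OFF"
    BLANK = "blank"
    VMO = "VMO"


MIN_REF_V = 10.0      # reference voltage must be at least 10 volts
MOTOR_LIMIT_V = 5.0   # hypothetical limit; the real thresholds were not measured


def flag_state(ref_v, motor_volts, pin_k, pin_l):
    """Return the flag position for the given inputs (illustrative model only)."""
    ref_ok = ref_v >= MIN_REF_V
    # A motor voltage stuck far from zero suggests the servo loop is not settling.
    motors_ok = all(abs(v) <= MOTOR_LIMIT_V for v in motor_volts)
    if not (ref_ok and motors_ok and pin_k):
        return Flag.OFF
    if not pin_l:
        return Flag.VMO   # air data computer signals that maximum speed is exceeded
    return Flag.BLANK


print(flag_state(11.7, [0.2, -0.1], pin_k=True, pin_l=True))
print(flag_state(11.7, [0.2, -0.1], pin_k=True, pin_l=False))
print(flag_state(8.0, [0.2, -0.1], pin_k=True, pin_l=True))
```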

The circuitry

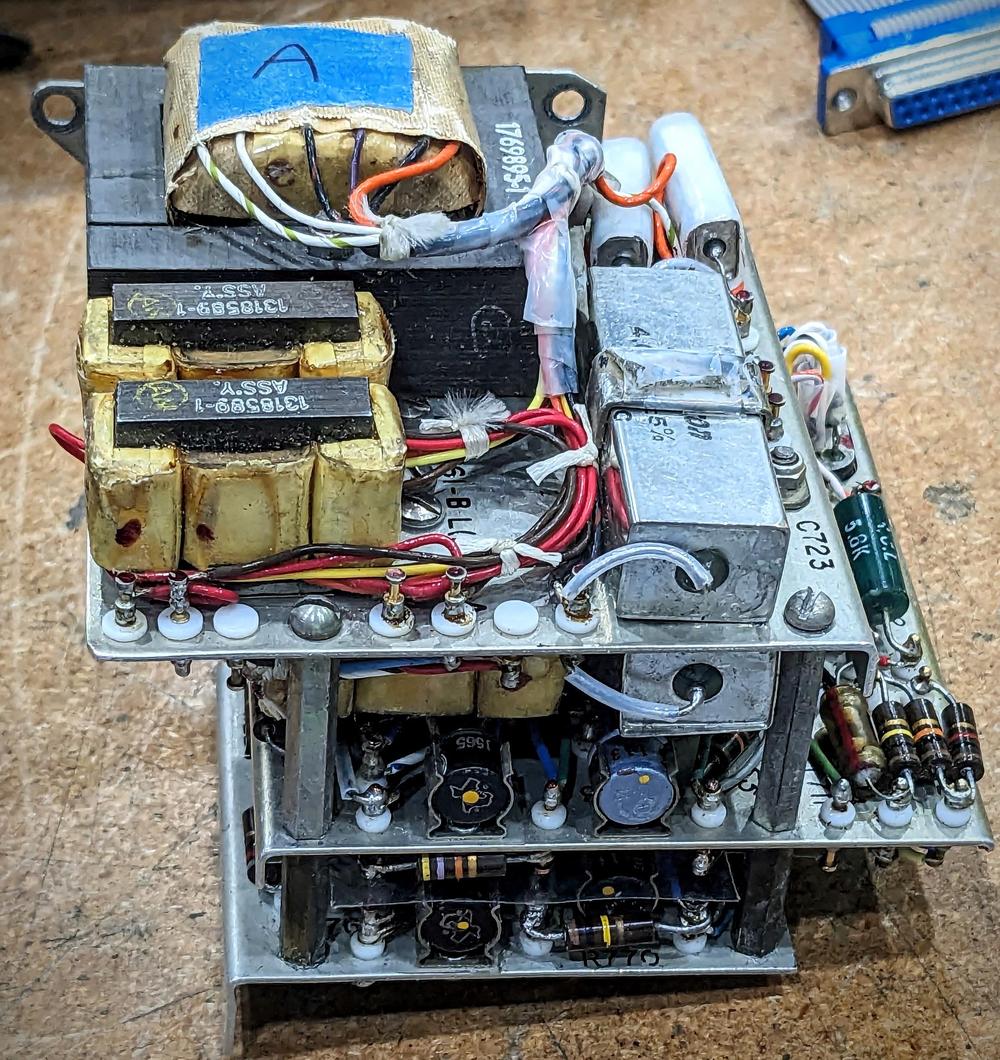

The unit is powered by 26 volts, 400 Hz, a standard voltage for aviation. A small transformer provides multiple outputs for the various internal voltages. The unit has four power supplies: three on the first board and one on the back wall of the unit. One power supply is for the status indicator, one is for the op amps, one powers the 41.7V motors, and the fourth provides other power.

One subtlety is how the feedback potentiometers are powered. The servo loop compares the potentiometer voltage with the input voltage. But this only works if the potentiometer and the input voltage are using the same reference. One solution would be for the indicator unit and the air data computer to contain matching precision voltage regulators. Instead, the system uses a simpler, more reliable approach: the air data computer provides a reference voltage that the indicator unit uses to power the potentiometers.6 With this approach, the air data computer's voltage reference can fluctuate and the indicator will still reach the right position. (In other words, a 5V input with a 10V reference and a 6V input with a 12V reference are both 50%.)
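The arithmetic behind this ratiometric approach is simple enough to sketch in Python. The function names are mine, and the fixed 10-volt local regulator in the second function is a hypothetical alternative design used only for contrast.

```python
def ratiometric_position(input_v, ref_v):
    """Pointer fraction when the potentiometer is powered by the supplied reference."""
    return input_v / ref_v


def fixed_reference_position(input_v, local_ref_v=10.0):
    """Pointer fraction if the indicator used its own regulator instead (hypothetical)."""
    return input_v / local_ref_v


# The air data computer's reference drifts from 10 V to 12 V, and its output scales with it.
print(ratiometric_position(5.0, 10.0))   # 0.5
print(ratiometric_position(6.0, 12.0))   # 0.5
print(fixed_reference_position(6.0))     # 0.6
```

With the shared reference, the drift cancels out; with an independent local reference, the same drift would show up as a pointer error.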

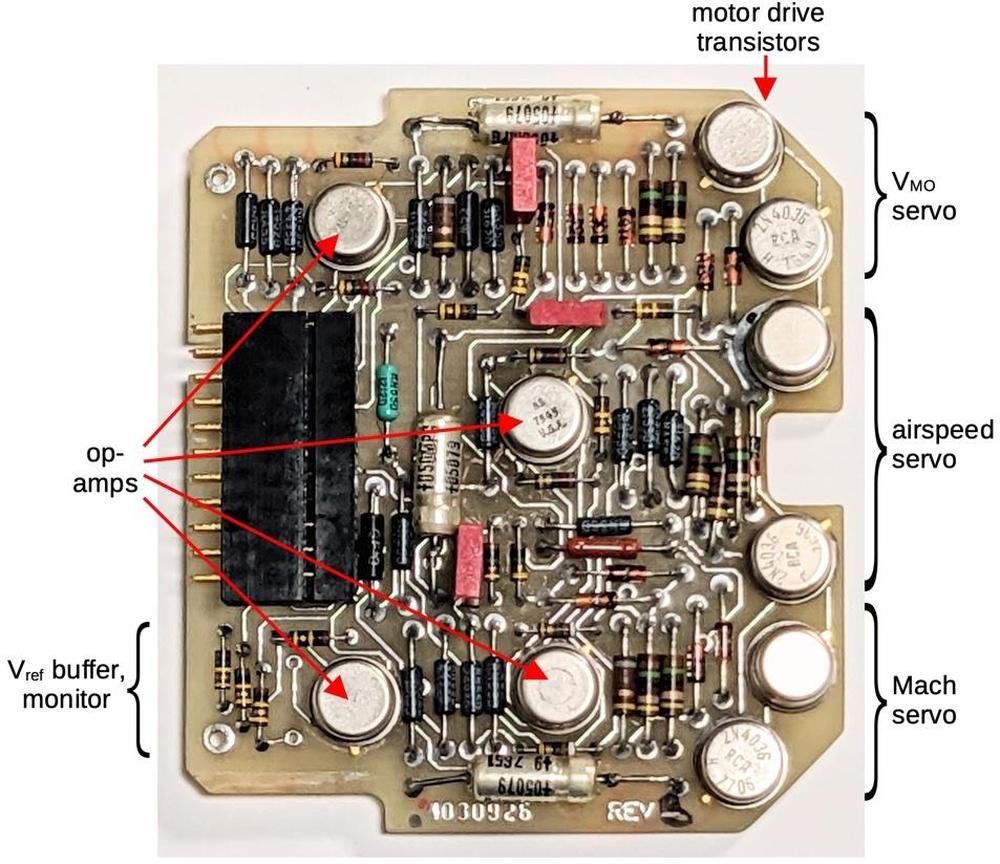

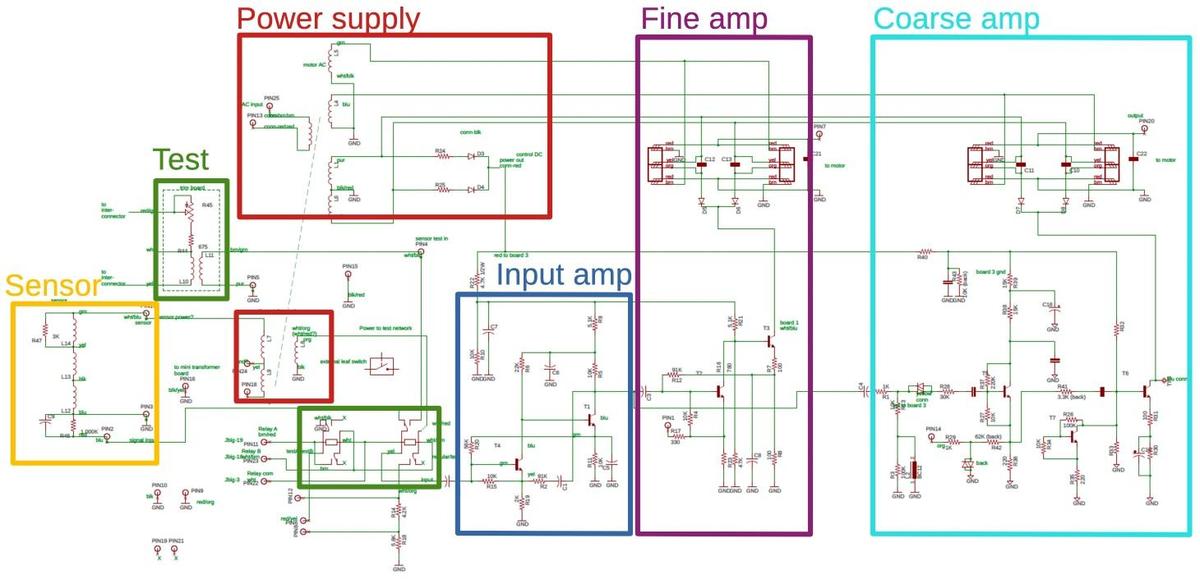

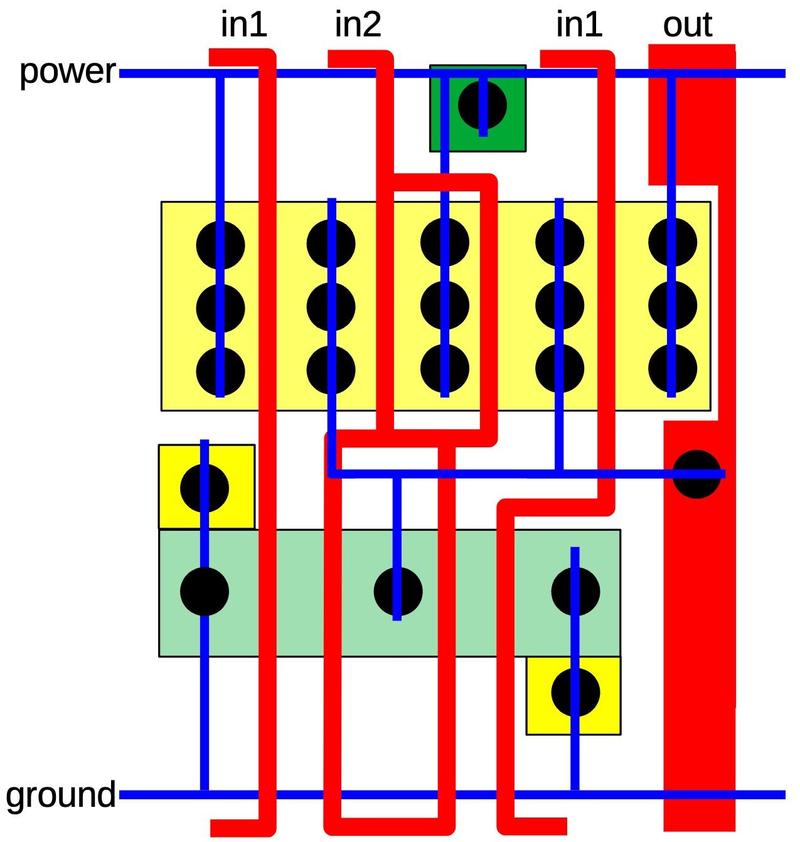

The diagram below shows the board with the servo circuitry. The board uses dual op-amp integrated circuits, packaged in 10-pin metal cans that protected against interference.7 The ICs and some of the other components have obscure military part numbers; I don't know if this unit was built for military use or if military-grade parts were used for reliability.

The circuitry in the lower-left corner handles the reference voltage from the air data computer. The board buffers this voltage with an op amp to power the three feedback potentiometers. The op amp also ensures that the reference voltage is at least 10 volts. If not, the indicator unit shows the "OFF" flag to alert the pilot.

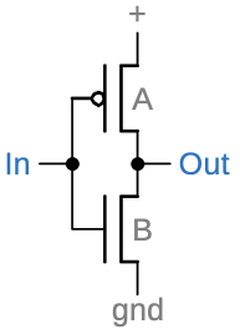

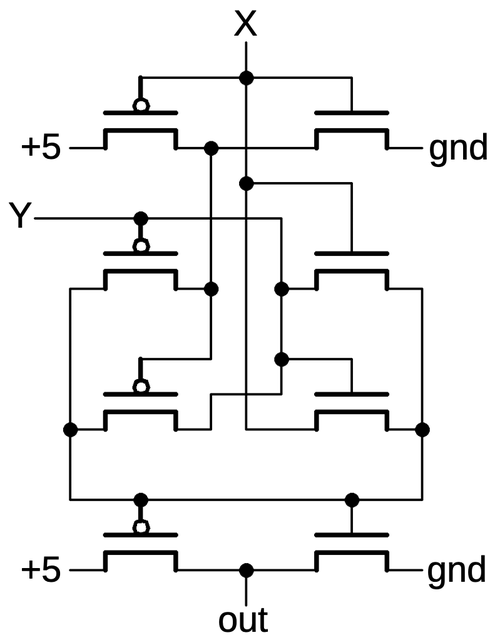

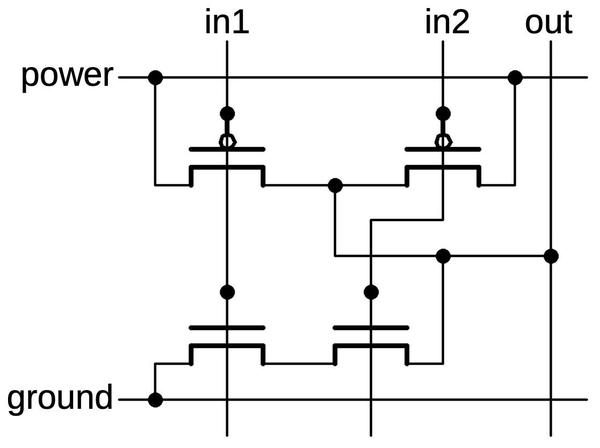

The schematic below shows one of the servo circuits; the three circuits are roughly the same. The heart of the circuit is the error op amp in the center. It compares the voltage from the potentiometer with the input voltage and generates an error output that moves the motor appropriately. A positive error output will turn on the upper transistor, driving the motor with a positive voltage. Conversely, a negative error output will turn on the lower transistor, driving the motor with a negative voltage. The motor drive circuit has clamp diodes to limit the transistor base voltages.

The op amp also receives a feedback signal from the motor output. I don't entirely understand this signal, which goes through a filter circuit with resistors, diodes, and a capacitor. I think it dampens the motor signal so the motor doesn't overshoot the desired position. I think it also keeps the transistor drive signal biased relative to the emitter voltage (i.e. the motor output).

On the input side, the potentiometer voltage goes through an op amp follower buffer, which simply outputs its input voltage. This may seem pointless, but the op amp provides a high-impedance input so the potentiometer's voltage doesn't get distorted.

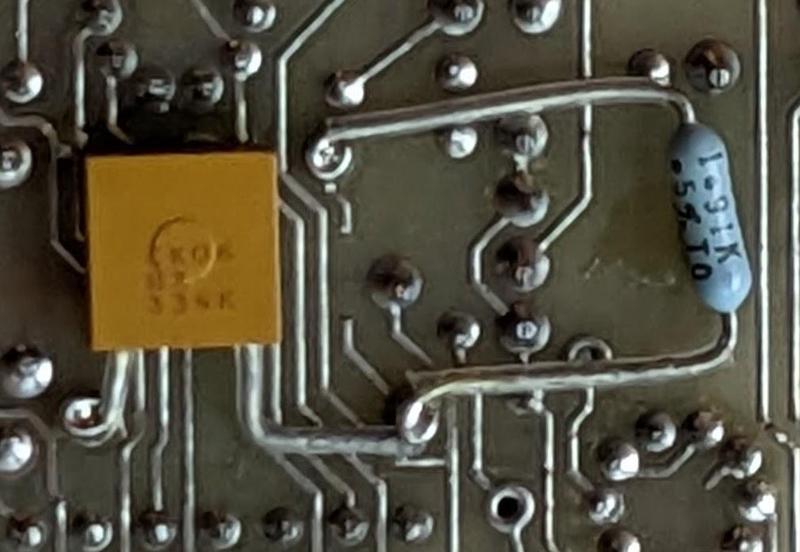

The external input voltage goes through a resistor/capacitor circuit to scale it and filter out noise. Curiously, the circuit board was modified by cutting a trace and adding a resistor and capacitor to change the input circuit for one of the inputs. In the photo below, you can see the added resistor and capacitor; the cut trace is just to the right of the capacitor. I don't know if this modification changed the scale factor or if it filtered out noise. A label on the box says that Honeywell performed a modification on November 8, 1991, which presumably was this circuit.

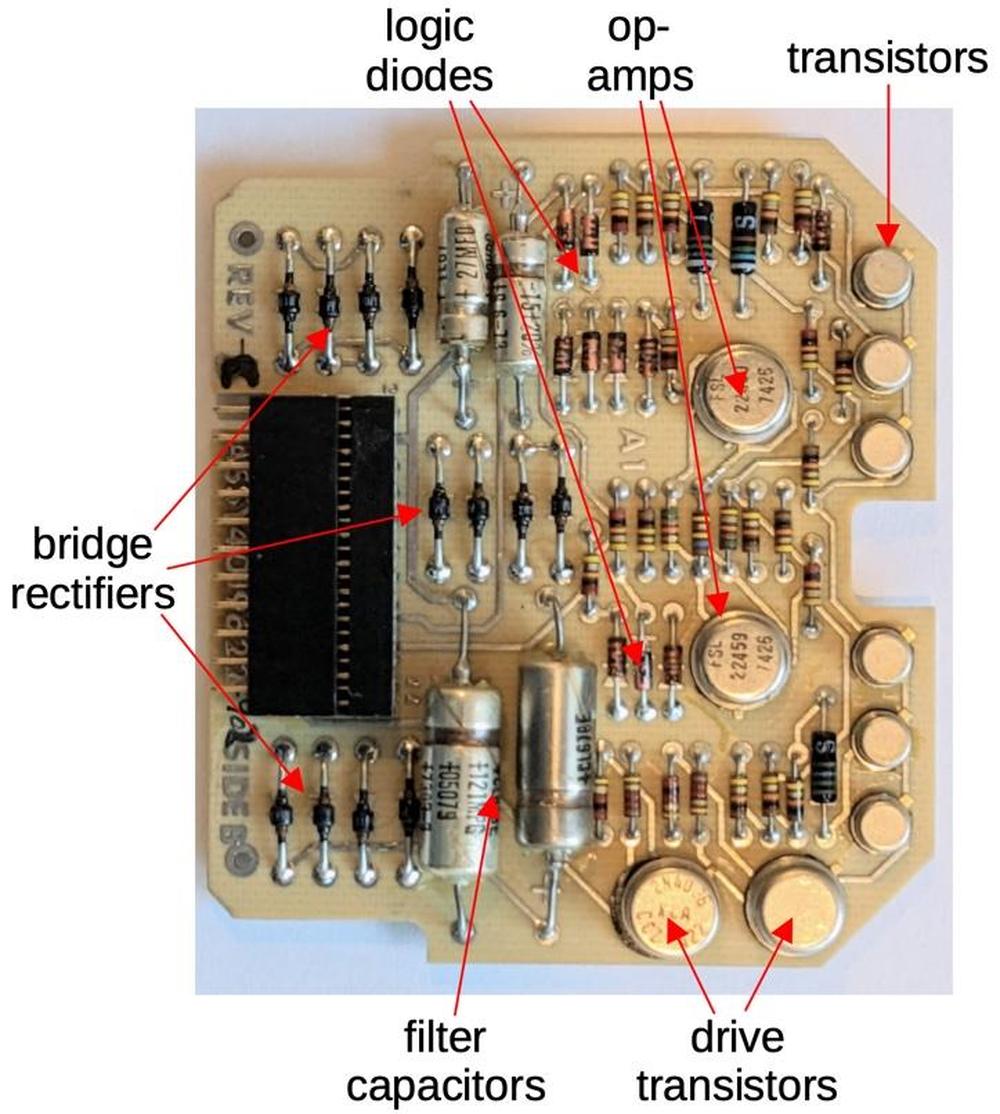

The second board implements three power supplies as well as the circuitry for the OFF/VMO flag. The power supplies are simple and unregulated, just diode bridges to convert AC to DC, along with filter capacitors. Most of the circuitry on the board controls the status flag. Two dual op amps check the motor voltages against upper and lower limits to ensure that the motors are tracking the inputs. These outputs, along with other logic status signals, are combined with diode-transistor logic to determine the flag status. Driver transistors provide +18 or -18 volts to the flag's coil to drive it to the desired position.

Conclusions

After reverse-engineering the pinout, I connected the airspeed indicator to a stack of power supplies and succeeded in getting the indicators to operate (video). This unit is much more complex than I expected for a simple display, with servoed motors controlled by two boards of electronics. Air safety regulations probably account for much of the complexity, ensuring that the display provides the pilot with accurate information. For all that complexity, the unit is essentially a voltmeter, indicating three voltages on its display. This airspeed indicator is a bit different from most of the hardware I examine, but hopefully you found this look at its internal circuitry interesting.

You can follow me on Twitter @kenshirriff or RSS. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff.

Notes and references

-

Since the unit has airspeed and maximum airspeed indicators, you might expect it to display the maximum airspeed warning flag based on the two speed inputs. Instead, the flag is controlled by input pin "L". In other words, the air data computer, not the indicator unit, determines when the maximum airspeed is exceeded. ↩

-

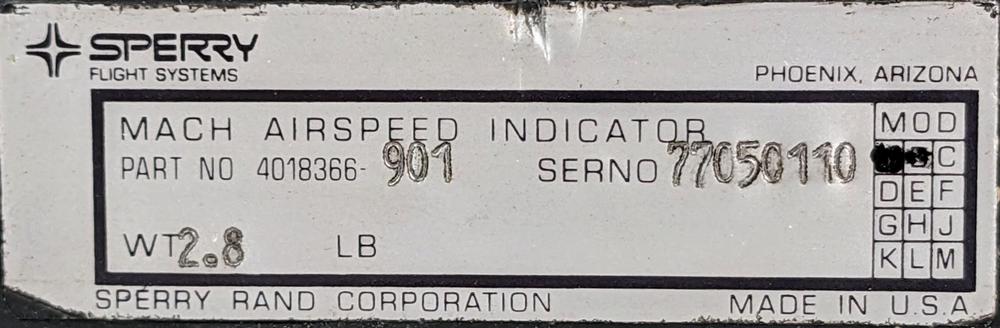

This unit is a "Mach Airspeed Indicator", 4018366, apparently also called the SI-225,2

Product label with part number 4018366-901.Note that the label says Sperry. In 1986, Sperry attempted to buy Honeywell but instead Burroughs made a hostile takeover bid. The merger of Sperry and Burroughs formed Unisys. A couple of months after the merger, the Sperry Aerospace Group was sold to Honeywell for $1.025 billion. Thus, the indicator became a Honeywell product. This corporate history explains why the unit has a Honeywell product support sticker.

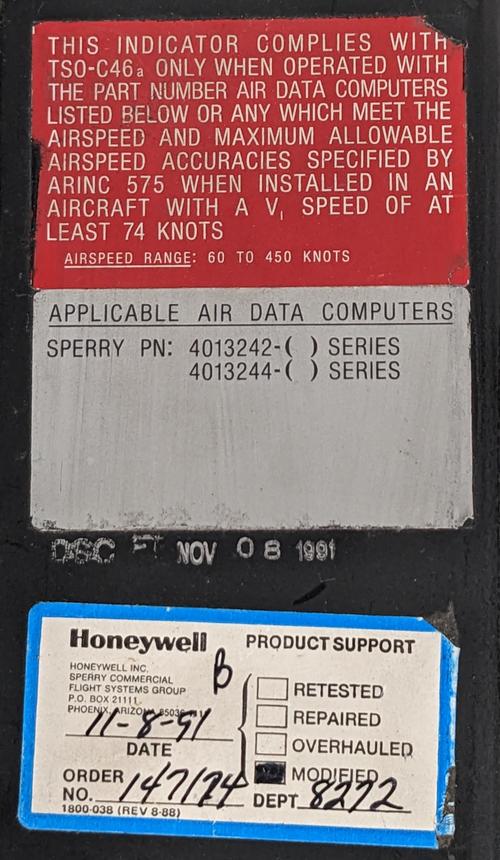

Labels on top of the unit indicate that it worked with the Sperry 4013242 and 4013244 air data computers. These became the Honeywell AZ-242 and AZ-244. -

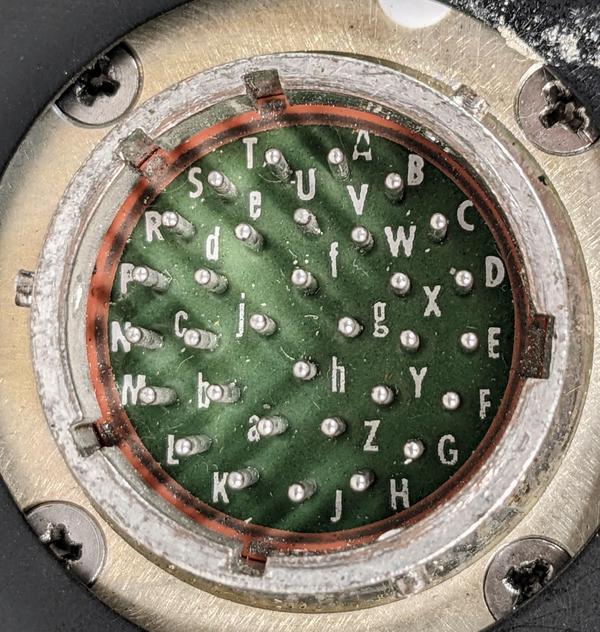

The connector is a 32-pin MIL Spec round connector. Most of the 32 pins are unused. The connector has complex keying with 5 slots. I assume the keying is specific to this indicator, so the wrong indicator doesn't get connected.

A closeup of the 32-pin connector, probably a MIL Spec 18-32.For reference, here is the pinout of the unit. Since this is based on reverse engineering, I don't guarantee it 100%. Don't use this for flight!

Pin Use A 5V illumination B Chassis ground C AC ground E 26V 400 Hz F 26V 400 Hz K Enable L Speed ok M Signal ground N Ref. voltage P Vmax control voltage R Airspeed control voltage S Mach control voltage V Chassis ground Pins D, G, H, J, T, U, W, X, Y, Z, a, b, c, d, e, f, g, h, and j are unused. ↩

-

The chassis has an empty slot for a third circuit board. My guess is that this chassis was used for multiple types of indicators and others required a third board. ↩

-

If the L pin goes low, the indicator will move to the VMO position. ↩

-

My hypothesis is that the correct reference voltage is 11.7 volts. This yields a scale factor of 1 volt equals 50 knots. It also matches up the display's change in scale at 250 knots with the measured scale change. ↩

-

The meter uses three different integrated circuits in 10-pin metal cans with mysterious military markings: "FHL 24988", "JM38510/10102BIC 27014", and "SL14040". These appear to all be equivalent to uA747 dual op amps. (Note that JM38510 is not a part number; it is a general military specification for integrated circuits. The number after it is the relevant part number.) ↩

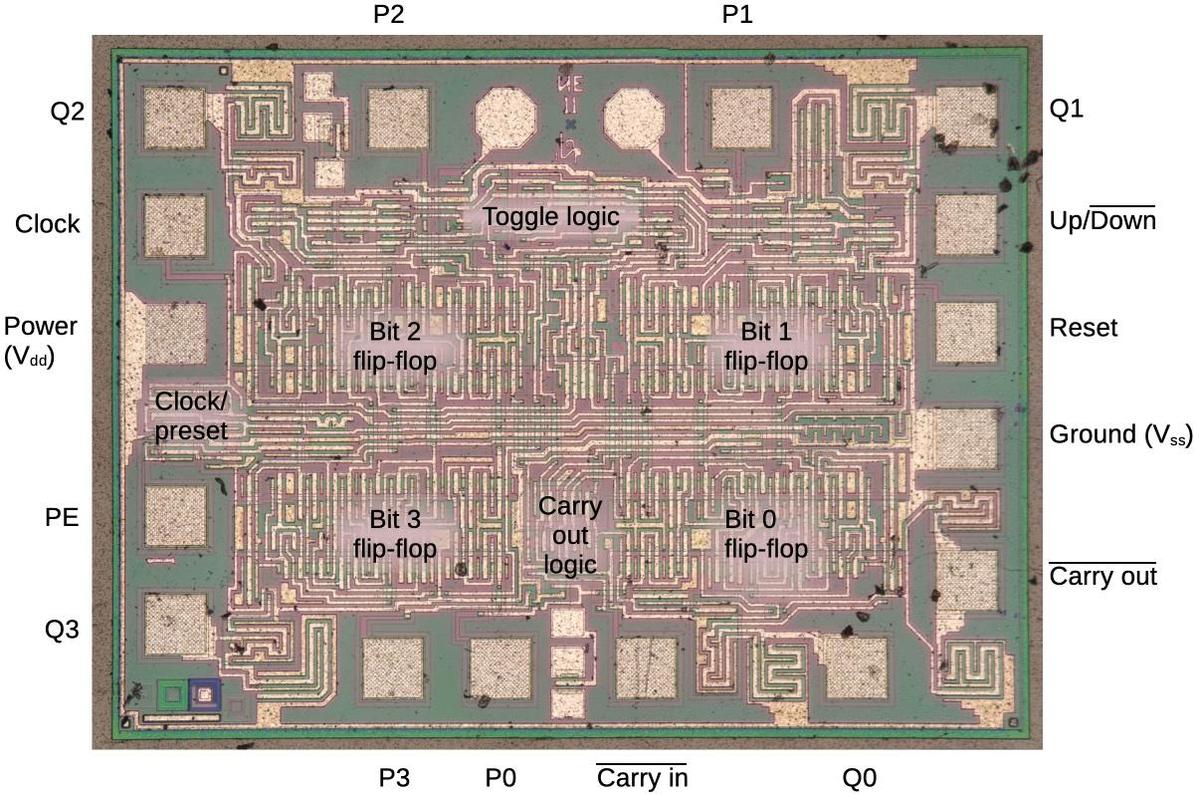

Subject: The 8086 processor's microcode pipeline from die analysis

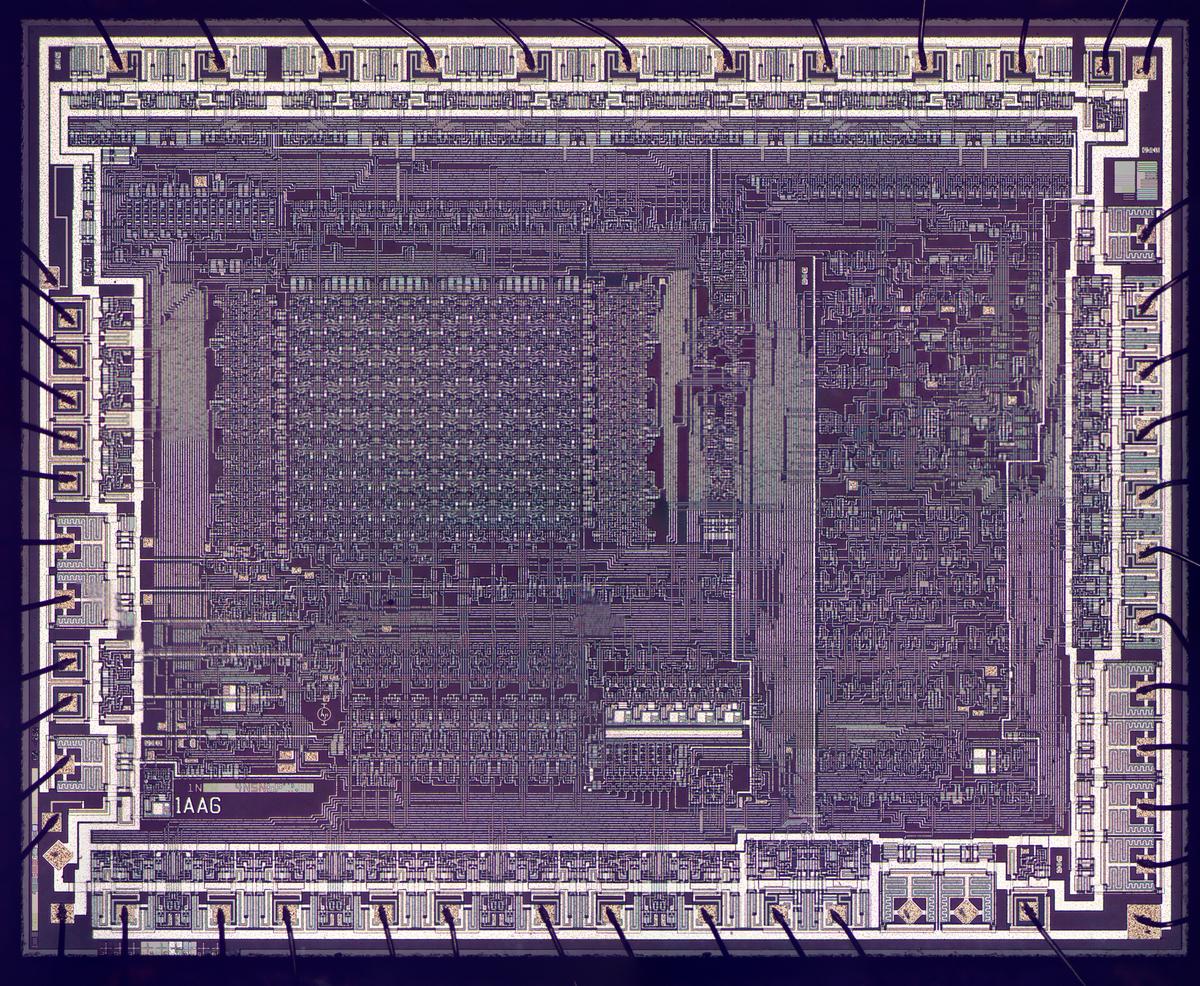

Intel introduced the 8086 microprocessor in 1978, and its influence still remains through the popular x86 architecture. The 8086 was a fairly complex microprocessor for its time, implementing instructions in microcode with pipelining to improve performance. This blog post explains the microcode operations for a particular instruction, "ADD immediate". As the 8086 documentation will tell you, this instruction takes four clock cycles to execute. But looking internally shows seven clock cycles of activity. How does the 8086 fit seven cycles of computation into four cycles? As I will show, the trick is pipelining.

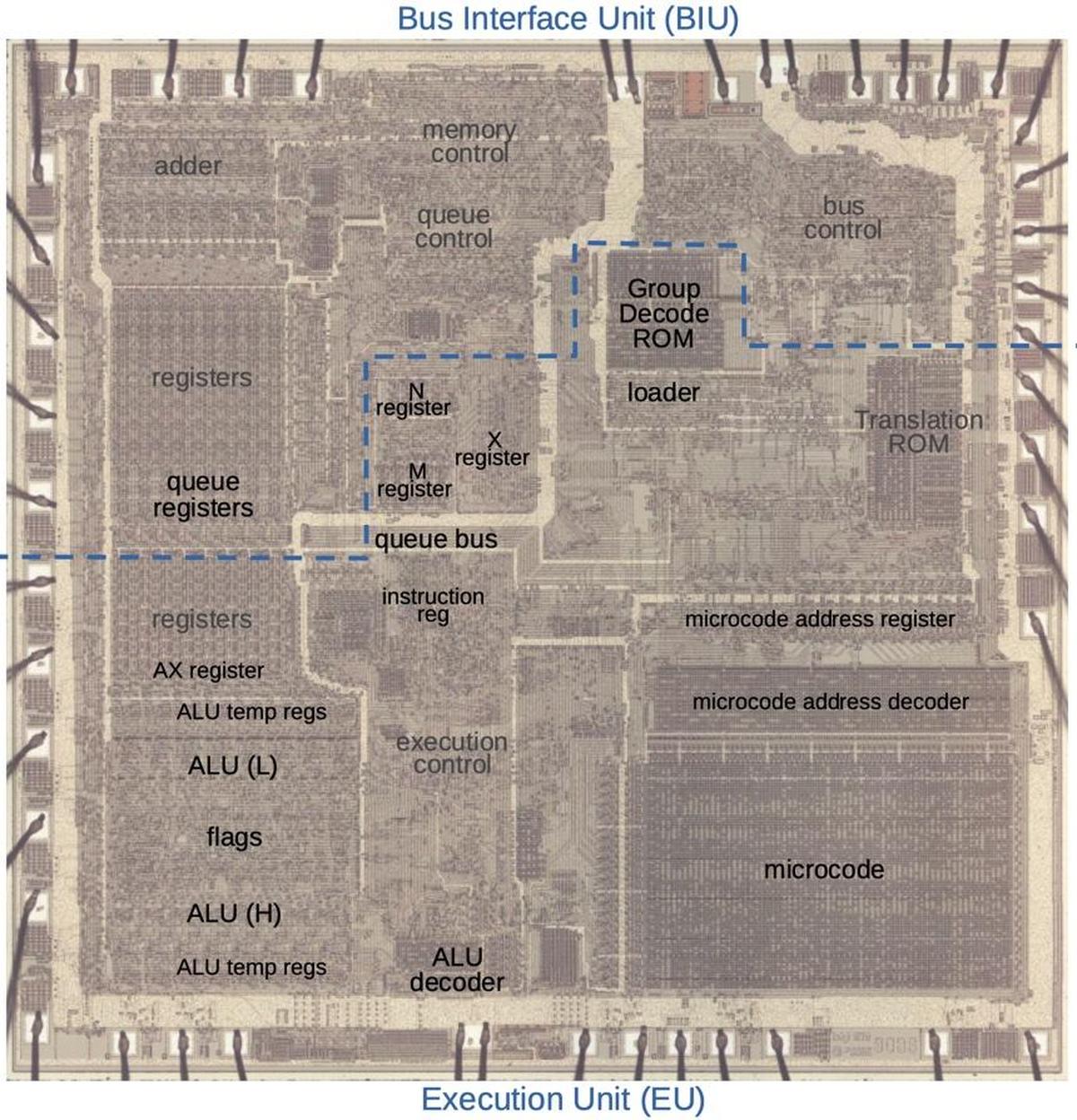

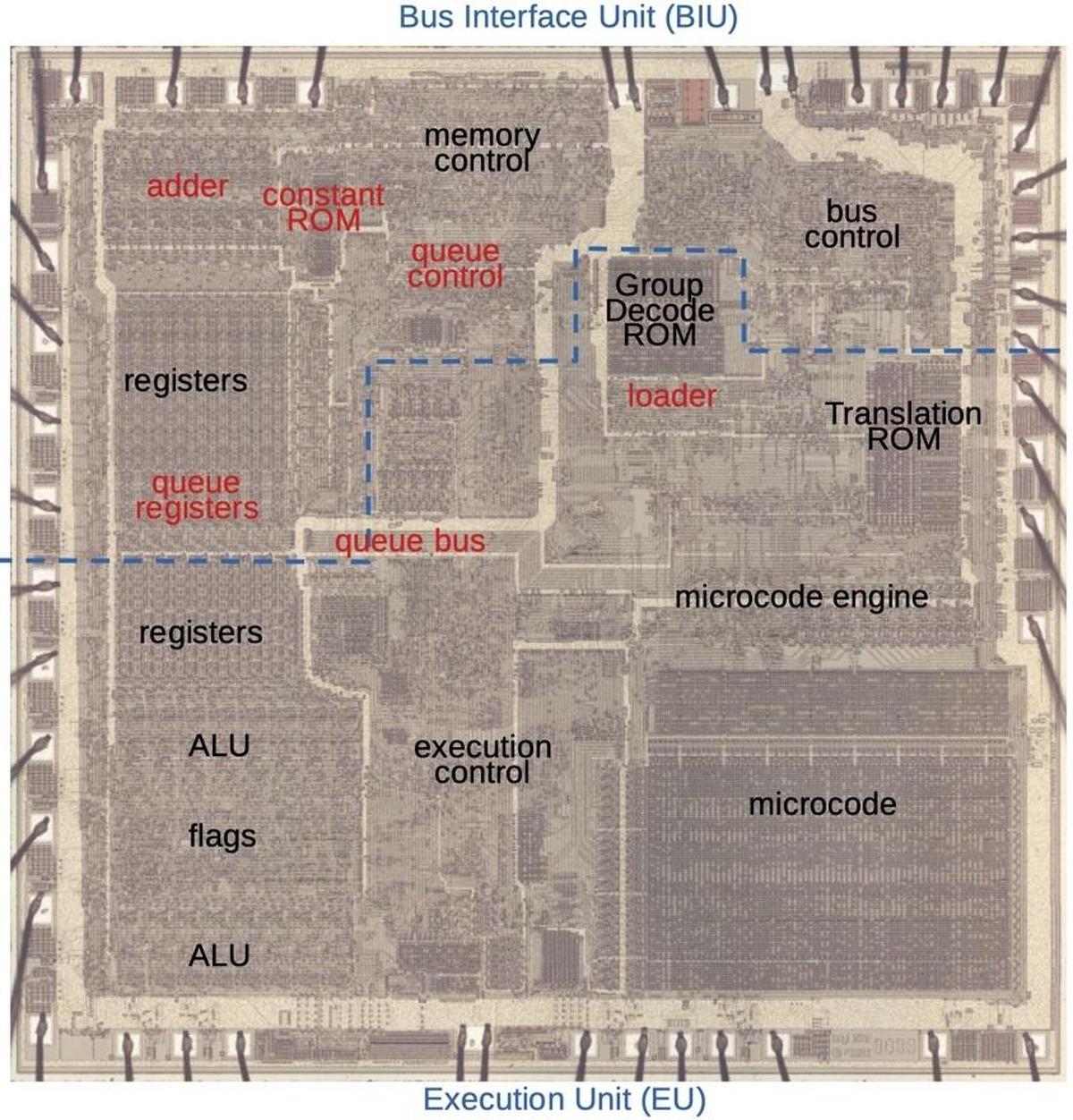

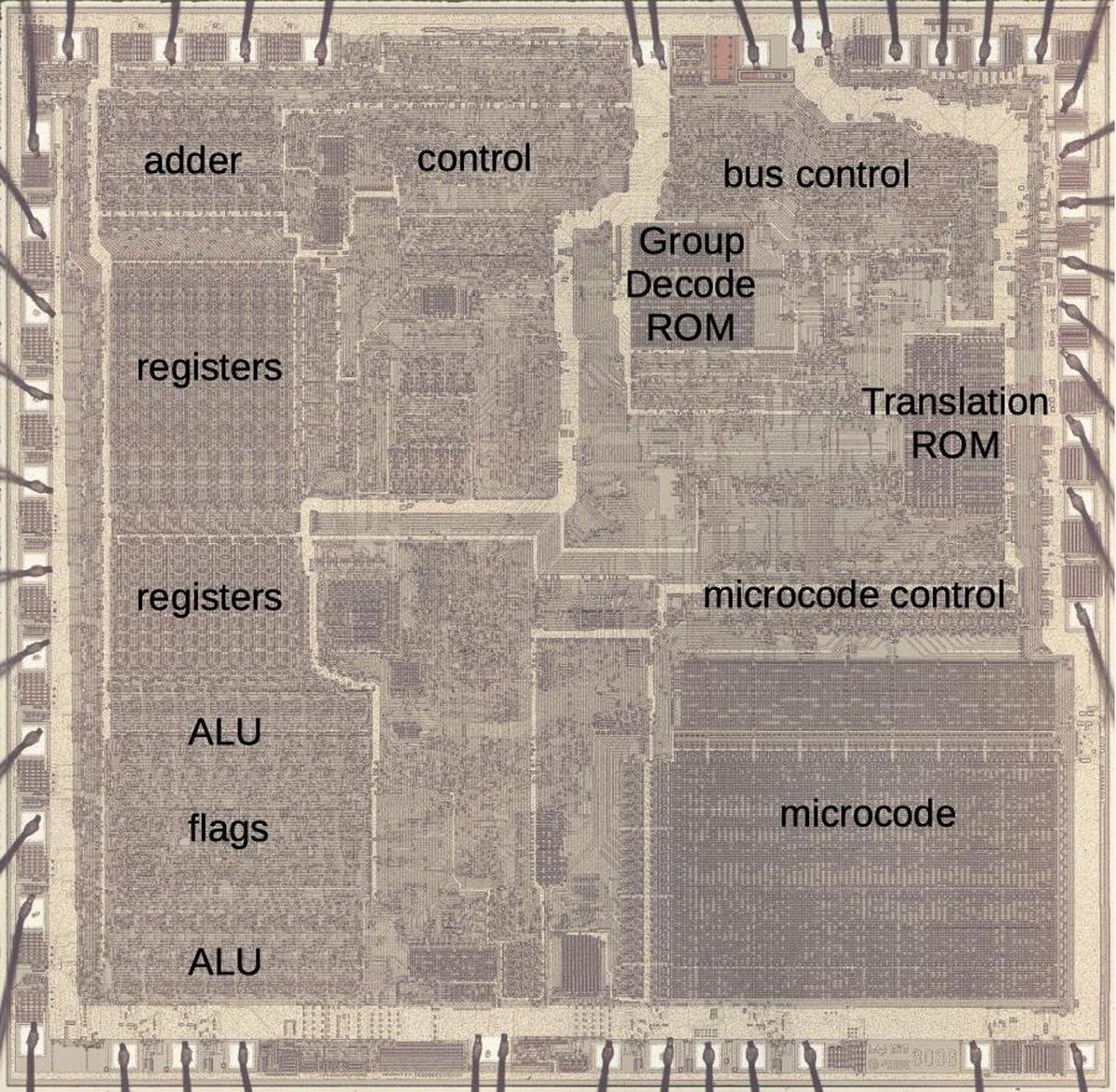

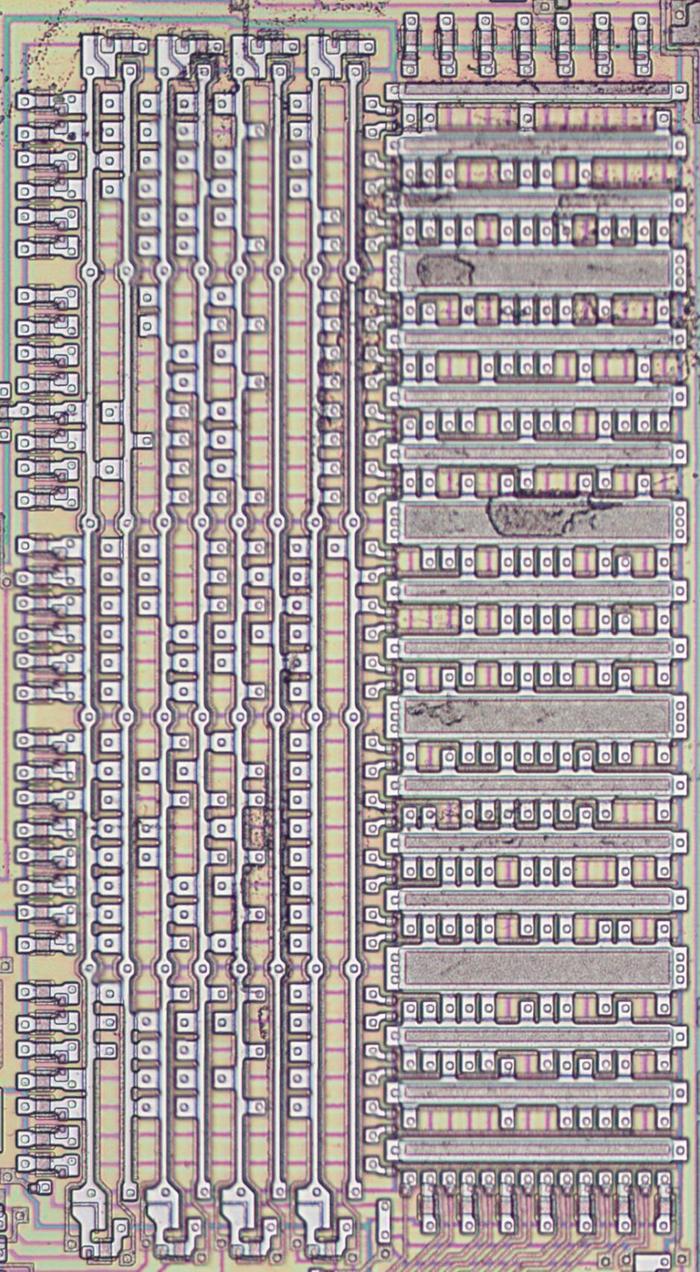

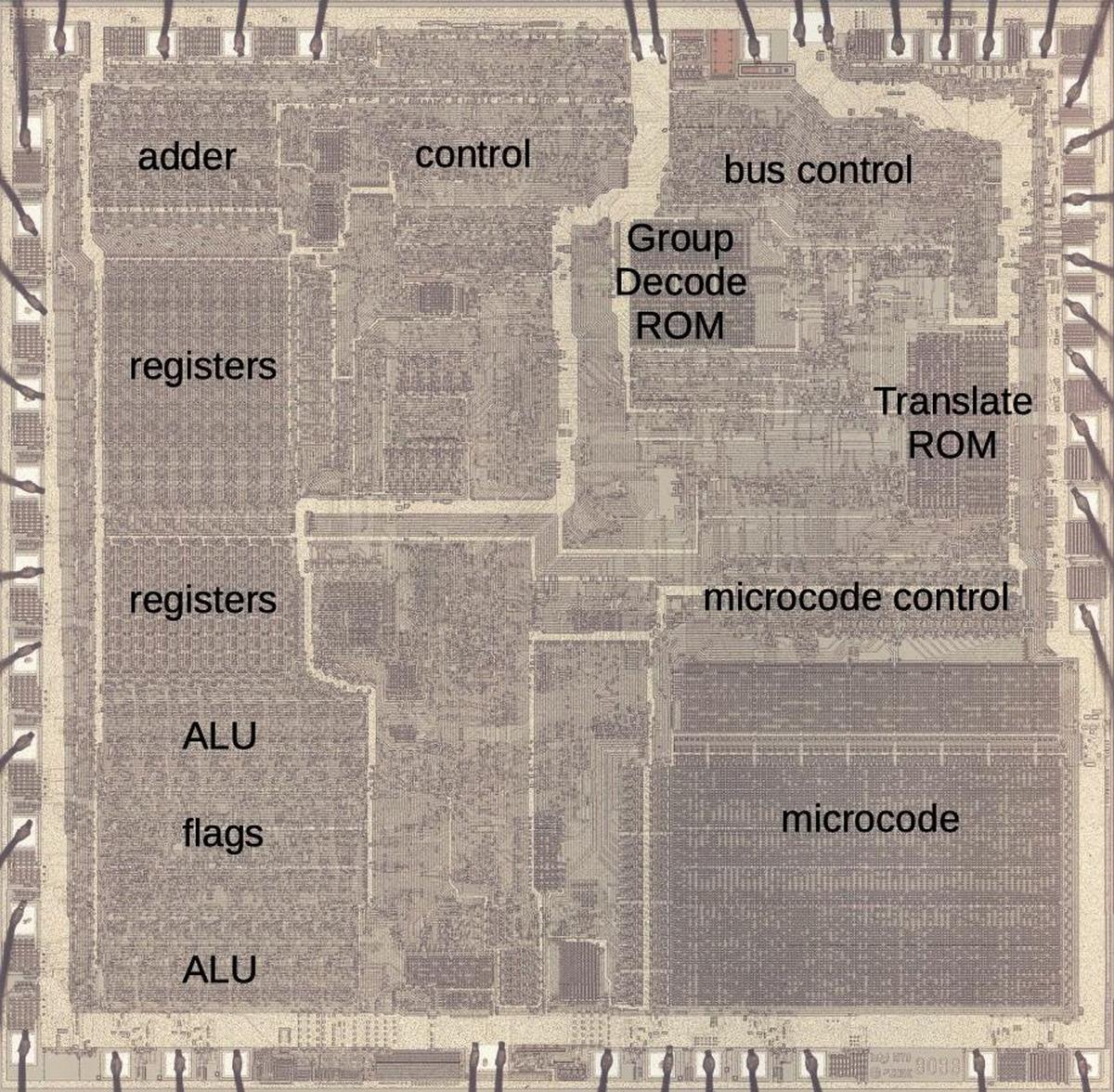

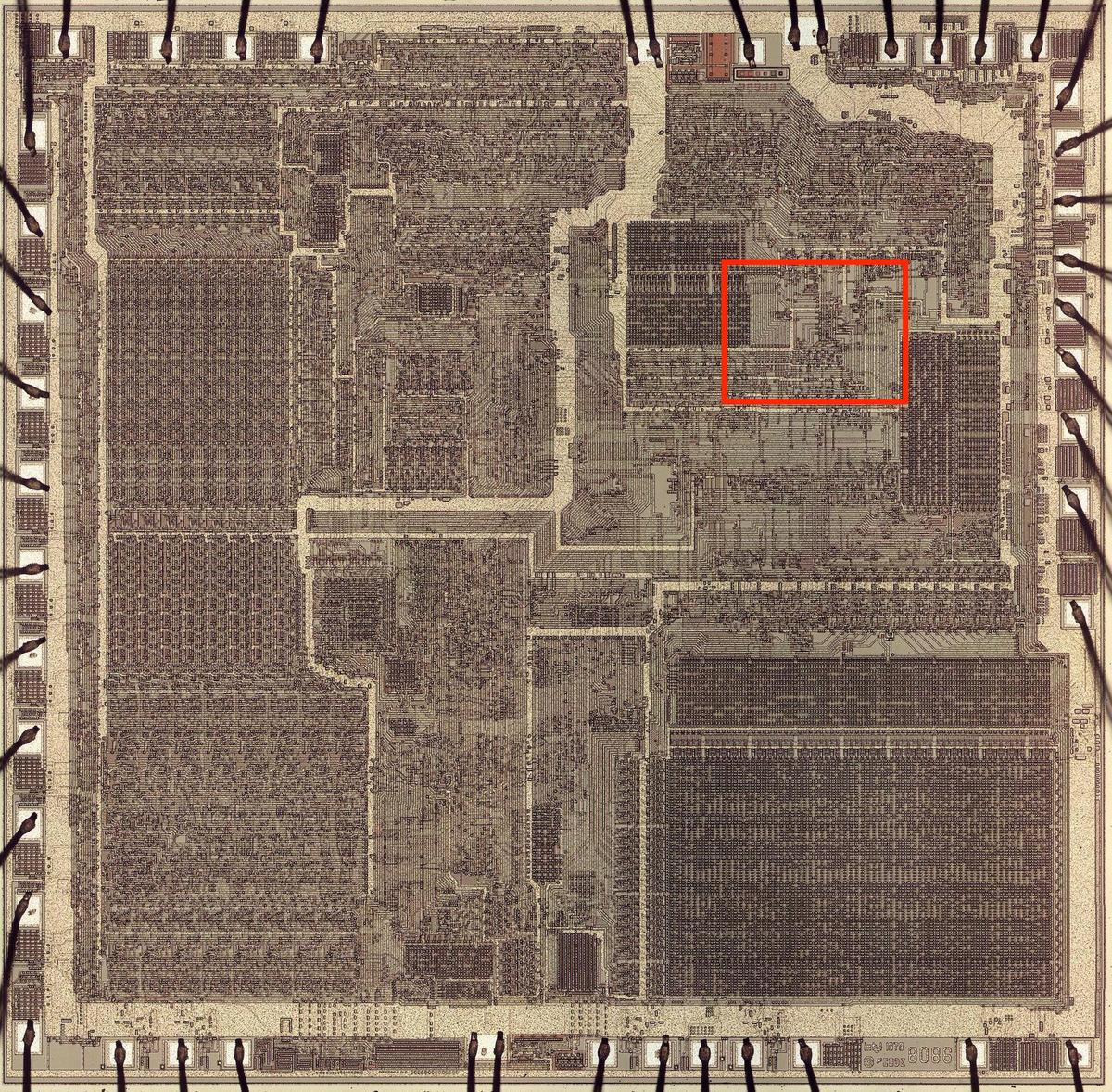

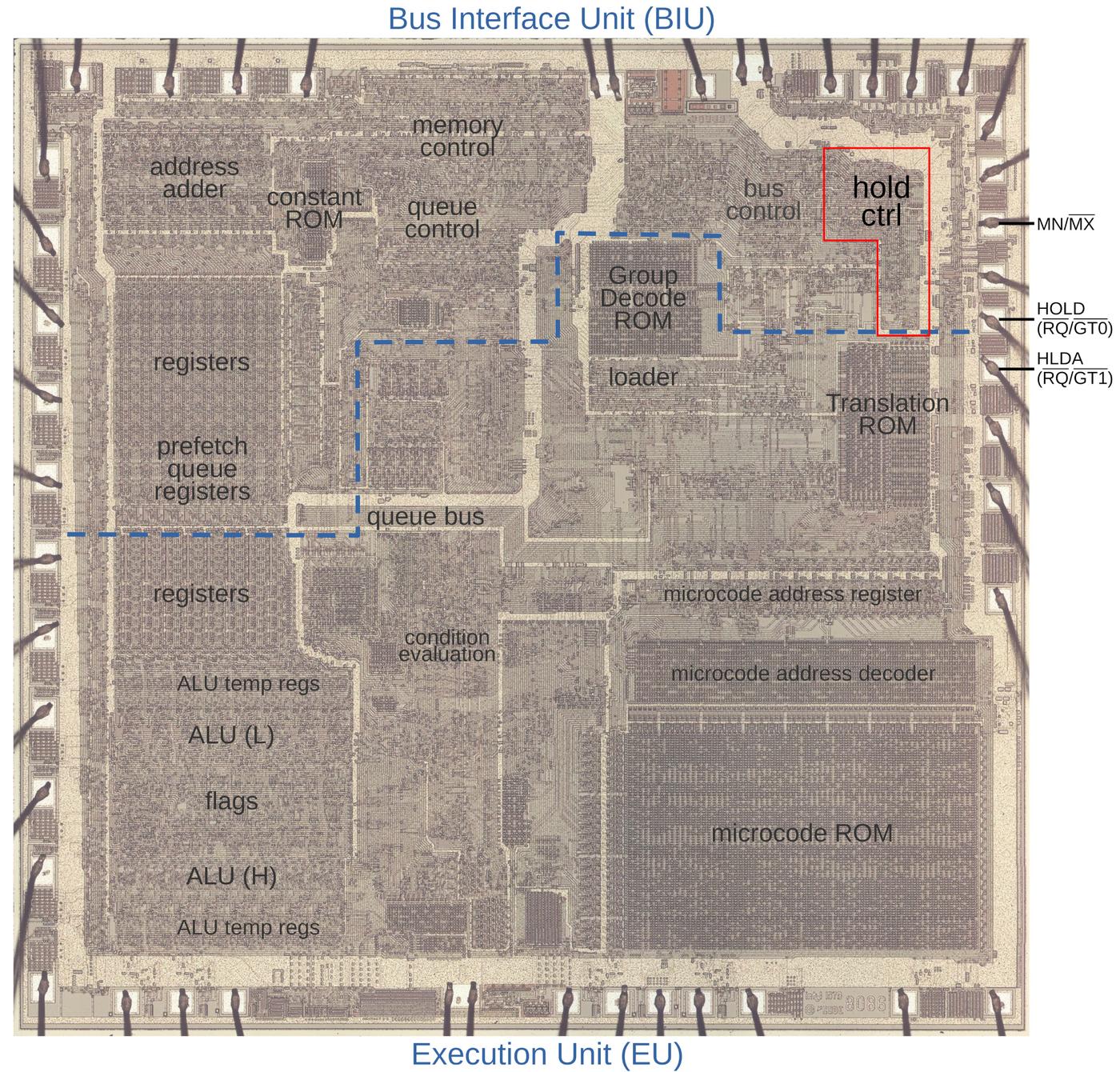

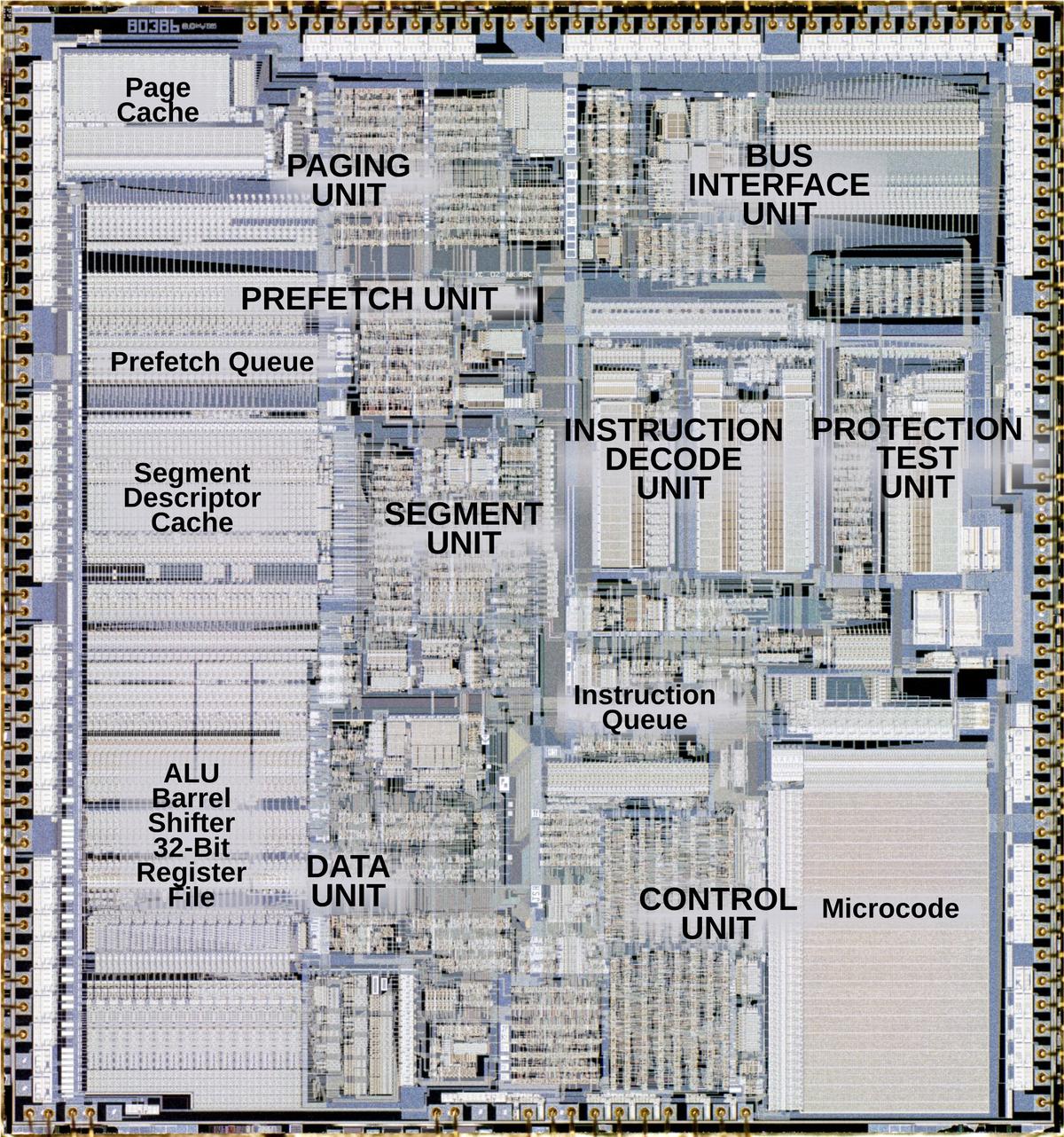

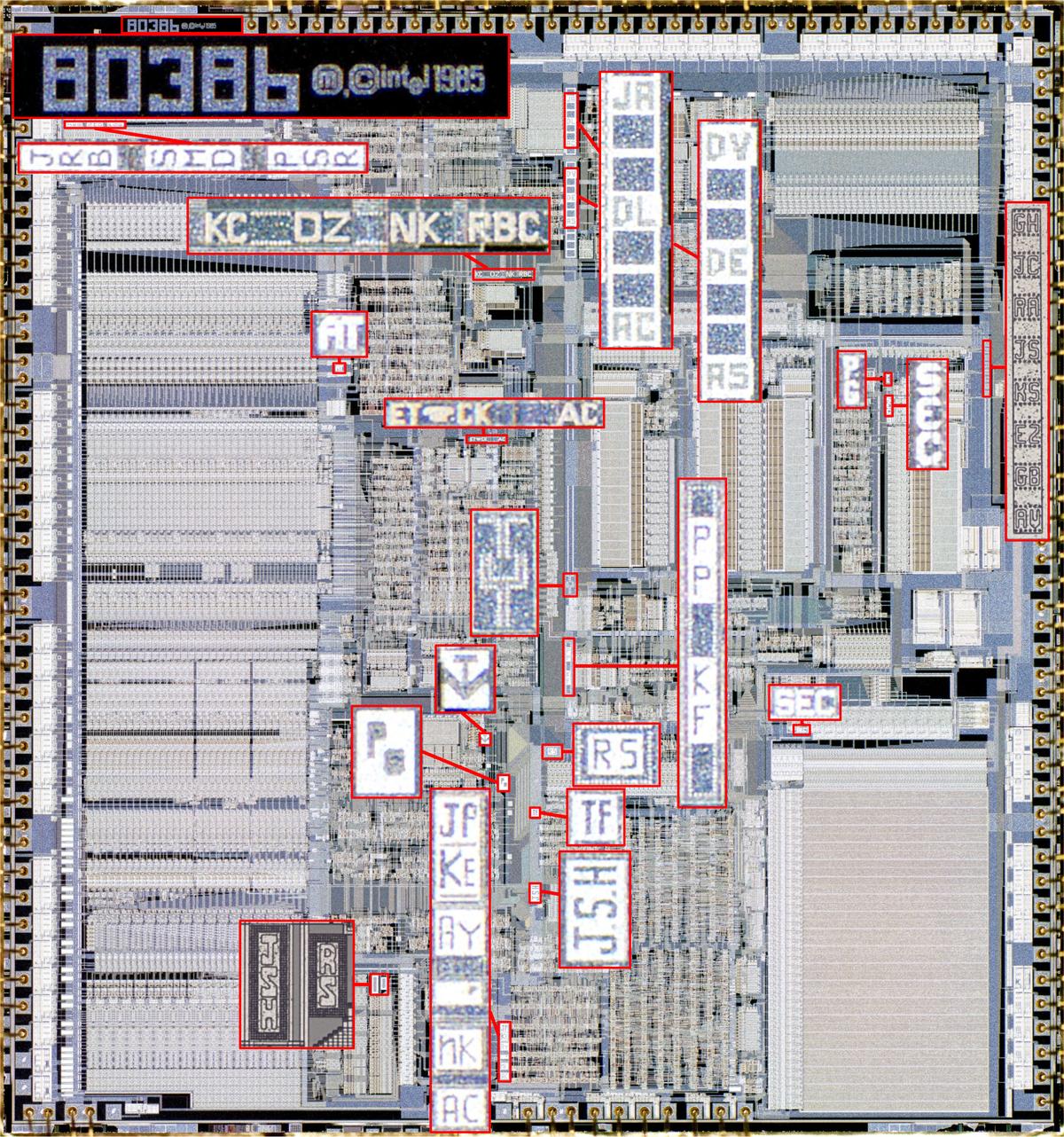

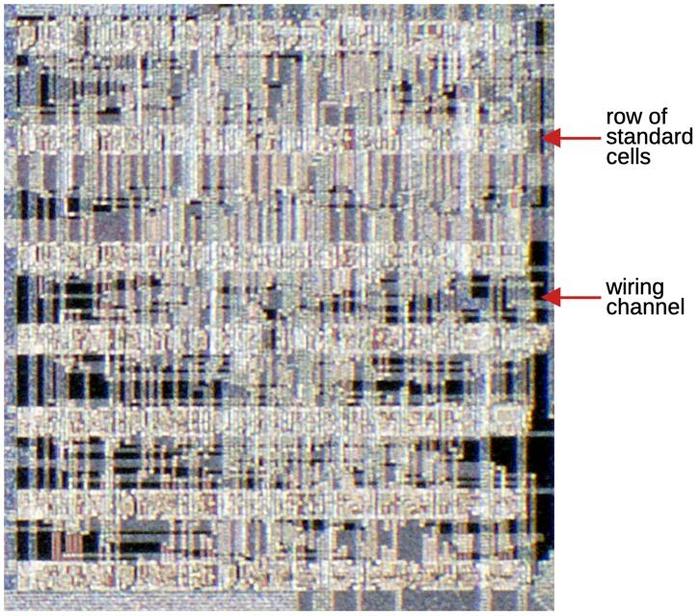

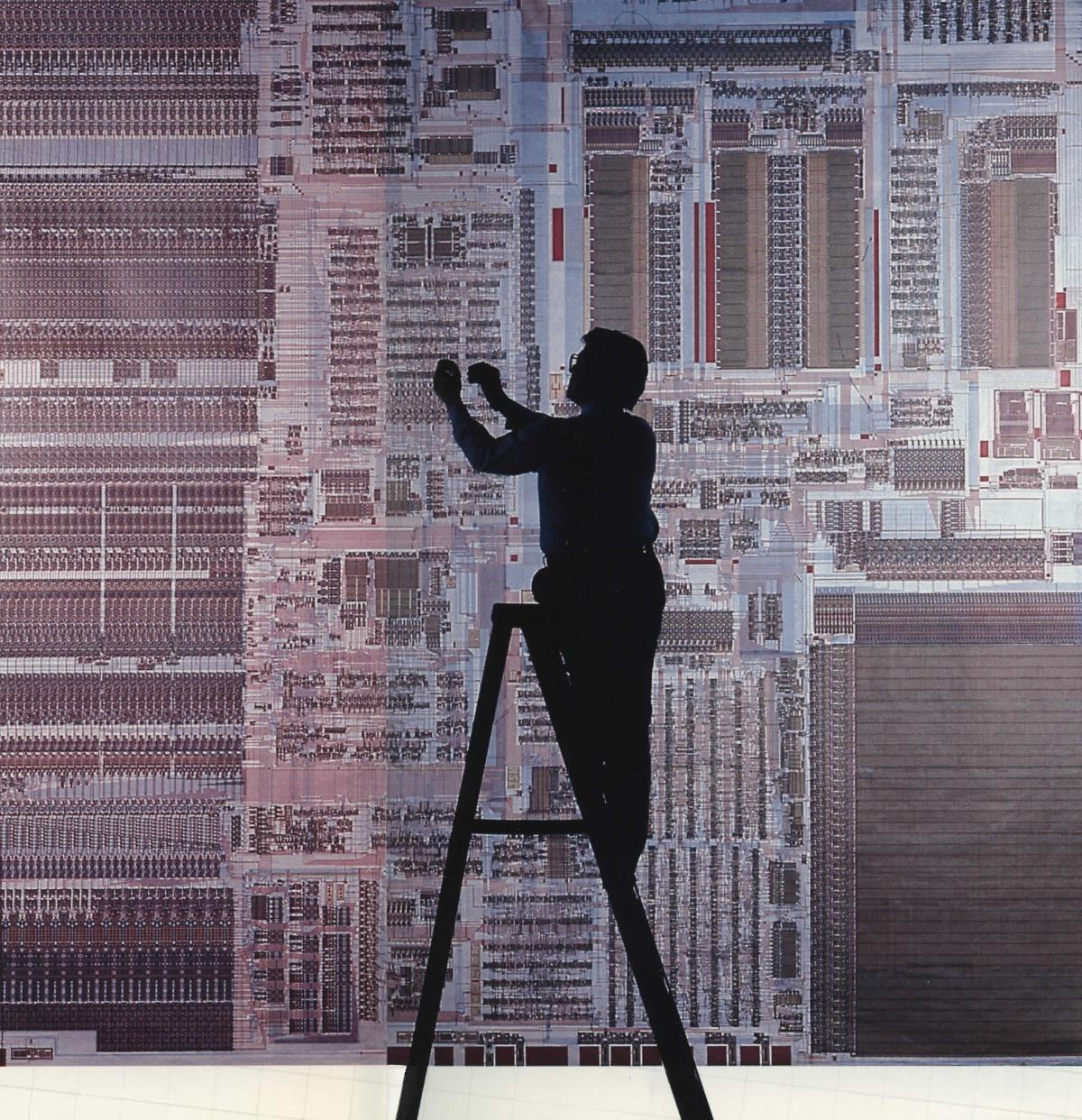

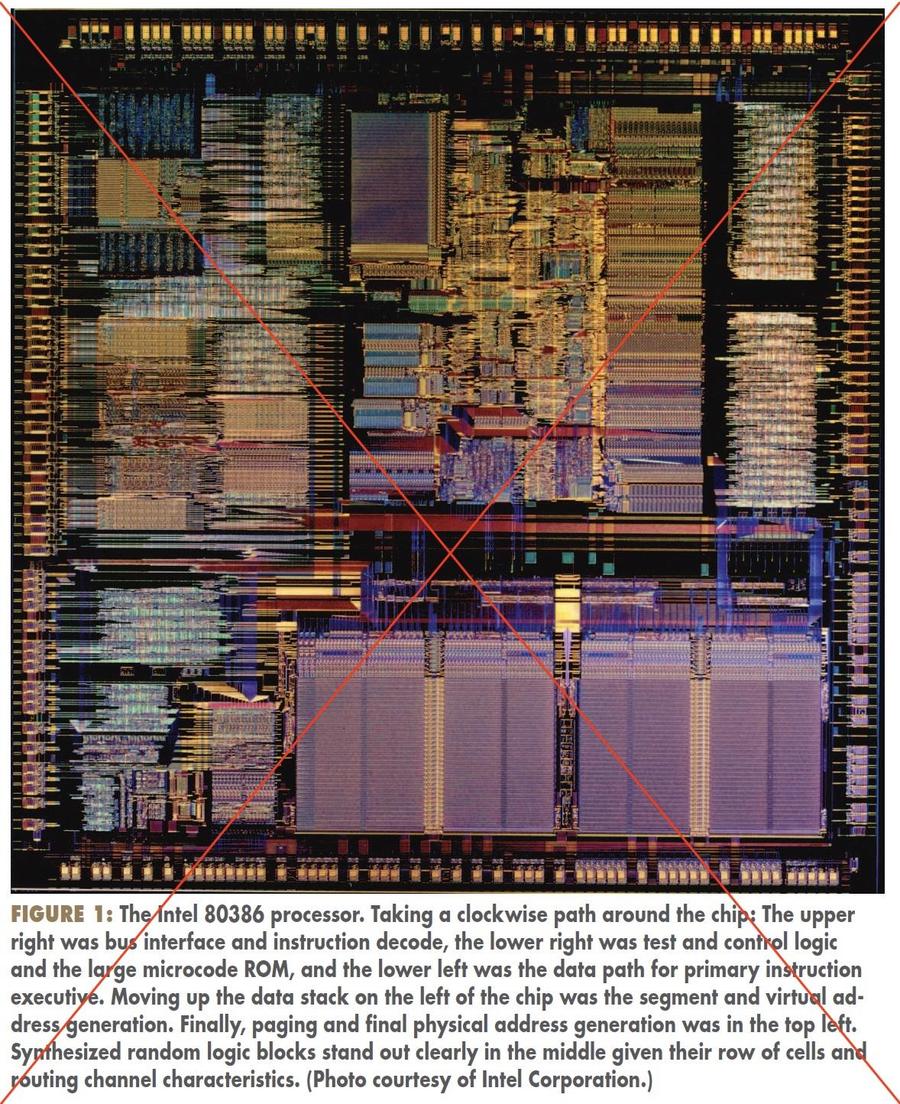

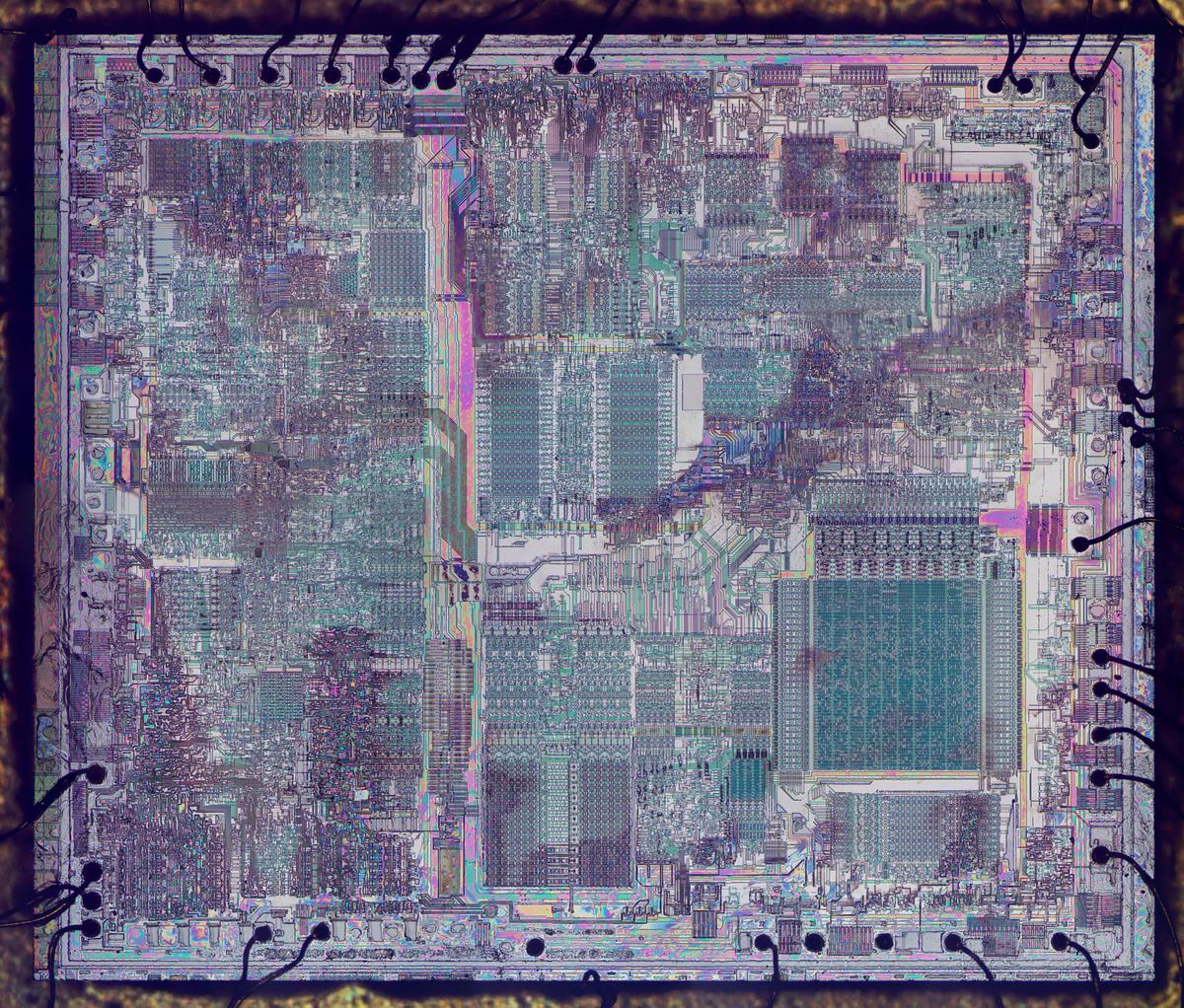

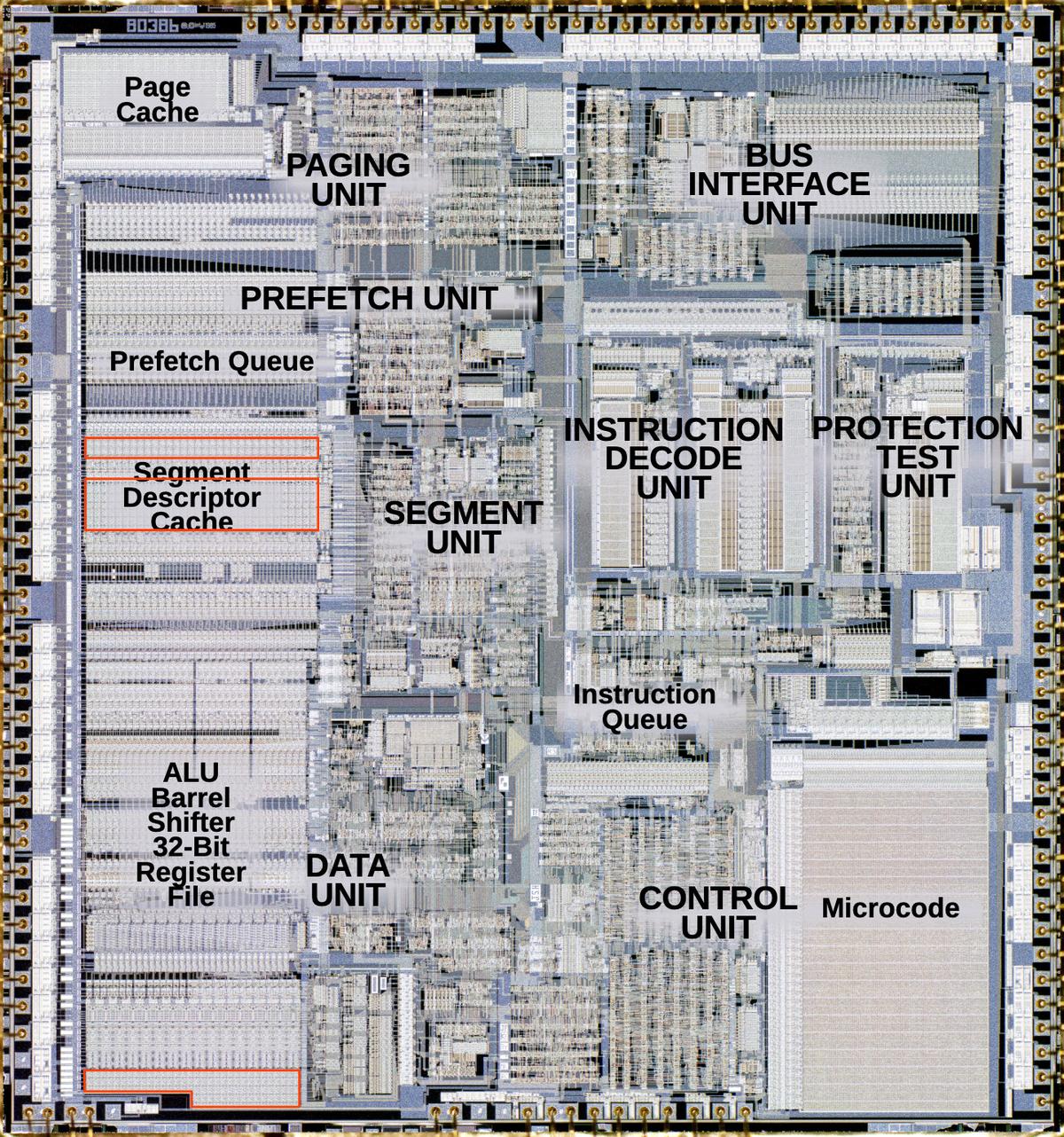

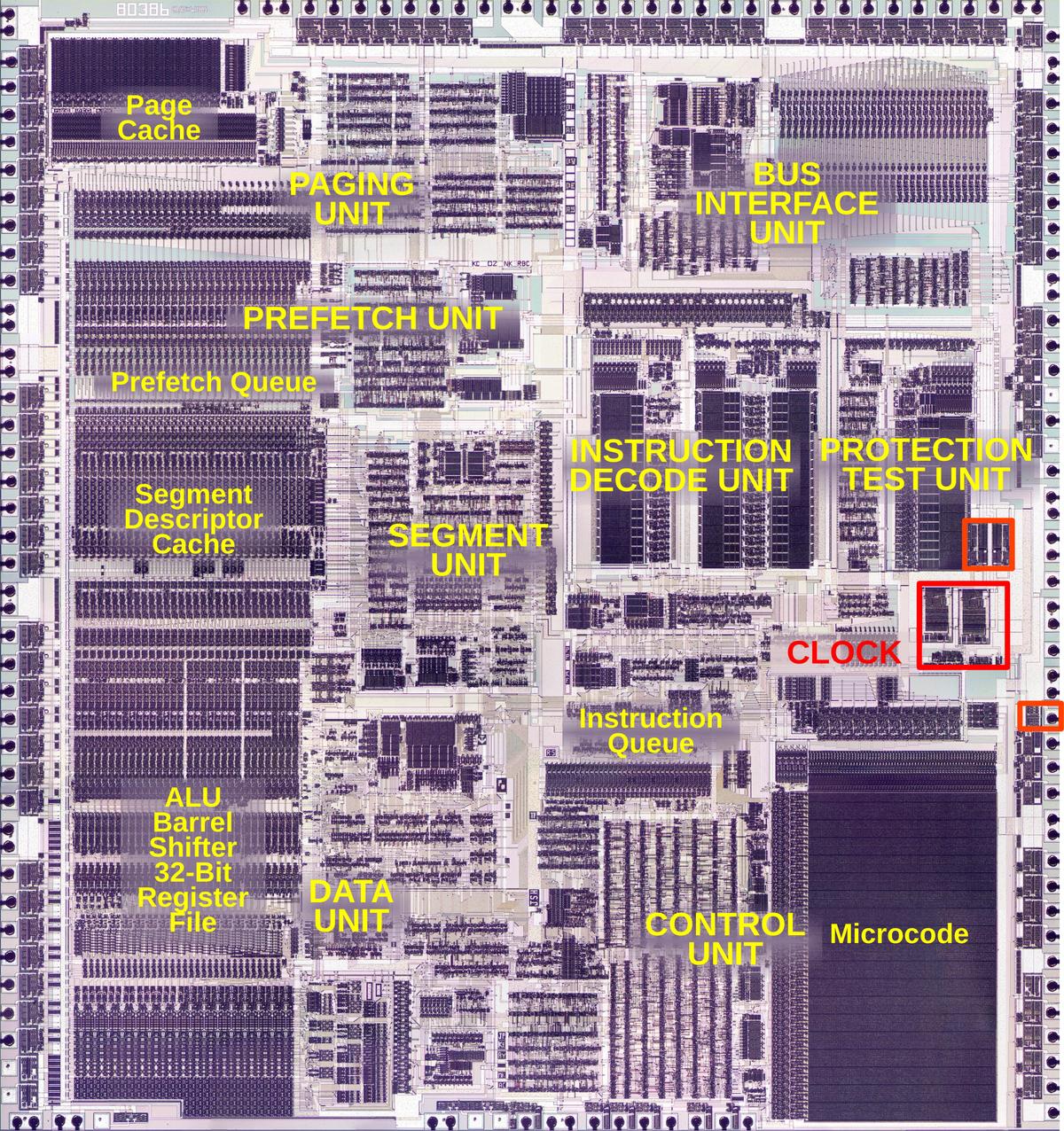

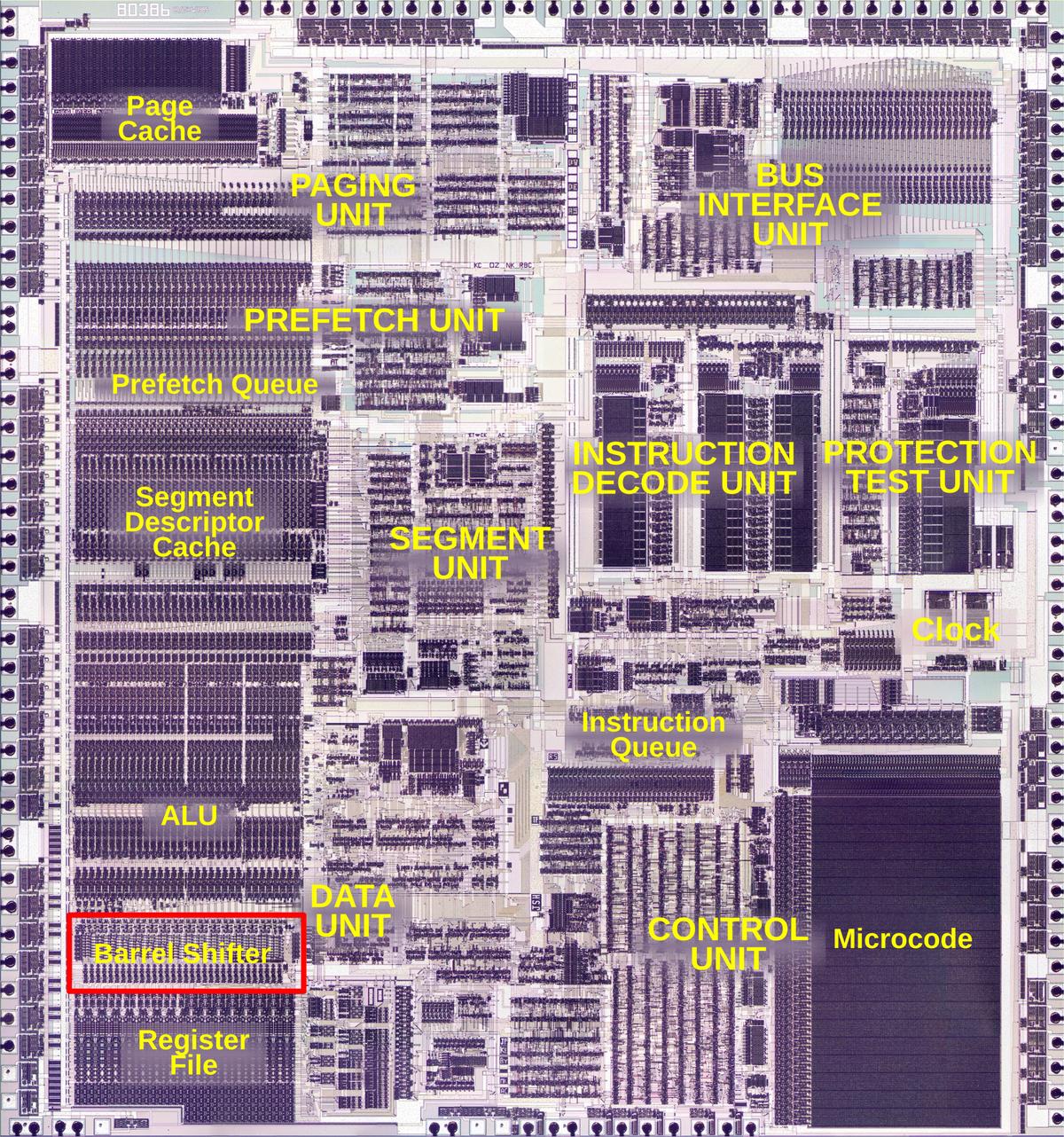

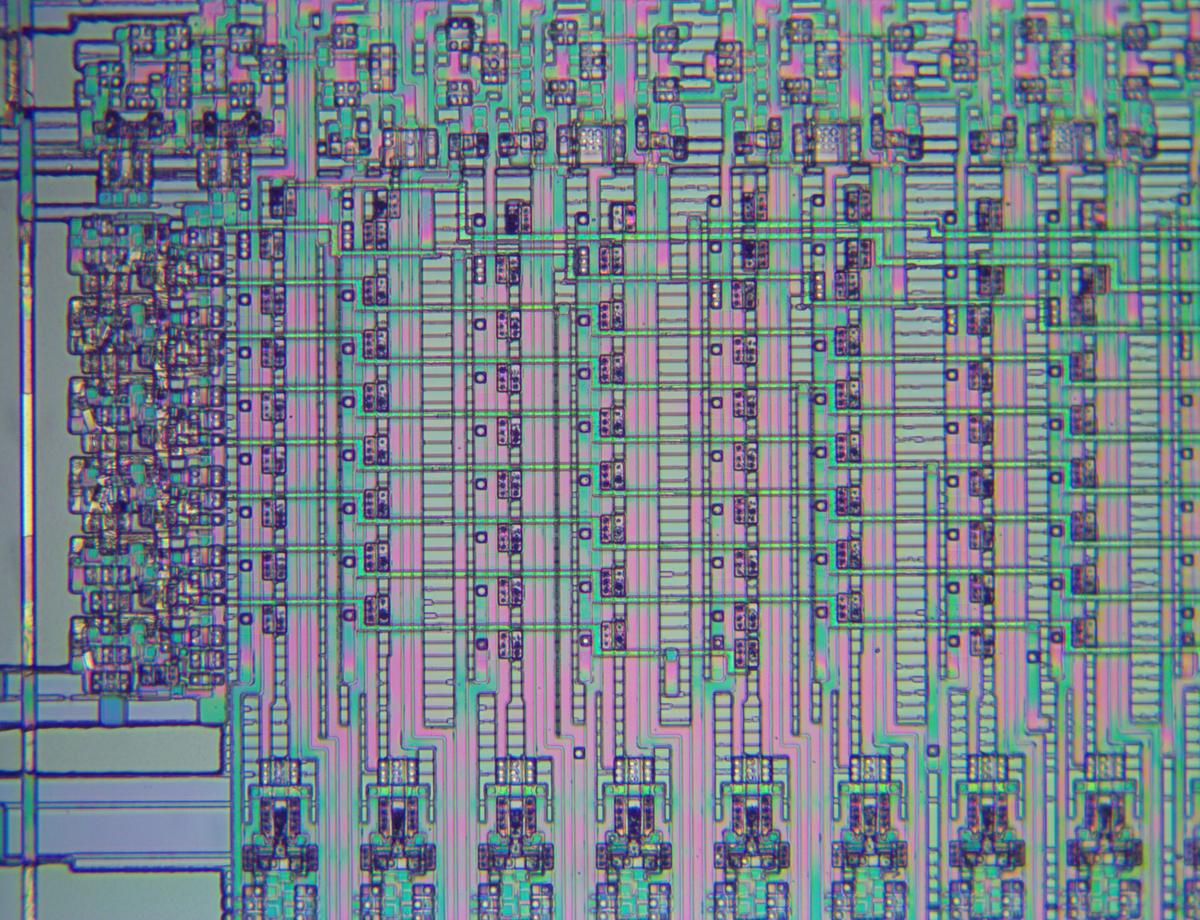

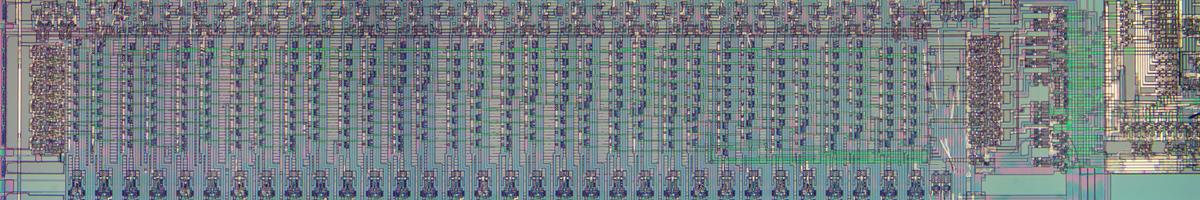

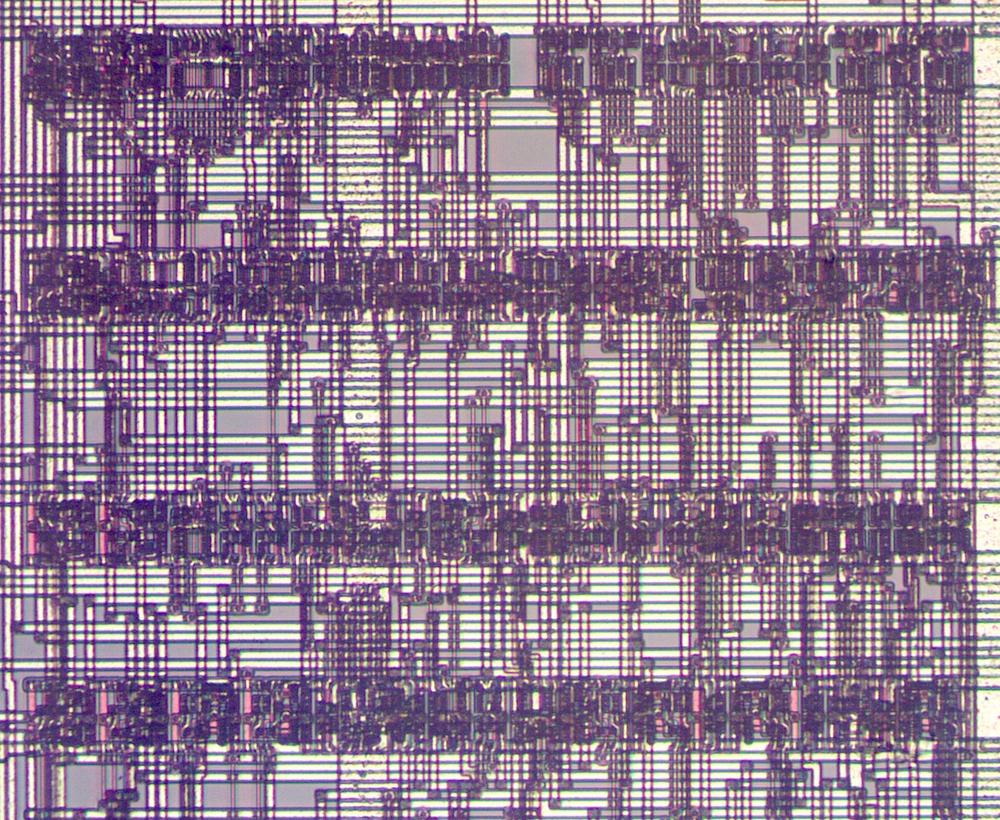

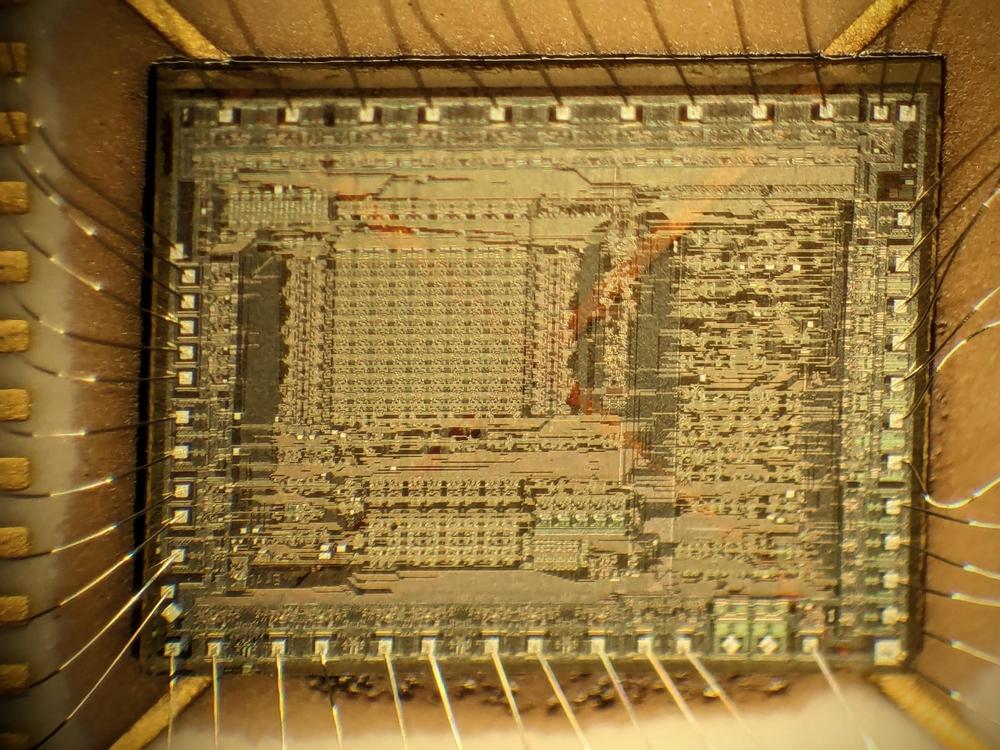

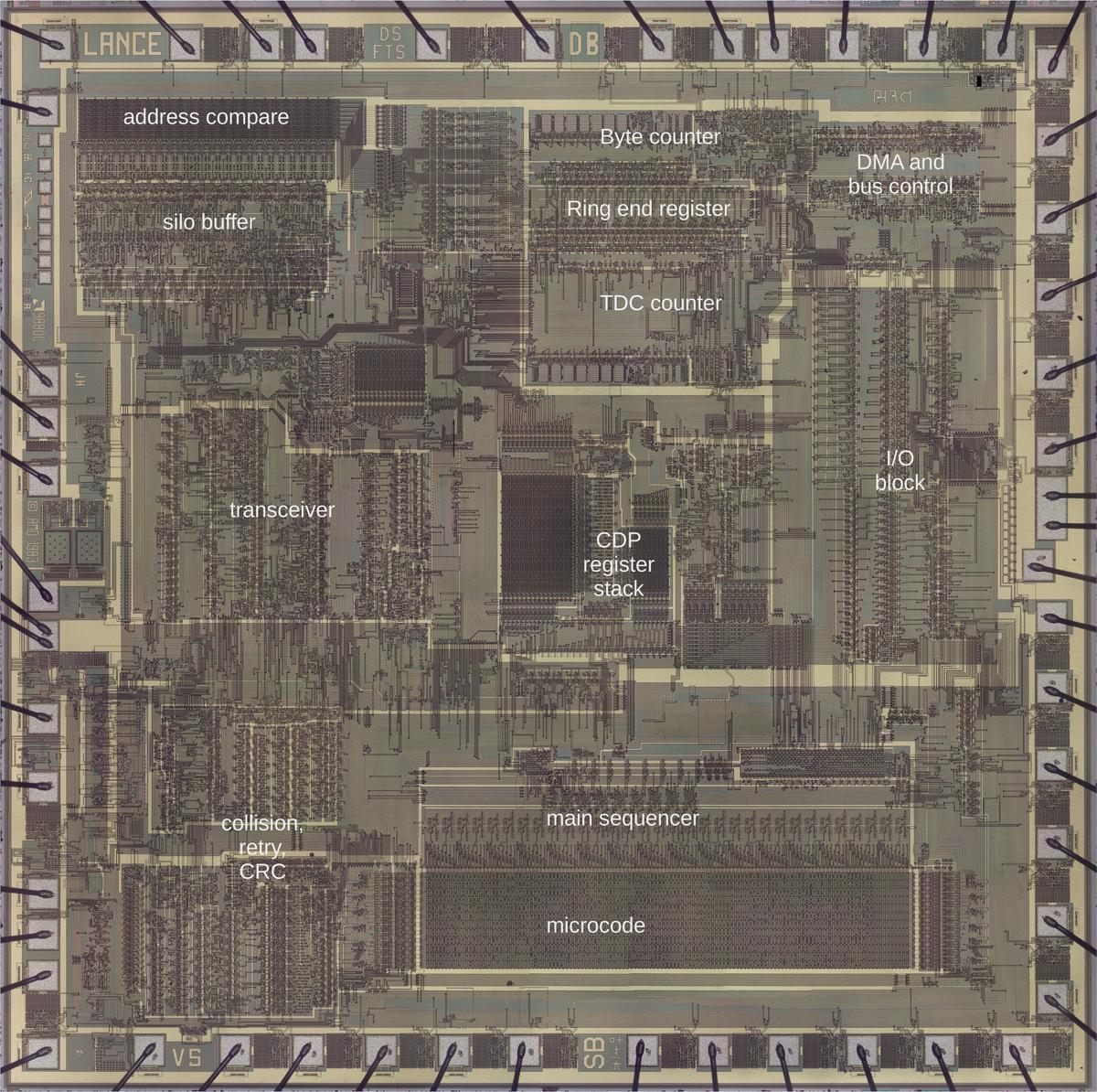

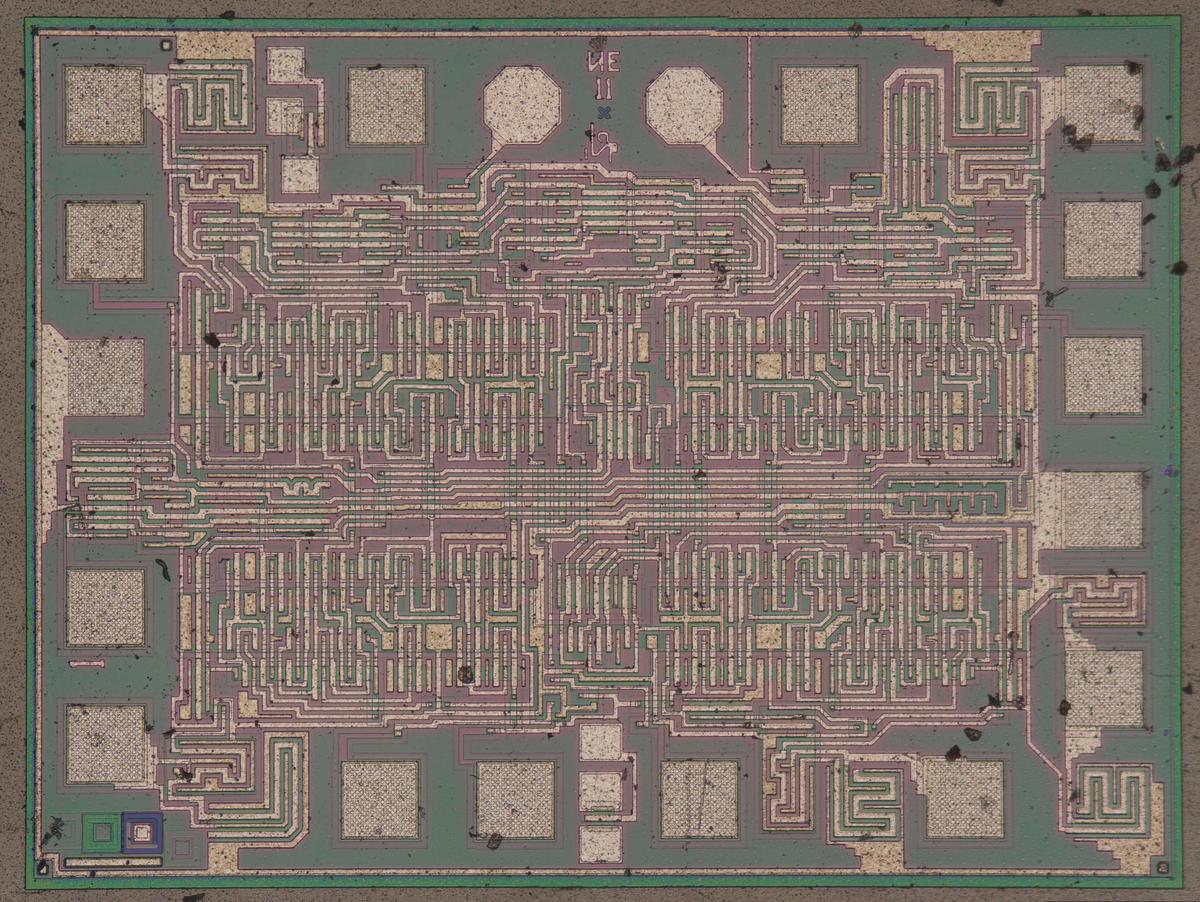

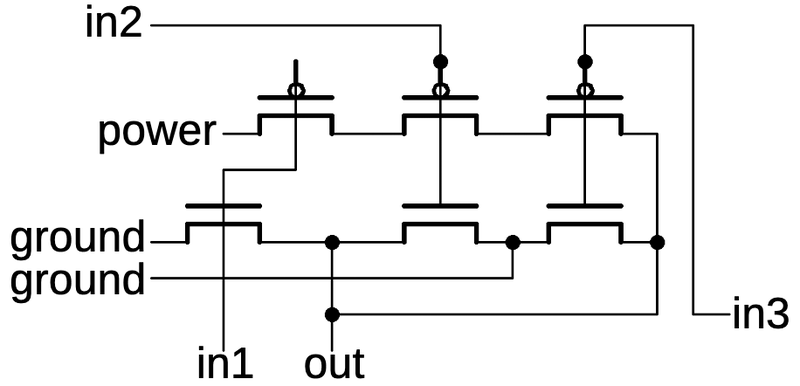

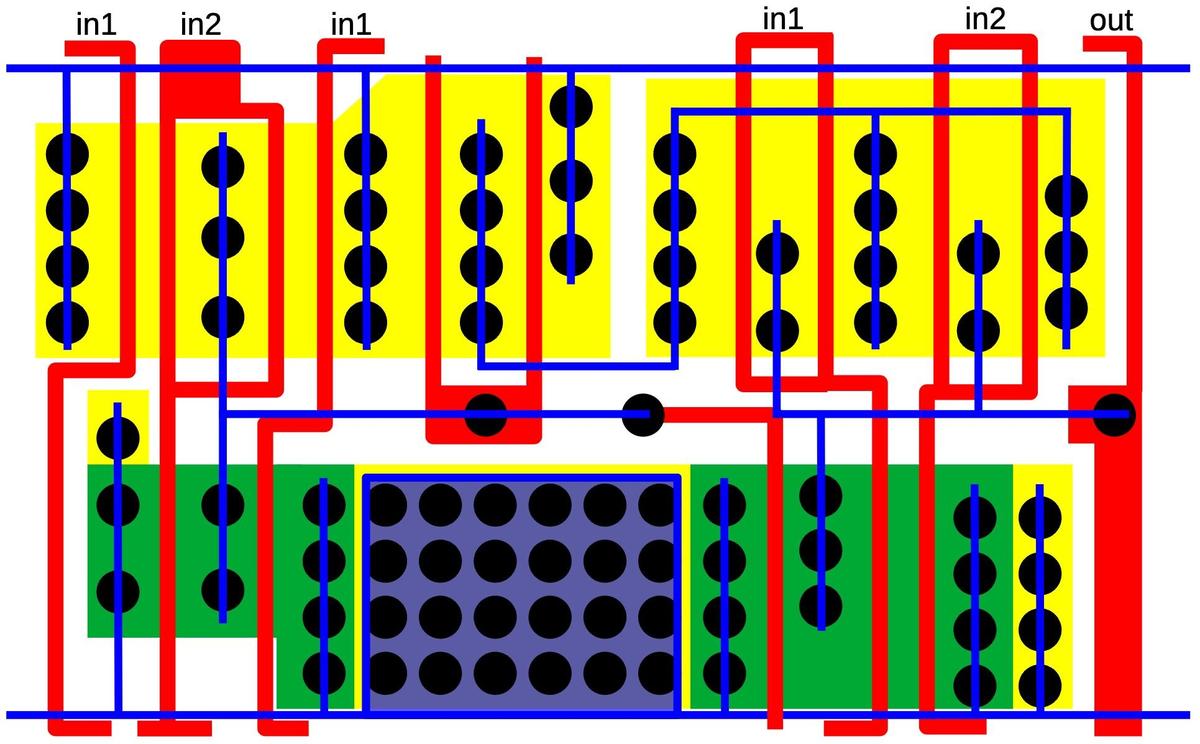

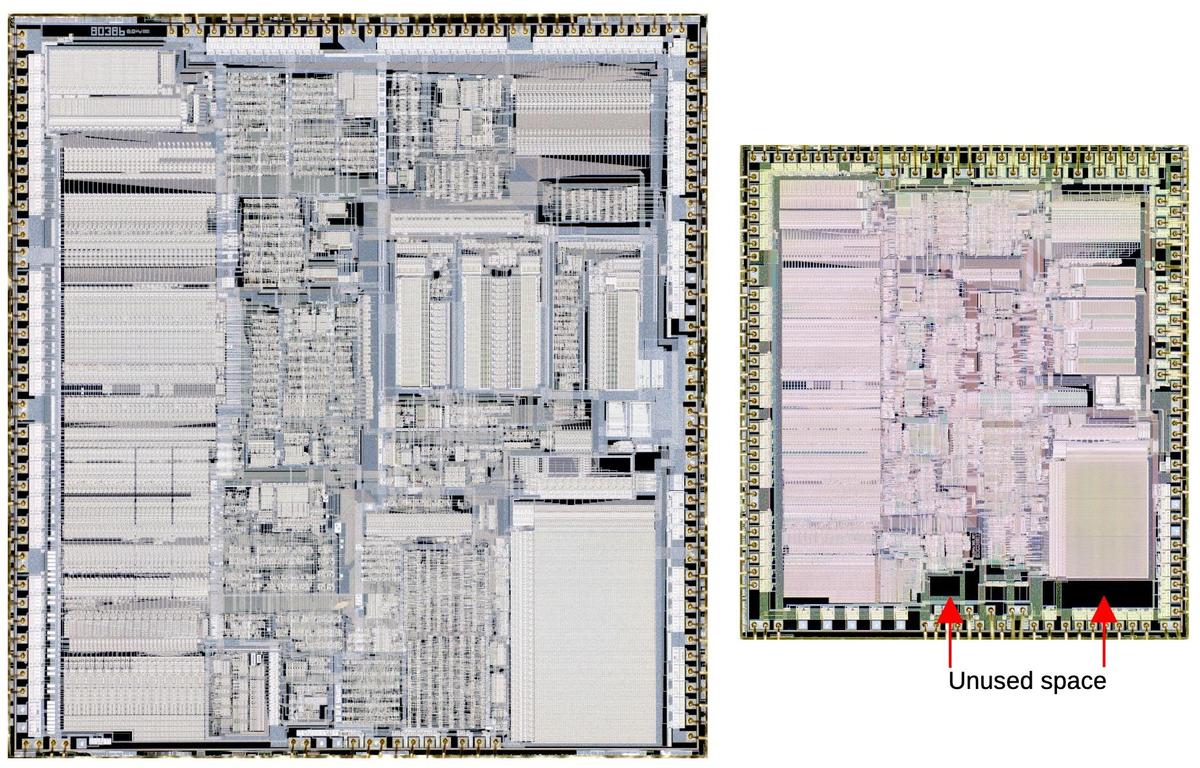

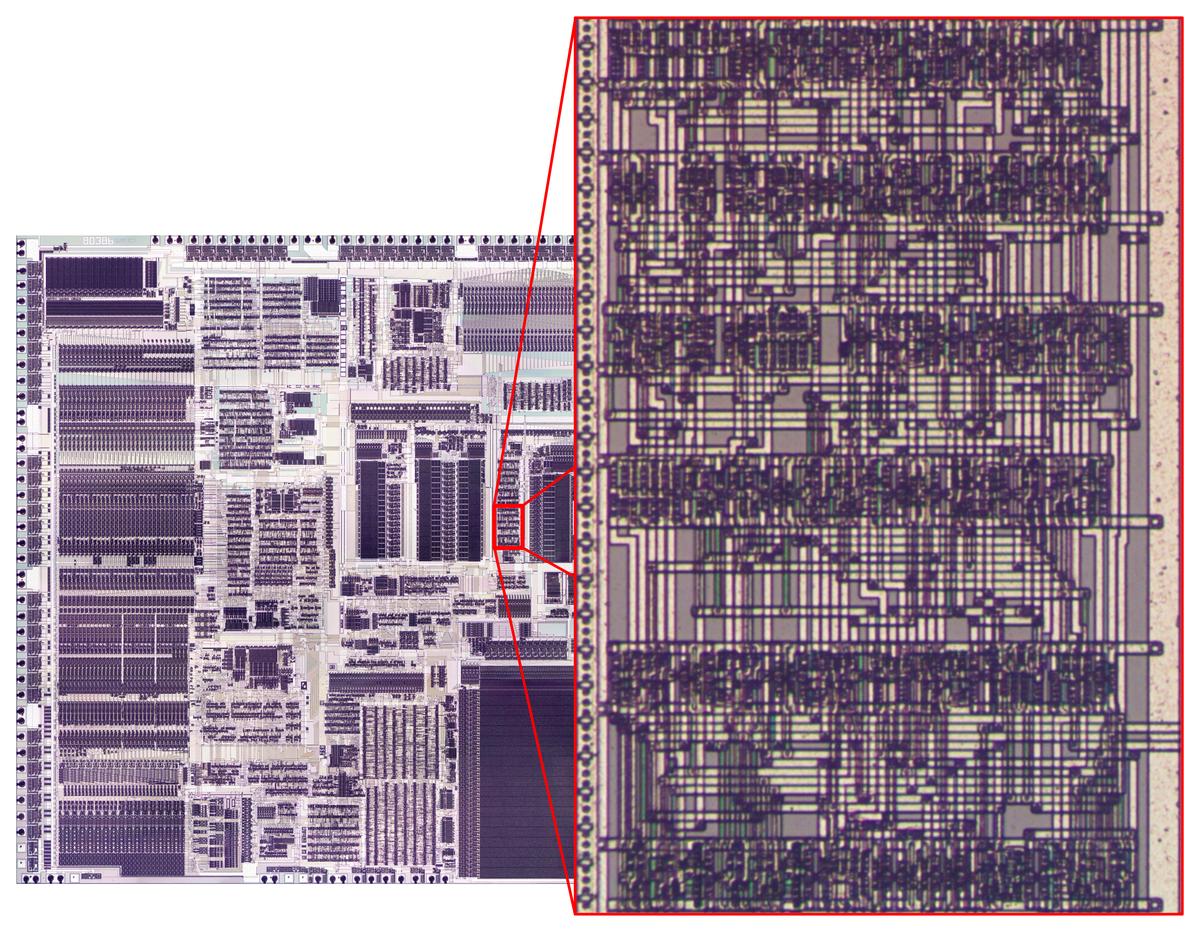

The die photo below shows the 8086 microprocessor under a microscope. The metal layer on top of the chip is visible, with the silicon and polysilicon mostly hidden underneath. Around the edges of the die, bond wires connect pads to the chip's 40 external pins. Architecturally, the chip is partitioned into a Bus Interface Unit (BIU) at the top and an Execution Unit (EU) below, which will be important in the discussion. The Bus Interface Unit handles memory accesses (including instruction prefetching), while the Execution Unit executes instructions. The functional blocks labeled in black are the ones that are part of the discussion below. In particular, the registers and ALU (Arithmetic/Logic Unit) are at the left and the large microcode ROM is in the lower-right.

Microcode for "ADD"

Most people think of machine instructions as the basic steps that a computer performs. However, many processors (including the 8086) have another layer of software underneath: microcode. The motivation is that instructions usually require multiple steps inside the processor. One of the hardest parts of computer design is creating the control logic that directs the processor for each step of an instruction. The straightforward approach is to build a circuit from flip-flops and gates that moves through the various steps and generates the control signals. However, this circuitry is complicated, error-prone, and hard to design.

The alternative is microcode: instead of building the control circuitry from complex logic gates, the control logic is largely replaced with code. To execute a machine instruction, the computer internally executes several simpler micro-instructions, specified by the microcode. In other words, microcode forms another layer between the machine instructions and the hardware. The main advantage of microcode is that it turns the processor's control logic into a programming task instead of a difficult logic design task.

The 8086 uses a hybrid approach: although the 8086 uses microcode, much of the instruction functionality is implemented with gate logic. This approach removed duplication from the microcode and kept the microcode small enough for 1978 technology. In a sense the microcode is parameterized. For instance, the microcode can specify a generic ALU operation, and the gate logic determines from the instruction which ALU operation to perform. Likewise, the microcode can specify a generic register and the gate logic determines which register to use. The simplest instructions (such as prefixes or condition-code operations) don't use microcode at all. Although this made the 8086's gate logic more complicated, the tradeoff was worthwhile.

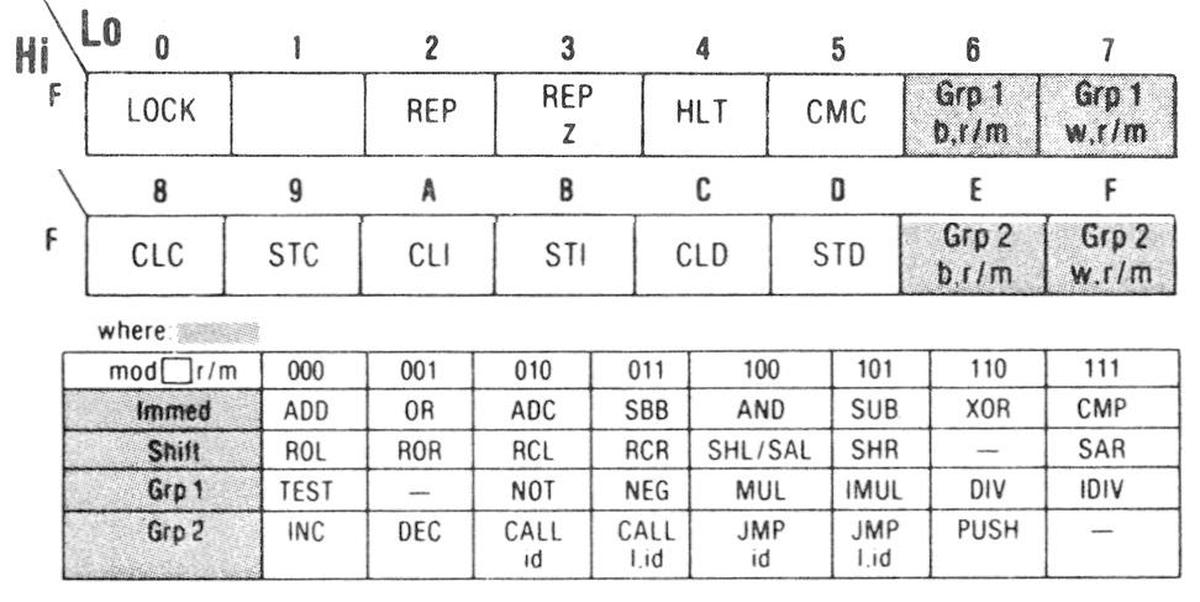

The 8086's microcode was disassembled by Andrew Jenner (link) from my die photos, so we can see exactly what micro-instructions the 8086 is running for each machine instruction. In this post, I will focus on the ADD instruction, since it is fairly straightforward. In particular, the "ADD AX, immediate" instruction contains a 16-bit value that is added to the value in the 16-bit AX register. This instruction consists of three bytes: the opcode 05, followed by the two-byte immediate value. (An "immediate" value is included in the instruction, rather than coming from a register or memory location.)

This ADD instruction is implemented in the 8086's microcode as four micro-instructions, shown below. Each micro-instruction specifies a move operation across the internal ALU bus. It also specifies an action. In brief, the first two micro-instructions get the immediate argument from the prefetch queue. The third micro-instruction gets the argument from the AX register and starts the ALU (Arithmetic/Logic Unit) operation. The final micro-instruction stores the result into the AX register and updates the condition flags.

| µ-address | move | action |
|-----------|------|--------|
| 018 | Q → tmpBL | L8 2 |
| 019 | Q → tmpBH | |
| 01a | M → tmpA | XI tmpA, NXT |
| 01b | Σ → M | RNI FLAGS |

In detail, the first instruction moves a byte from the prefetch queue (Q) to one of the ALU's temporary registers, specifically the low byte of the tmpB register. (The ALU has three temporary registers to hold arguments: tmpA, tmpB, and tmpC. These temporary registers are invisible to the programmer and are unrelated to the AX, BX, CX registers.) Likewise, the second instruction fetches the high byte of the immediate value from the queue and stores it in the high byte of the ALU's tmpB register. The action in the first micro-instruction, L8, will branch to step 2 (01a) if the instruction specifies an 8-bit operation, skipping the load of the high byte. Thus, the same microcode supports the 8-bit and 16-bit ADD instructions.1

The third micro-instruction is more complicated. The move section moves the AX register's contents (indicated by M) to the ALU's tmpA register, getting both arguments ready for the operation. XI tmpA starts an ALU operation, in this case adding tmpA to tmpB.2 Finally, NXT indicates that this is the next-to-last micro-instruction, as will be discussed below.

The last micro-instruction stores the ALU's result (Σ) into the AX register.

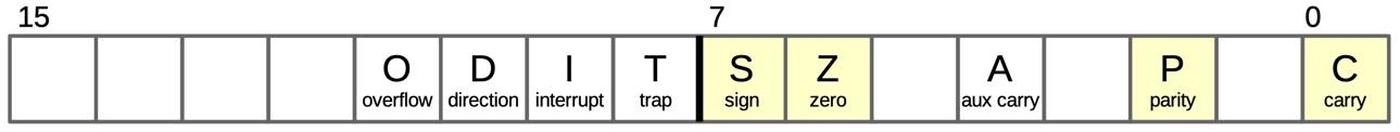

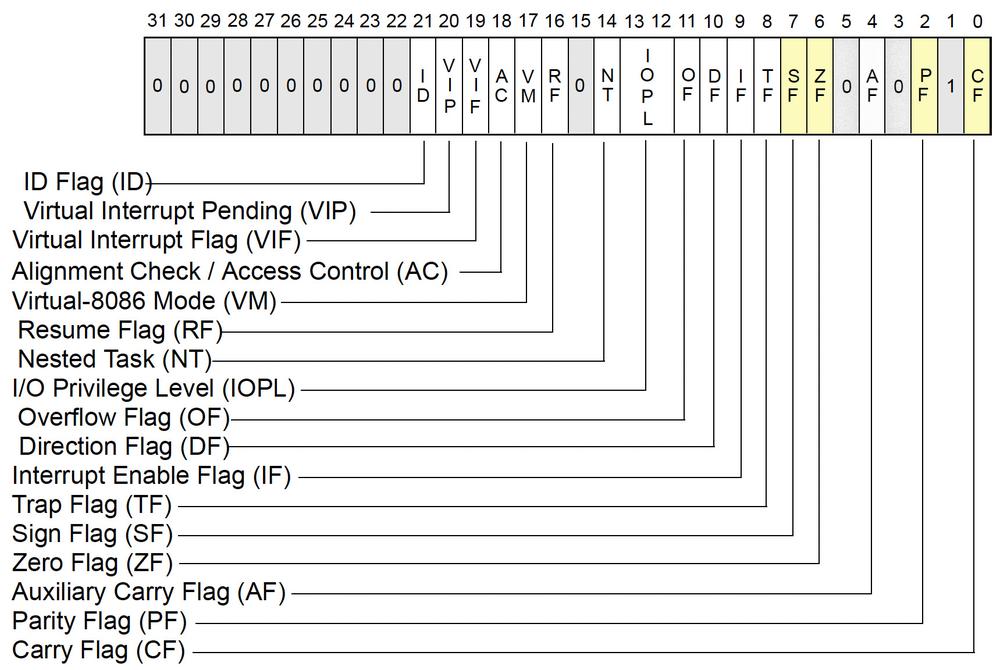

The end of the microcode for this machine instruction is indicated by RNI (Run Next Instruction). Finally, FLAGS causes the 8086's condition flags register to be updated, indicating if the result is zero, negative, and so forth.
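To make the four micro-instructions concrete, here is a small Python walk-through of what they accomplish. It is a simplified functional model, not a simulation of the hardware: the function name, the list used for the prefetch queue, and the flag dictionary are my own inventions, and only three of the flags are modeled.

```python
def run_add_immediate(reg_m, queue, is_8bit=False):
    """Walk micro-addresses 018..01b for ADD with an immediate operand.

    reg_m is the value of the register selected by M (AX here, or an 8-bit
    register for the 8-bit form). queue holds the immediate bytes, low byte first.
    Returns (result, flags).
    """
    mask = 0xFF if is_8bit else 0xFFFF

    # 018: Q -> tmpBL, L8 2  (L8 skips the high byte for an 8-bit operation)
    tmp_b = queue.pop(0)
    if not is_8bit:
        # 019: Q -> tmpBH
        tmp_b |= queue.pop(0) << 8

    # 01a: M -> tmpA, XI tmpA, NXT  (load the other argument, start the ALU)
    tmp_a = reg_m & mask

    # 01b: Sigma -> M, RNI FLAGS  (store the sum, update the flags)
    total = tmp_a + tmp_b
    result = total & mask
    sign_bit = (mask + 1) >> 1
    flags = {
        "zero": result == 0,
        "sign": bool(result & sign_bit),
        "carry": total > mask,
    }
    return result, flags


result, flags = run_add_immediate(0x1234, [0x11, 0x01])  # ADD AX, 0x0111
print(hex(result), flags)
```

The same function handles the 8-bit case by skipping the second queue read, which mirrors how the L8 branch lets one microcode routine serve both instruction sizes.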

You may have noticed that the microcode doesn't explicitly specify the ADD operation or the AX register, using XI and M instead. This illustrates the "parameterized" microcode mentioned earlier. The microcode specifies a generic ALU operation with XI,3 and the hardware fills in the particular ALU operation from bits 5-3 of the machine instruction. Thus, the microcode above can be used for addition, subtraction, exclusive-or, comparisons, and four other arithmetic/logic operations.
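As a sketch of how bits 5-3 select the operation, the Python snippet below extracts those bits from an opcode byte. The order of the eight operations is the standard 8086 encoding for this group of opcodes, which is not spelled out in this post, and the function name is my own.

```python
# Standard 8086 encoding of bits 5-3 for the basic arithmetic/logic opcodes.
ALU_OPS = ["ADD", "OR", "ADC", "SBB", "AND", "SUB", "XOR", "CMP"]


def alu_op_from_opcode(opcode):
    """Return the ALU operation selected by bits 5-3 of the opcode byte."""
    return ALU_OPS[(opcode >> 3) & 0b111]


for opcode in (0x05, 0x2D, 0x35, 0x3D):
    print(f"{opcode:02x}: {alu_op_from_opcode(opcode)}")
```

Opcode 05 (ADD AX, immediate) has zeros in bits 5-3, so it selects ADD, while the other three opcodes in the loop differ only in those bits and would reuse the same microcode with a different ALU operation.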

The other parameterized aspect is the generic M register specification.

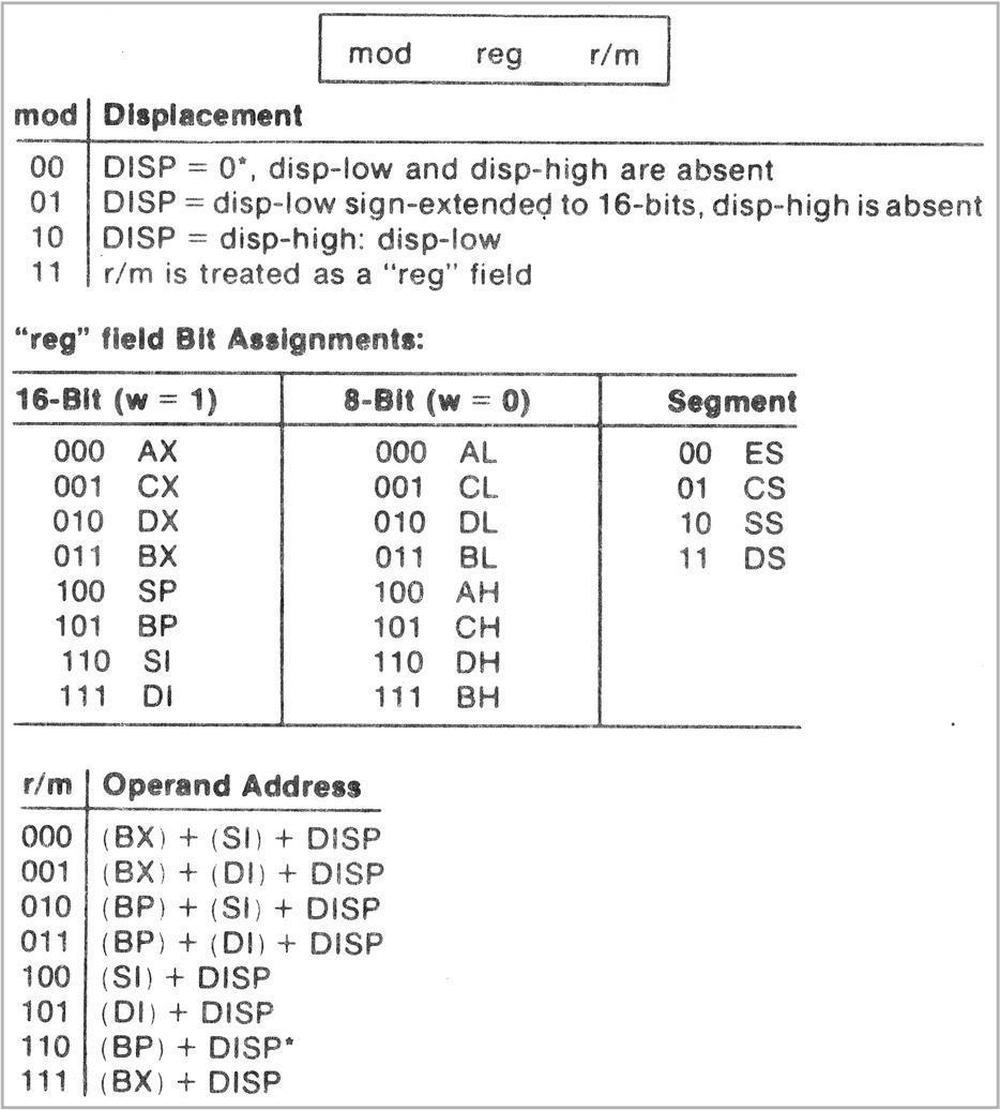

The 8086's instruction set has a flexible way of specifying registers for the source and destination of an operation: registers are often specified by a "Mod R/M" byte, but can also be specified by bits in the first opcode. Moreover, many instructions have a bit to switch the source and destination, and another bit to specify an 8-bit or 16-bit register. The microcode can ignore all this; a micro-instruction uses M and N for the source and destination registers, and the hardware handles the details.4

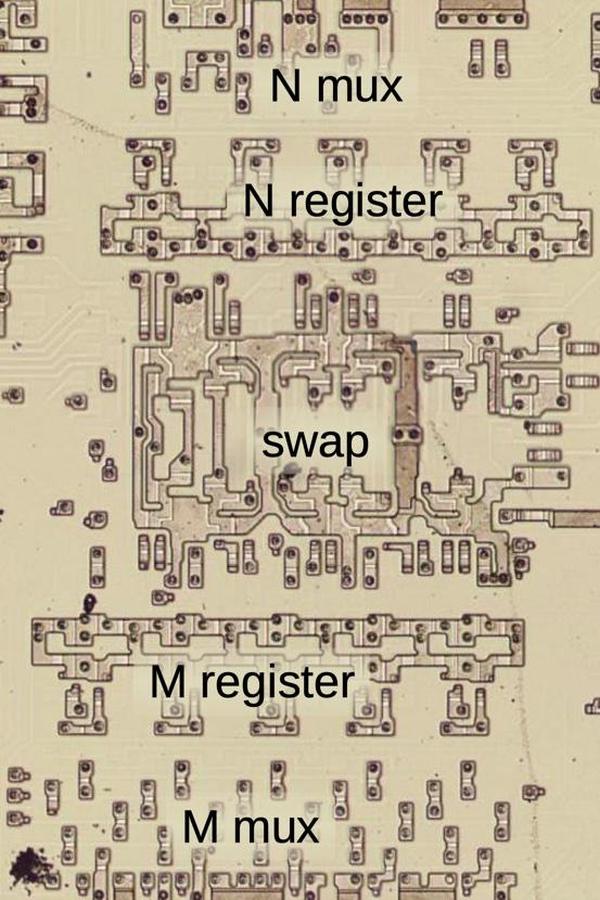

The M and N values are implemented by 5-bit registers that are invisible to the programmer and specify the "real" register to use. The diagram below shows how they appear on the die.

Pipelining

The 8086 documentation says this ADD instruction takes four clock cycles, and as we have seen, it is implemented with four micro-instructions. One micro-instruction is executed per clock cycle, so the timing seems straightforward. The problem, however, is that a micro-instruction can't be completed in one clock cycle. It takes a clock cycle to read a micro-instruction from the microcode ROM. Sending signals across an internal bus typically takes a clock cycle and other actions take more time. So a typical micro-instruction ends up taking 2½ clock cycles from start to end. One solution would be to slow down the clock, so the micro-instruction can complete in one cycle, but that would drastically reduce performance. A better solution is pipelining the execution so a micro-instruction can complete every cycle.5

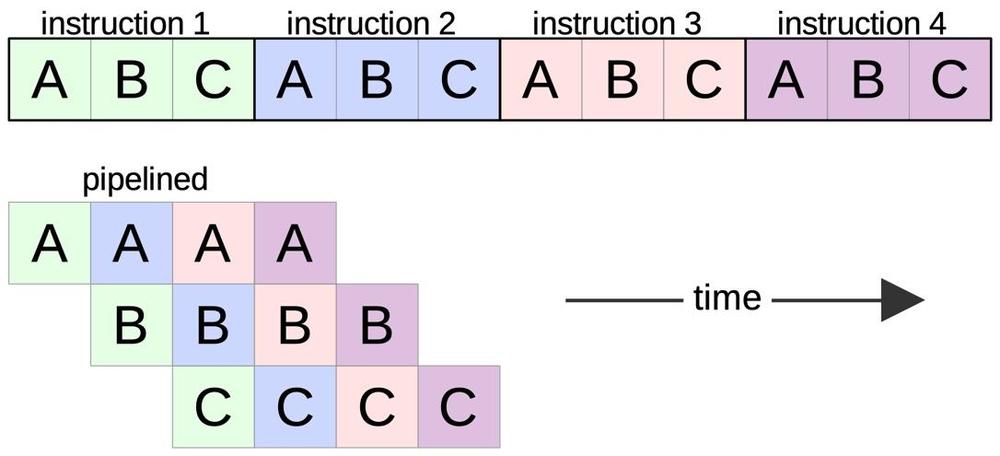

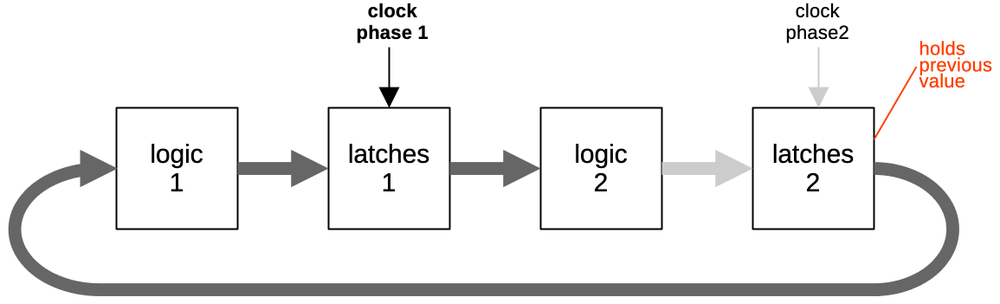

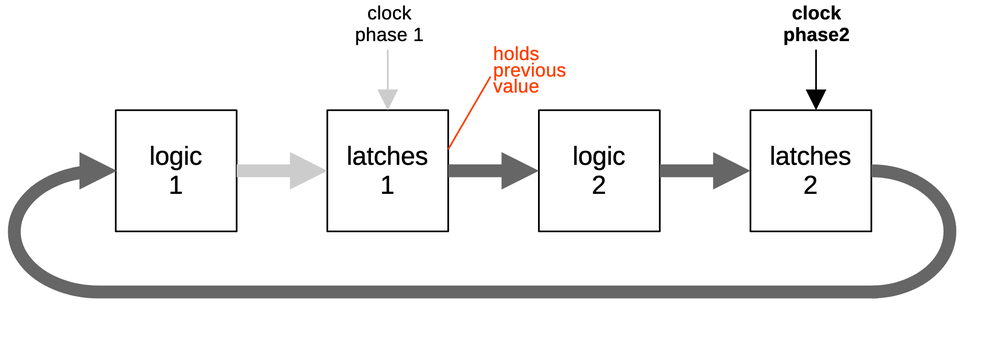

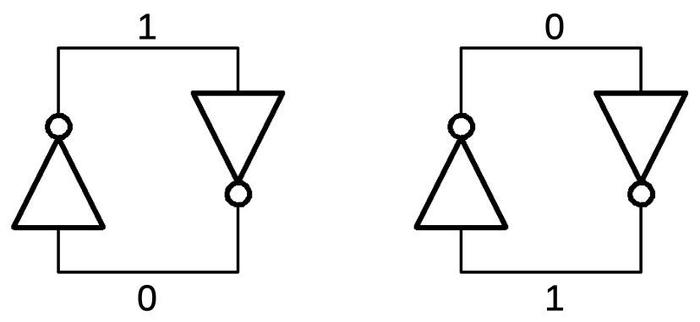

The idea of pipelining is to break instruction processing into "stages", so different stages can work on different instructions at the same time. It's sort of like an assembly line, where a particular car might take an hour to manufacture, but a new car comes off the assembly line every minute. The diagram below shows a simple example. Suppose executing an instruction requires three steps: A, B, and C. Executing four instructions one after another, as shown at the top, would take 12 steps in total.

However, suppose the steps can execute independently, so step B for one instruction can execute at the same time as step A for another instruction. Now, as soon as instruction 1 finishes step A and moves on to step B, instruction 2 can start step A. Next, instruction 3 starts step A as instructions 2 and 1 move to steps B and C respectively. The first instruction still takes 3 time units to complete, but after that, an instruction completes every time unit, providing a theoretical 3× speedup.6 In a bit, I will show how the 8086 uses the idea of pipelining.
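The step counts in this example can be checked with a few lines of Python. This is a generic model of an ideal pipeline with no stalls, using function names of my own choosing; it is not specific to the 8086.

```python
def sequential_steps(num_instructions, num_stages):
    """Total time when each instruction finishes before the next one starts."""
    return num_instructions * num_stages


def pipelined_steps(num_instructions, num_stages):
    """Total time when a new instruction enters the pipeline every time unit."""
    return num_stages + (num_instructions - 1)


def schedule(num_instructions, stage_names):
    """One row per instruction; column t shows the stage active at time unit t."""
    total = pipelined_steps(num_instructions, len(stage_names))
    rows = []
    for i in range(num_instructions):
        row = ["."] * total
        for s, name in enumerate(stage_names):
            row[i + s] = name   # instruction i runs stage s at time i + s
        rows.append("".join(row))
    return rows


for row in schedule(4, "ABC"):
    print(row)
print(sequential_steps(4, 3), "steps sequentially,", pipelined_steps(4, 3), "pipelined")
```

For four instructions the gain is only 12 steps versus 6, but as the number of instructions grows, the ratio approaches the number of stages, which is where the theoretical 3× figure comes from.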

The prefetch queue

The 8086 uses instruction prefetching to improve performance. Prefetching is not the focus of this article, but a brief explanation is necessary. (I wrote about the prefetch circuitry in detail earlier.) Memory accesses on the 8086 are relatively slow (at least four clock cycles), so we don't want to wait every time the processor needs a new instruction. The idea behind prefetching is that the processor fetches future instructions from memory while the CPU is busy with the current instruction. When the CPU is ready to execute the next instruction, hopefully the instruction is already in the prefetch queue and the CPU doesn't need to wait for memory. The 8086 appears to be the first microprocessor to implement prefetching.

In more detail, the 8086 fetches instructions into its prefetch queue asynchronously from instruction execution: The "Bus Interface Unit" performs prefetches, while the "Execution Unit" executes instructions. Prefetched instructions are stored in the 6-byte prefetch queue. The Q bus (short for "Queue bus") provides bytes, one at a time, from the prefetch queue to the Execution Unit.7 If the prefetch queue doesn't have a byte available when the Execution Unit needs one, the Execution Unit waits until the prefetch circuitry can complete a memory access.
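The queue behavior described here can be modeled as a small first-in, first-out buffer. The Python sketch below is only an illustration: the class and method names are mine, and it adds bytes one at a time for simplicity rather than modeling actual bus cycles.

```python
from collections import deque


class PrefetchQueue:
    """Toy model of the 6-byte prefetch queue between the BIU and the EU."""

    CAPACITY = 6

    def __init__(self):
        self._bytes = deque()

    def prefetch(self, byte):
        """Bus Interface Unit side: add a byte if there is room."""
        if len(self._bytes) >= self.CAPACITY:
            return False          # queue full, so prefetching pauses
        self._bytes.append(byte & 0xFF)
        return True

    def next_byte(self):
        """Execution Unit side: next byte over the Q bus, or None if it must wait."""
        return self._bytes.popleft() if self._bytes else None


q = PrefetchQueue()
for b in (0x05, 0x34, 0x12):      # ADD AX, 0x1234
    q.prefetch(b)
print([q.next_byte() for _ in range(4)])
```

The final `None` in the output corresponds to the case where the Execution Unit has to wait for a memory access to complete.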

The loader

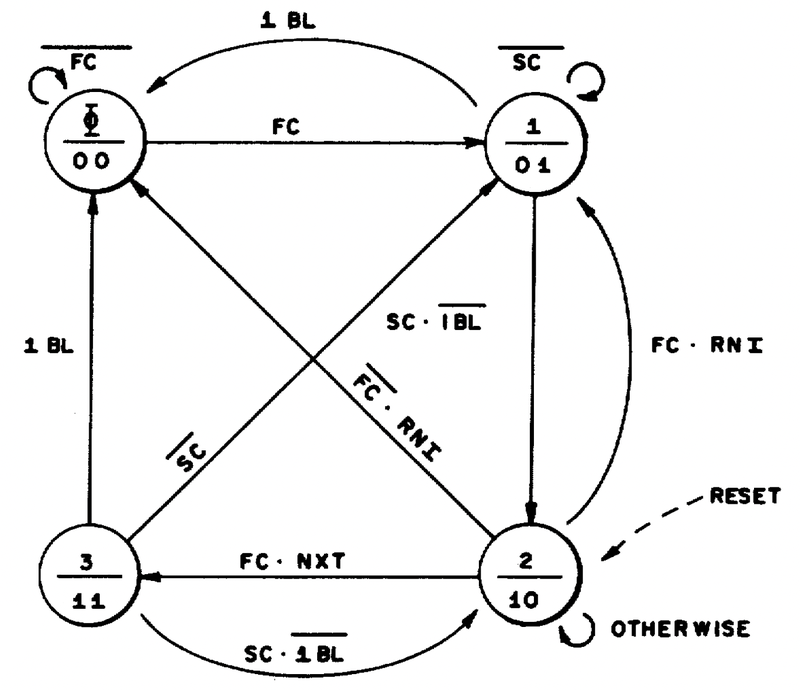

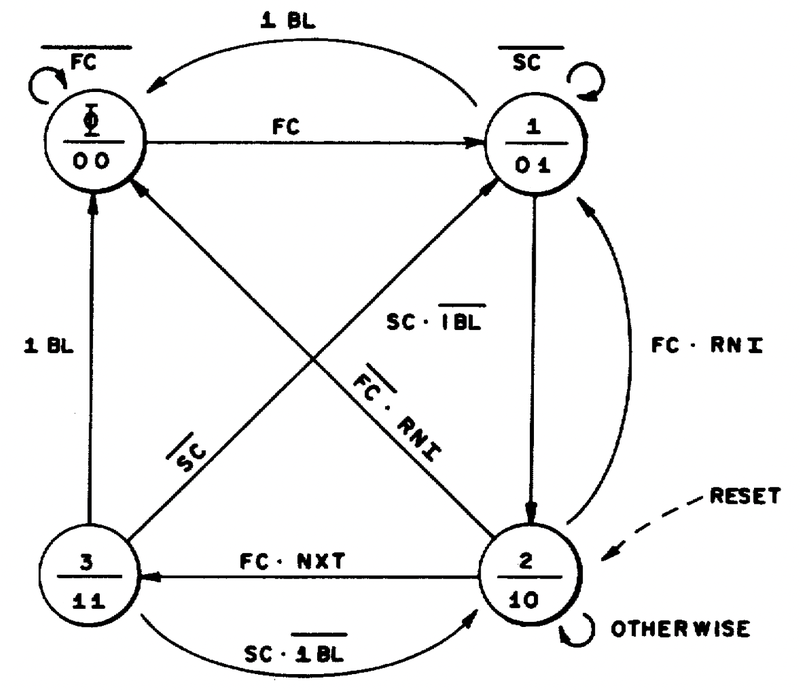

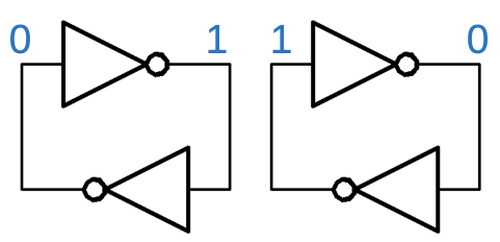

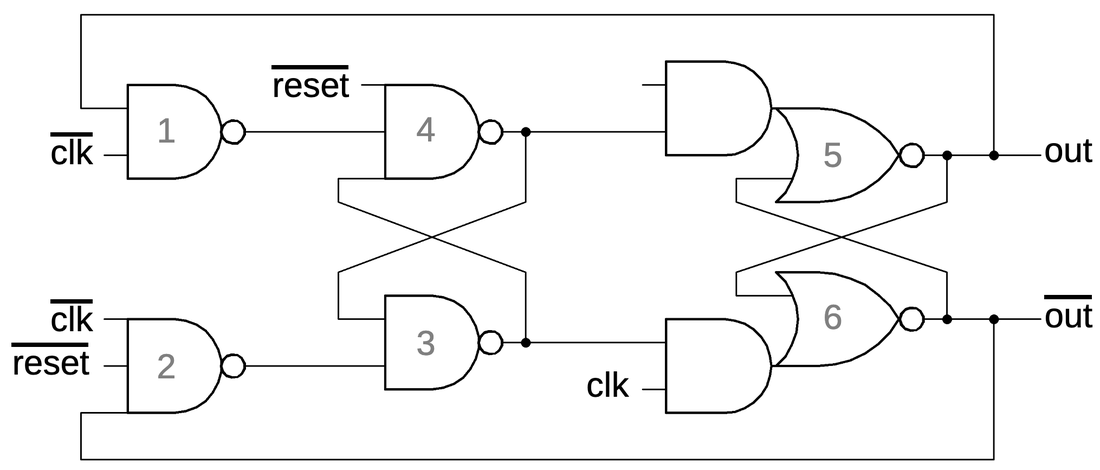

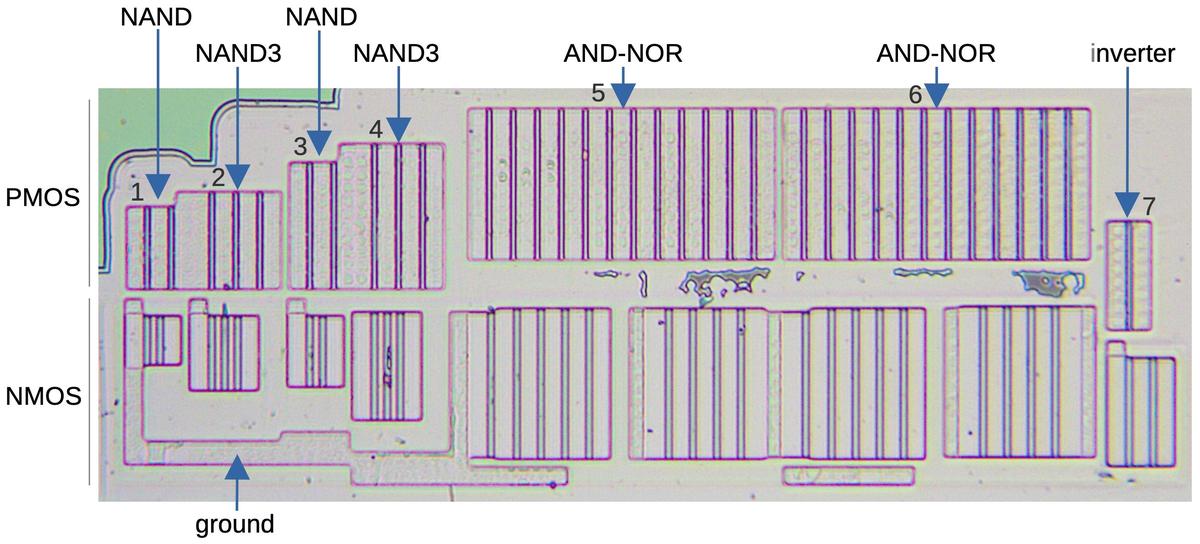

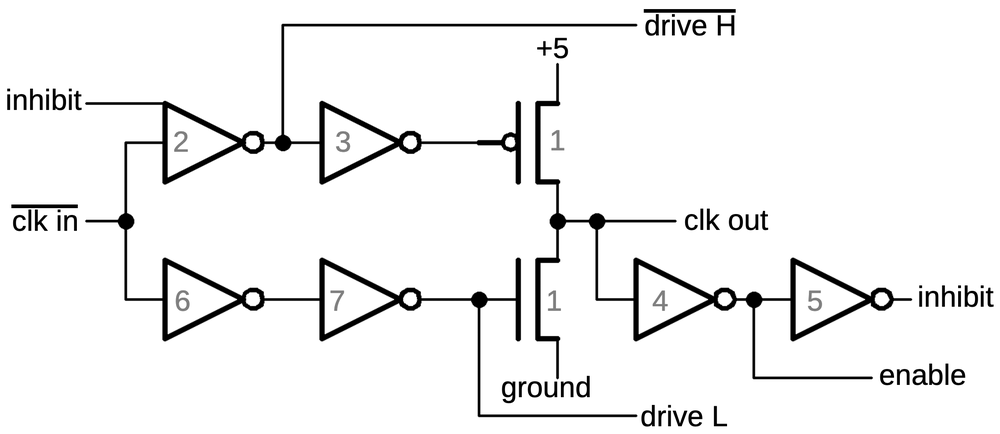

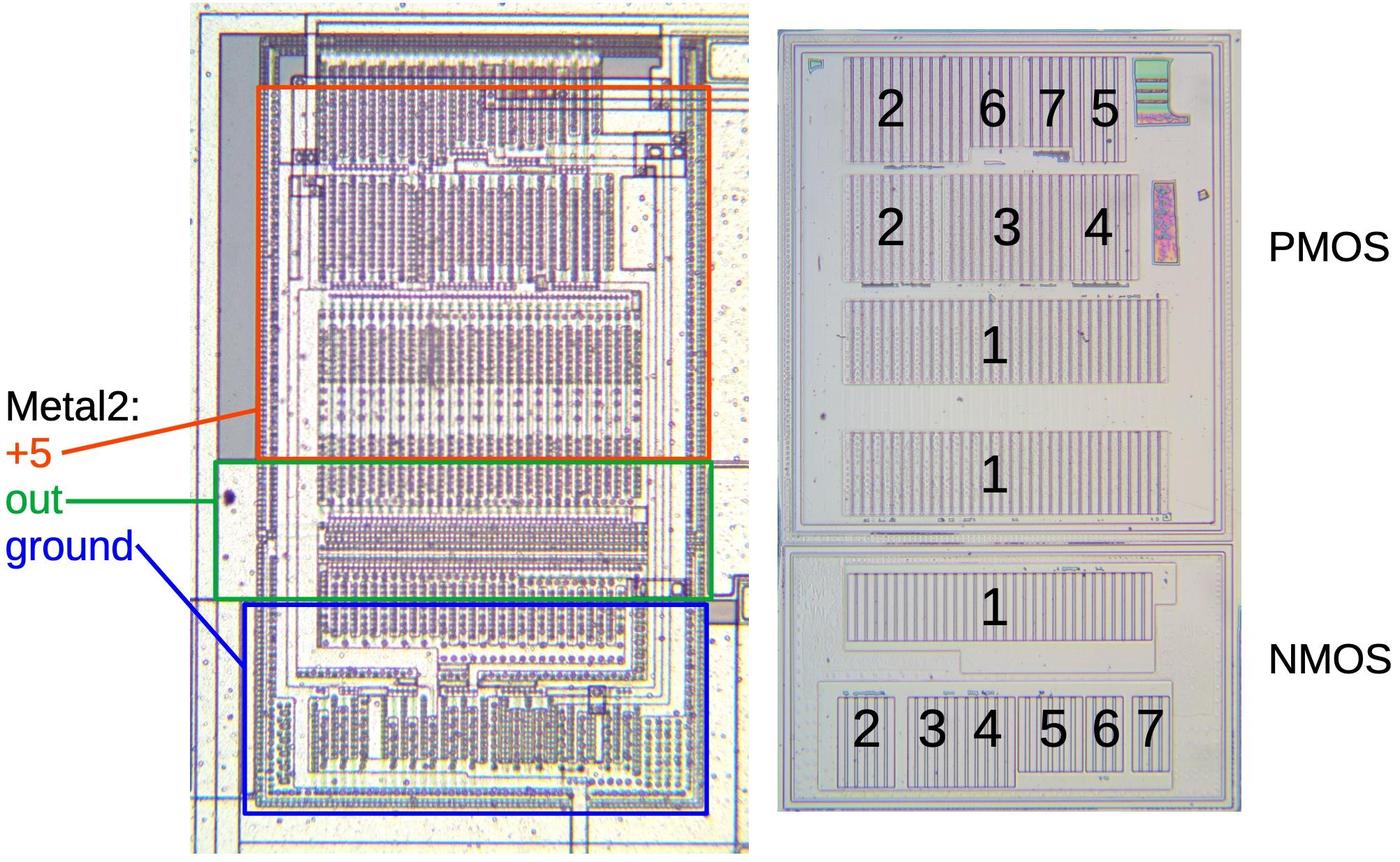

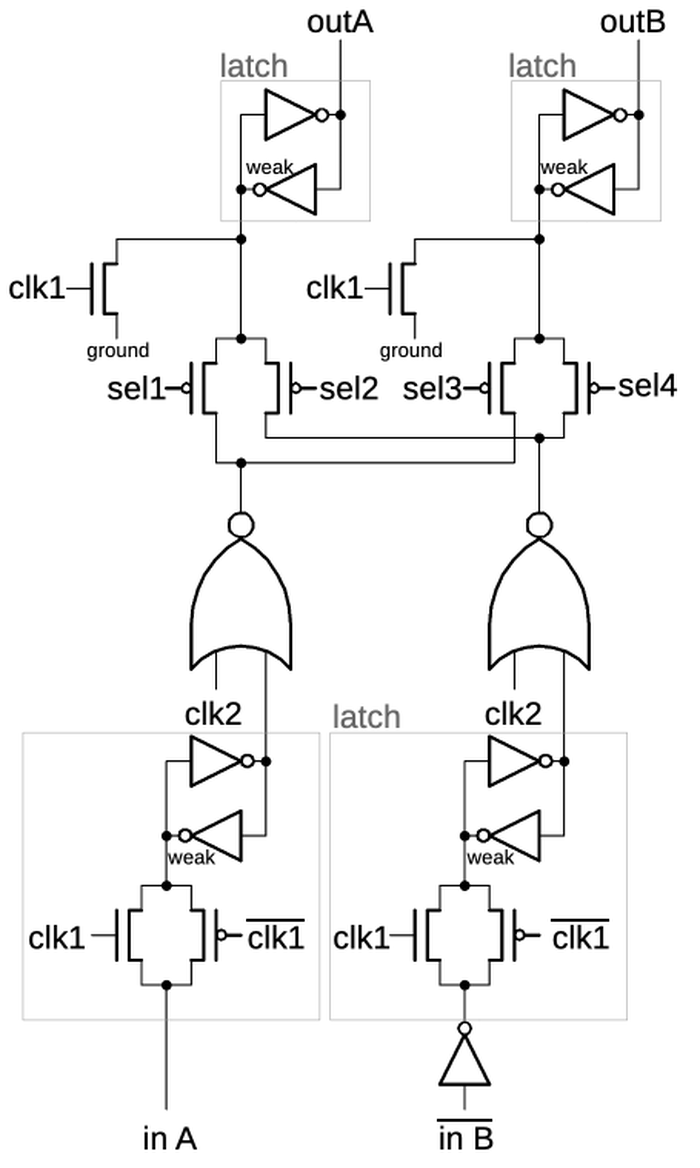

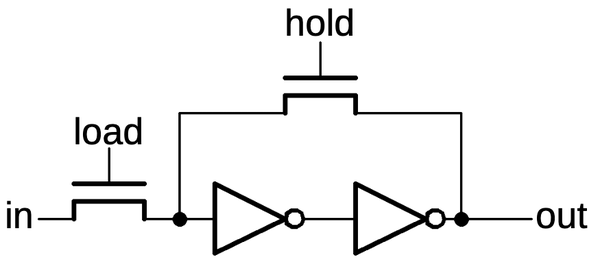

To decode and execute an instruction, the Execution Unit must get instruction bytes from the prefetch queue, but this is not entirely straightforward. The main problem is that the prefetch queue can be empty, blocking execution. Second, instruction decoding is relatively slow, so for maximum performance, the decoder needs a new byte before the current instruction is finished. A circuit called the "loader" solves these problems by using a small state machine (below) to efficiently fetch bytes from the queue at the right time.

The loader generates two timing signals that synchronize instruction decoding and microcode execution with the prefetch queue. The FC (First Clock) indicates that the first instruction byte is available, while the SC (Second Clock) indicates the second instruction byte. Note that the First Clock and Second Clock are not necessarily consecutive clock cycles because the first byte could be the last one in the queue, delaying the Second Clock.

At the end of a microcode sequence, the Run Next Instruction (RNI) micro-operation causes the loader to fetch the next machine instruction. However, microcode execution would be blocked for a cycle due to the delay of fetching and decoding the next instruction. In many cases, this can be avoided: if the microcode knows that it is one micro-instruction away from finishing, it issues a Next-to-last (NXT) micro-operation so the loader can start loading the next instruction before the previous instruction finishes. As will be shown in the next section, this usually allows micro-instructions to run without interruption.

Instruction execution

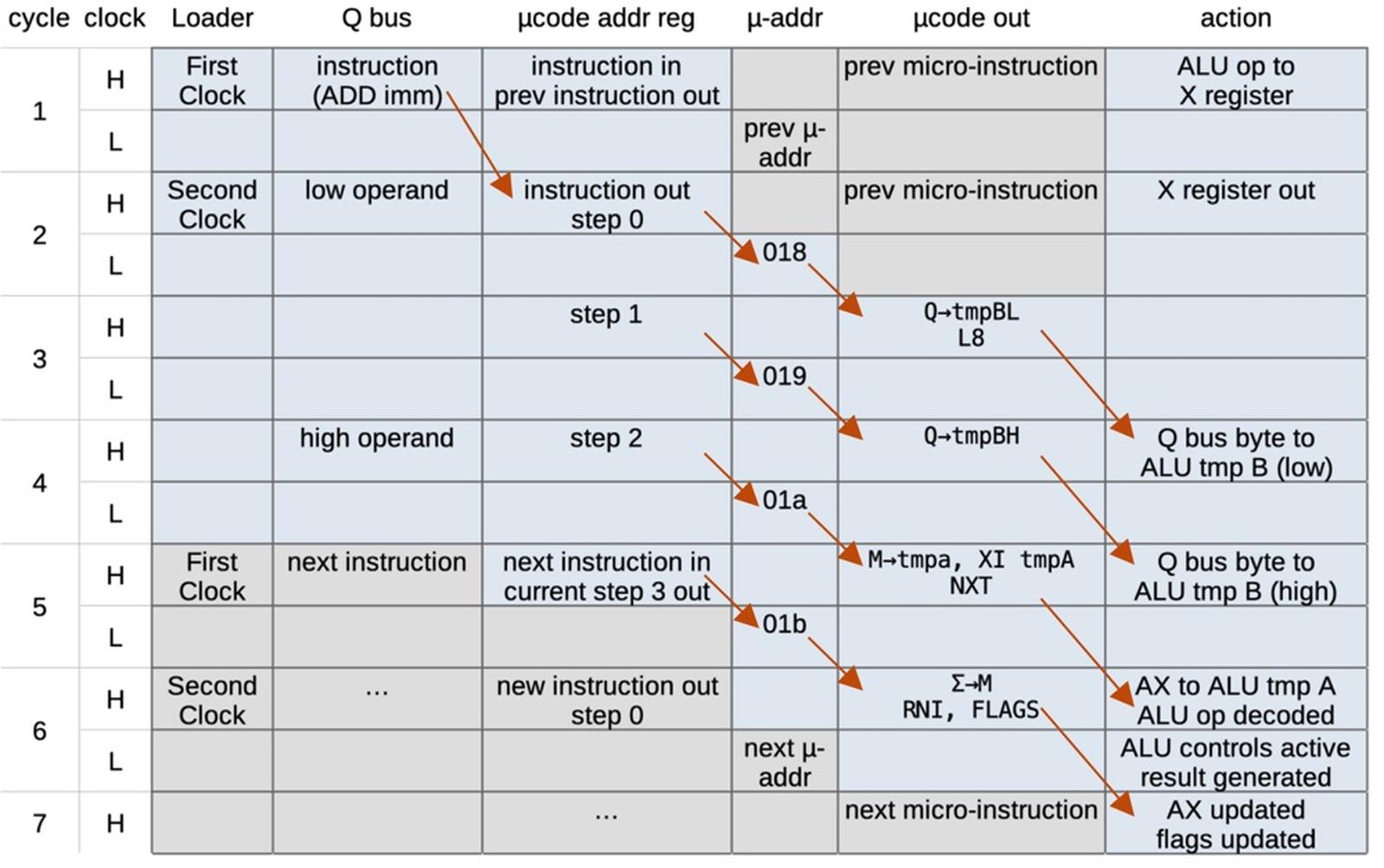

Putting this all together, we can see how the ADD instruction is executed, cycle by cycle. Each clock cycle starts with the clock high (H) and ends with the clock low (L).8 The sequence starts with the prefetch queue supplying the ADD instruction across the Q bus in cycle 1. The loader indicates that this is First Clock and the instruction is loaded into the microcode address register. It takes a clock cycle for the address to exit the address register (as indicated by an arrow) along with the microcode counter value indicating step 0. To remember the ALU operation, bits 5-3 of the instruction are saved in the internal X register (unrelated to the AX register).

In cycle 2, the prefetch queue has supplied the second byte of the instruction so the loader indicates Second Clock. In the second half of cycle 2, the microcode address decoder has converted the instruction-based address to the micro-address 018 and supplies it to the microcode ROM.

In cycle 3, the microcode ROM outputs the micro-instruction at micro-address 018: Q→tmpBL, which will move a byte from the prefetch queue bus (Q bus) to the low byte of the ALU temporary B register, as described earlier. It takes a full clock cycle for this action to take place, as the byte traverses buses to reach the register. This micro-instruction also generates the L8 micro-op, which will branch if an 8-bit operation is taking place. As this is a 16-bit operation, no branch takes place.9 Meanwhile, the microcode address register moves to step 1, causing the decoder to produce the micro-address 019.

In cycle 4, the prefetch queue provides a new byte, the high byte of the immediate value. The microcode ROM outputs the micro-instruction at micro-address 019: Q→tmpBH, which will move this byte from the prefetch queue bus to the high byte of the ALU temporary B register. As before, it takes a full cycle for this move to complete. Meanwhile, the microcode address register moves to step 2, causing the decoder to produce the micro-address 01a.

In cycle 5, the microcode ROM outputs the micro-instruction at micro-address 01a: M→tmpA,XI tmpA,NXT. Since the M (source) register specifies AX, the contents of the AX register will be moved into the ALU tmpA register, but this will take a cycle to complete. The XI tmpA part starts decoding the ALU operation saved in the X register, in this case ADD. Finally, NXT indicates that the next micro-instruction is the last one in this instruction. In combination with the next instruction on the Q bus, this causes the loader to issue First Clock. This starts execution of the next machine instruction, even though the current instruction is still executing.

In cycle 6, the microcode ROM outputs the micro-instruction at micro-address 01b: Σ→M,RNI. This will store the ALU output into the register indicated by M (i.e. AX), but not yet. In the first half of cycle 6, the ALU decoder determines the ALU control signals that will cause an ADD to take place. In the second half of cycle 6, the ALU receives these control signals and computes the sum. The RNI (Run Next Instruction) and the second instruction byte from the prefetch queue cause the loader to issue Second Clock, and the micro-address for the next machine instruction is sent to the microcode ROM.

Finally, in cycle 7, the sum is written to the AX register and the flags are updated, completing the ADD instruction. Meanwhile, the next instruction is well underway with its first micro-instruction being executed.

As you can see, execution of a micro-instruction is pipelined, with three full clock cycles from the arrival of an instruction until the first micro-instruction completes in cycle 4. Although this system is complex, in the best case it achieves the goal of running a micro-instruction each cycle, without gaps. (There are gaps in some cases, most commonly when the prefetch queue is empty. A gap will also occur if the microcode control flow doesn't allow a NXT micro-instruction to be issued. In that case, the loader can't issue First Clock until the RNI micro-instruction is issued, resulting in a delay.)
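The difference between the documented four cycles and the seven cycles of internal activity falls out of the cycle numbers in the walk-through above. The Python sketch below just tabulates those cycles; the event descriptions are my own summaries, and it assumes the best case where the prefetch queue always has the next byte ready.

```python
# Cycle in which each event for "ADD AX, immediate" occurs, per the walk-through above.
ADD_EVENTS = {
    1: "First Clock: opcode loaded into the microcode address register",
    2: "Second Clock: micro-address 018 supplied to the microcode ROM",
    3: "018: Q -> tmpBL",
    4: "019: Q -> tmpBH",
    5: "01a: M -> tmpA, XI tmpA, NXT (next instruction gets First Clock)",
    6: "01b: ALU computes the sum (next instruction gets Second Clock)",
    7: "sum written to AX, flags updated",
}

FIRST_CLOCK = 1        # cycle in which this instruction starts
NEXT_FIRST_CLOCK = 5   # cycle in which the following instruction starts

latency = max(ADD_EVENTS) - FIRST_CLOCK + 1      # cycles of internal activity
apparent_cost = NEXT_FIRST_CLOCK - FIRST_CLOCK   # cycles before the next instruction begins
overlap = latency - apparent_cost                # cycles shared with the next instruction

for cycle, event in sorted(ADD_EVENTS.items()):
    print(cycle, event)
print(f"latency={latency}, apparent cost={apparent_cost}, overlap={overlap}")
```

In other words, the instruction is active for seven cycles, but three of them overlap with the start of the next instruction, so each ADD appears to cost only four.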

Conclusions

The 8086 uses multiple types of pipelining to increase performance. I've focused on the pipelining at the microcode level, but the 8086 uses at least four interlocking types of pipelining. First, microcode pipelining allows micro-instructions to complete at the rate of one per clock cycle, even though it takes multiple cycles for a micro-instruction to complete. Admittedly, this pipeline is not very deep compared to the pipelines in RISC processors; the 8086 designers called the overlap in the microcode ROM a "sort of mini-pipeline."10

The second type of pipelining overlaps instruction decoding and execution. Instruction decoding is fairly complicated on the 8086 since there are many different formats of instructions, usually depending on the second byte (Mod R/M). The loader coordinates this pipelining, issuing the First Clock and Second Clock signals so decoding on the next instruction can start before the previous instruction has completed. Third is the prefetch queue, which overlaps fetching instructions from memory with execution. This is accomplished by partitioning the processor into the Bus Interface Unit and the Execution Unit, with the prefetch queue in between. (I recently wrote about instruction prefetching in detail.)

There's a final type of pipelining that I haven't discussed. Inside the memory access sequence, computing the memory address from a segment register and offset is overlapped with the previous memory access. The result is that memory accesses appear to take four cycles, even though they really take six cycles. I plan to write more about memory access in a later post.

The 8086 was a large advance in size, performance, and architecture compared to earlier microprocessors such as the Z80 (1976), 8085 (1977), and 6809 (1978). As well as moving to 16 bits, the 8086 had a considerably more complex architecture with instruction prefetching and microcode, among other features. At the same time, the 8086 avoided the architectural overreach of Intel's ill-fated iAPX 432, a complex processor that supported garbage collection and objects in hardware. Although the 8086's architecture had flaws, it was a success and led to the x86 architecture, still dominant today.

I plan to continue reverse-engineering the 8086 die so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff. If you're interested in the 8086, I wrote about the 8086 die, its die shrink process and the 8086 registers earlier.

Notes and references

-

The lowest bit of many 8086 instructions selects if the instruction operates on a byte or a word. Thus, many instructions in the instruction set appear in pairs. The support for byte operations gave the 16-bit 8086 processor compatibility with the older 8-bit 8080, if assembly code was suitably translated. ↩

-

The microcode for an ALU operation can select the first operand from tmpA, tmpB, or tmpC. The second operand is always tmpB. ↩

-

I don't know why Intel used XI to indicate the ALU opcode. I don't think it's the Greek letter Ξ, although they did use Σ (sigma) for the ALU output. The opcode is stored in the X register, so maybe XI is X Instruction? (It's also unclear why the register is called X.) ↩

-

Normally, the internal M register specifies the source register and the N register specifies the destination register, and these two registers are loaded from the instruction. However, some instructions only use the A or AX register, depending on whether the instruction acts on bytes or words. These instructions are the ALU immediate instructions, accumulator move instructions, string instructions, and the TEST, IN, and OUT instructions. For these instructions, the Group Decode ROM activates a signal that forces the M register to specify the AX register for a 16-bit operation, or the A register for an 8-bit operation. Thus, by specifying the M register in the microcode above, the same microcode is used for instructions with an 8-bit immediate argument or a 16-bit immediate argument. This also illustrates how the designers of the 8086 kept the microcode small by moving a lot of logic into hardware. ↩

-

I should mention that the pipelining in the 8086 is completely different from the parallelism in modern superscalar CPUs. The 8086 is executing instructions linearly, step-by-step, even though instructions overlap. There is only one execution path and no speculative execution, for instance. ↩

-

I showed a theoretical speedup from pipelining. Several issues make the real speedup smaller. First, the steps of an instruction typically don't take the same amount of time, so you're limited by the slowest step. Second, the overhead to handle the steps adds some delay. Finally, conflicts between instructions and other "hazards" may prevent overlap in various cases. ↩

-

The interaction between the prefetch queue and the Execution Unit is a "push" model rather than a "pull" model. If the prefetch queue contains a byte, the prefetch circuitry puts the byte on the Q bus and lets the Execution Unit know that a byte is available. The Execution Unit signals the prefetch circuitry when it uses a byte, and the prefetch queue moves to the next byte in the queue. If the Execution Unit needs a byte and it isn't ready, it blocks until a byte is available. The prefetch queue loads new words as it empties, when the memory bus isn't in use for other purposes. ↩

-

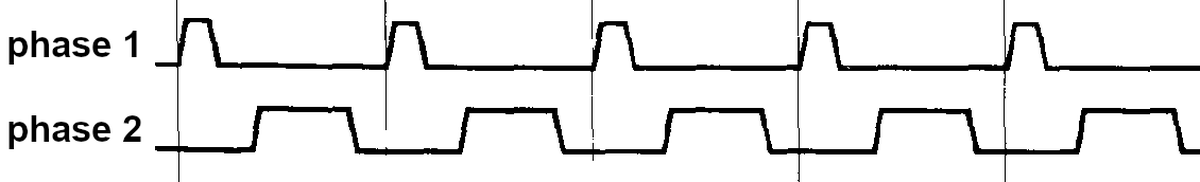

The 8086 is active during both phases (low and high) of the clock, with things happening both while the clock is high and while it is low. One unusual feature of the 8086 is that the clock signal is asymmetrical with a 33% duty cycle, so the clock is low for twice as long as the clock is high. In other words, the 8086 does twice as much (by time) during the low part of the clock cycle as during the high part of the clock cycle. There are multiple reasons why actions take a full clock cycle to complete. Much of the circuitry uses edge-triggered flip-flops to hold state. These latch data on one clock edge and move data internally during the other part of the clock. (The 8086 uses both positive-edge and negative-edge triggered flip flops; some latch when the clock goes high and others latch when the clock goes low.) Many control signals have their voltage level boosted by a bootstrap driver circuit, driven by the clock.

Many buses are precharged during one clock phase and then transmit a signal during the other phase. The motivation behind precharging the bus is that NMOS transistors are much better at pulling a line low than pulling it high (i.e. they can provide more current). This especially affects buses because they have relatively high capacitance due to their length, so pulling the bus high is slow. Thus, the bus is "leisurely" precharged to a high state during one clock phase, and then it can be rapidly pulled low (if the bit is a 0) and transmit the data during the other clock phase. ↩

-

You might expect that the 8-bit ADD would be faster than the 16-bit ADD since it is a 2-byte instruction instead of a 3-byte instruction and one micro-instruction is skipped. However, both the 8-bit and the 16-bit ADD instructions take 4 cycles. The reason is that branching to a new micro-instruction requires updating the microcode address register, which takes a clock cycle, resulting in a wasted clock cycle where no micro-instruction is executed. (Specifically, the next micro-instruction is on the way, so it is blocked by the ROM Enable (ROME) signal going low.) The result of this is that the branch for an 8-bit ADD costs an extra cycle, which cancels out the saved cycle. (In practice, the 16-bit instruction might be slower because it needs one more byte from the prefetch queue, which could cause a delay.) Just as a branch in the machine instructions can cause a delay (a "bubble") in the instruction pipeline, a branch in the microcode causes a delay in the micro-instruction pipeline. ↩

-

The design decisions for the 8086 are described in: J. McKevitt and J. Bayliss, "New options from big chips," in IEEE Spectrum, vol. 16, no. 3, pp. 28-34, March 1979, doi: 10.1109/MSPEC.1979.6367944. ↩

Subject: Inside the 8086 processor's instruction prefetch circuitry

The groundbreaking 8086 microprocessor was introduced by Intel in 1978 and led to the x86 architecture that still dominates desktop and server computing. One way that the 8086 increased performance was by prefetching: the processor fetches instructions from memory before they are needed, so the processor can execute them without waiting on the (relatively slow) memory. I've been reverse-engineering the 8086 from die photos and this blog post discusses what I've uncovered about the prefetch circuitry.

The 8086 was introduced at an interesting point in microprocessor history, where memory was becoming slower than the CPU. For the first microprocessors, the speed of the CPU and the speed of memory were comparable.1 However, as processors became faster, the speed of memory failed to keep up. The 8086 was probably the first microprocessor to prefetch instructions to improve performance. While modern microprocessors have megabytes of fast cache2 to act as a buffer between the CPU and much-slower main memory, the 8086 has just 6 bytes of prefetch queue. However, this was enough to increase performance by about 50%.3

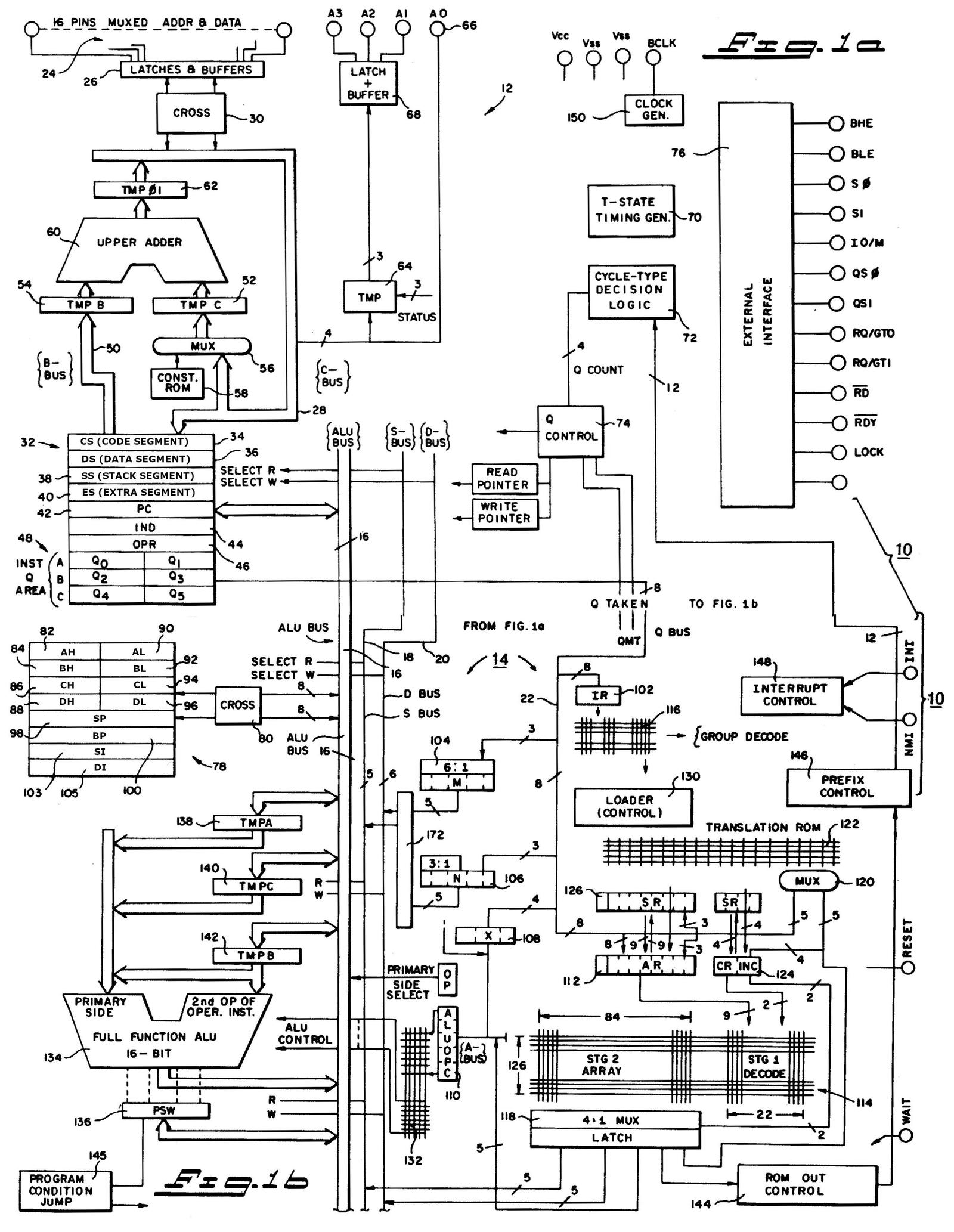

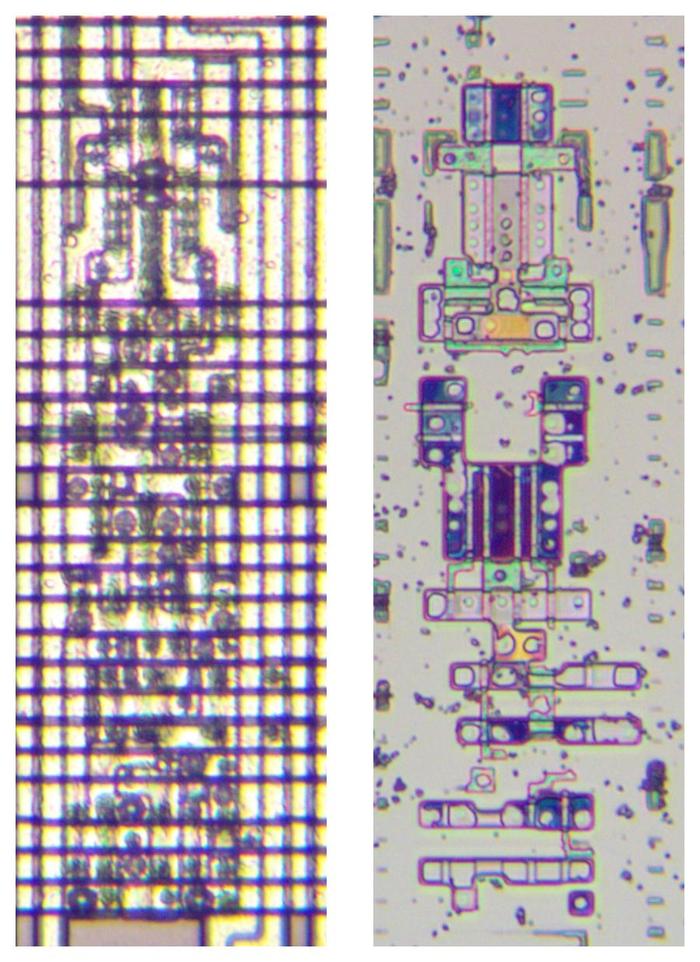

The die photo below shows the 8086 microprocessor under a microscope. The metal layer on top of the chip is visible, with the silicon and polysilicon mostly hidden underneath. Around the edges of the die, bond wires connect pads to the chip's 40 external pins. I've labeled the key functional blocks; ones that are important to the prefetch queue are highlighted in red and will be discussed in detail below. Architecturally, the chip is partitioned into a Bus Interface Unit (BIU) at the top and an Execution Unit (EU) below. The BIU handles memory accesses, while the EU executes instructions.

Prefetching and the architecture of the 8086

Prefetching had a major impact on the design of the 8086. Earlier processors such as the 6502, 8080, or Z80 were deterministic. The processor fetched an instruction, executed the instruction, fetched the next instruction, and so forth. Memory accesses corresponded directly to instruction fetching and execution and instructions took a predictable number of clock cycles. This all changed with the introduction of the prefetch queue. Memory operations became unlinked from instruction execution since prefetches happen as needed and when the memory bus is available.

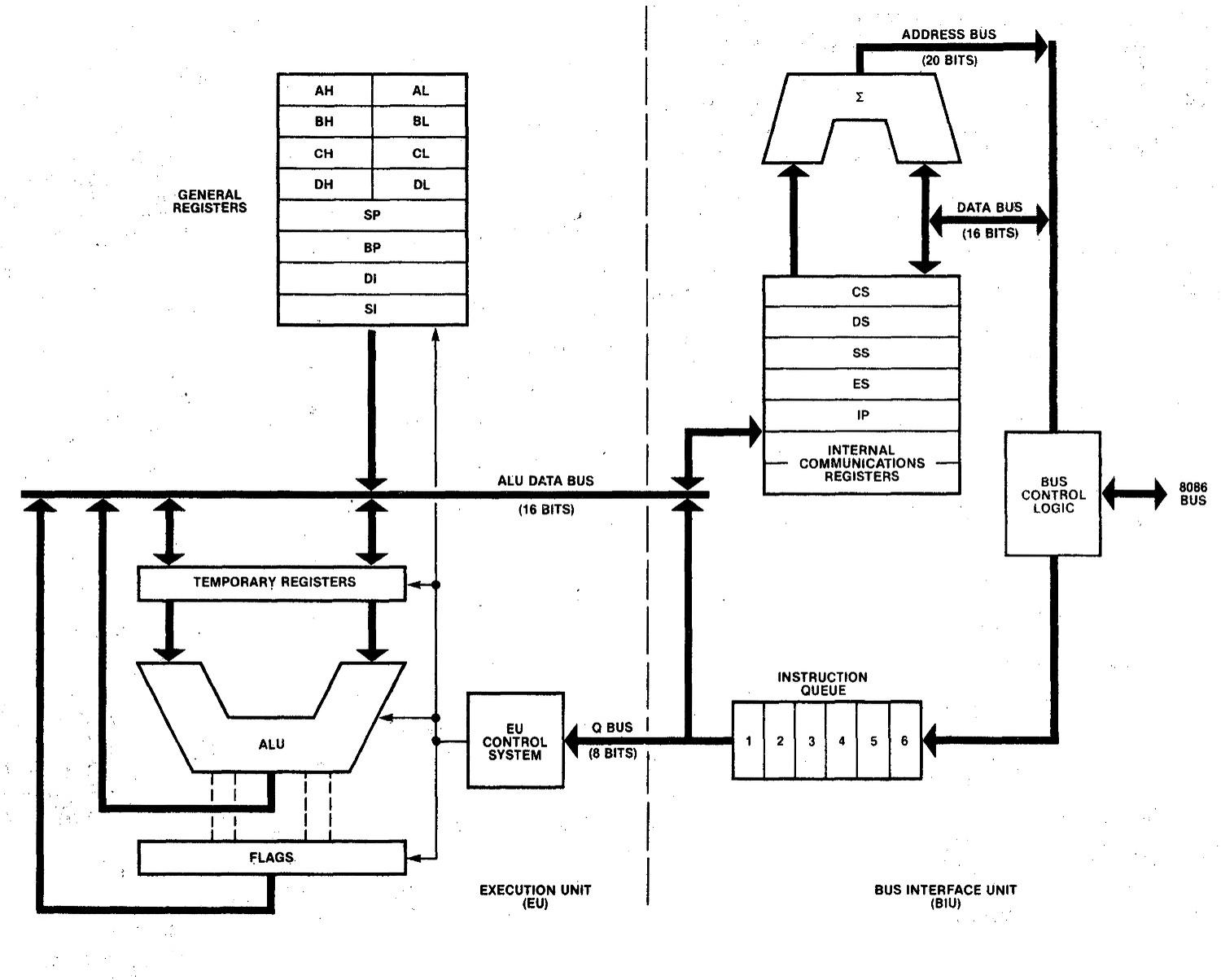

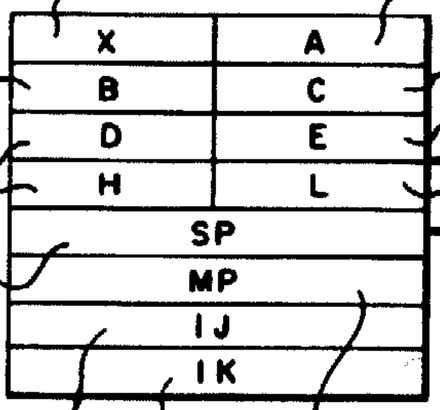

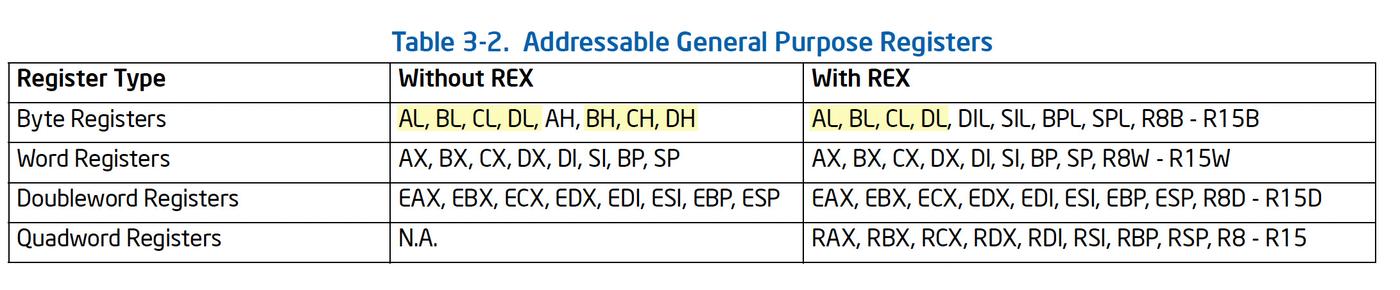

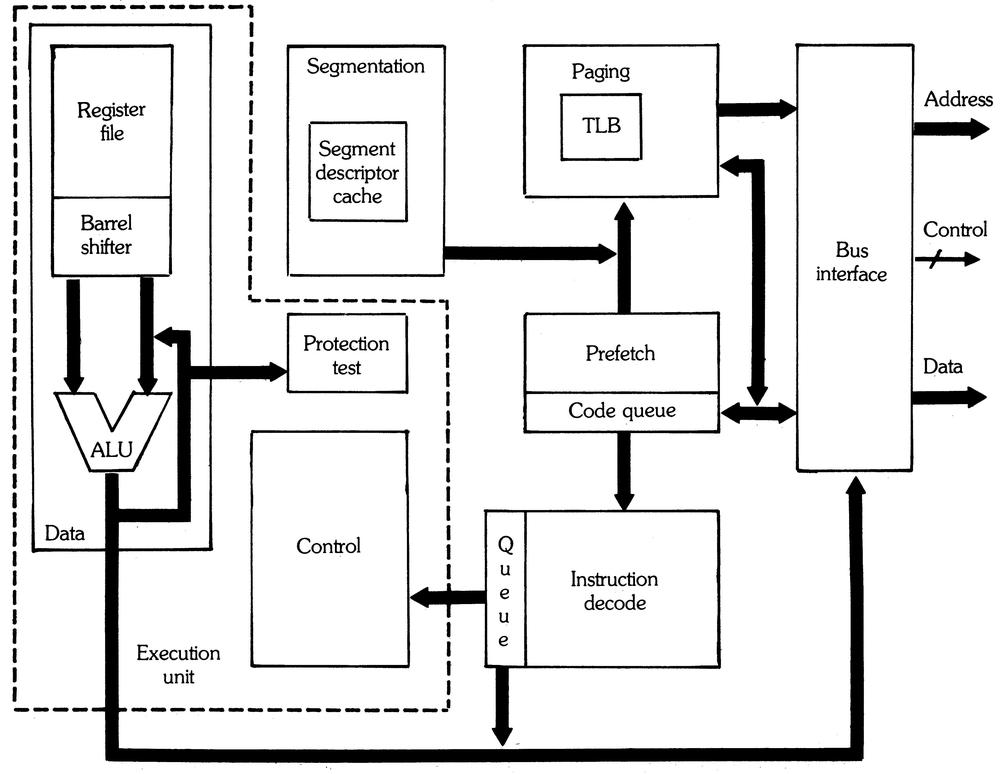

Since memory operations and instruction execution happen independently, the implementors of the 8086 split the chip into two processing units: the Bus Interface Unit (BIU) that handles memory accesses, and the Execution Unit (EU) that executes instructions, as shown below.4 The Bus Interface Unit contains the 6-byte instruction prefetch queue; it supplies instructions to the Execution Unit via the Q (queue) bus. The adder (Σ) performs address calculation, adding the segment register base to an address offset, among other things. The Execution Unit is what comes to mind when you think of a processor: it has most of the registers, the arithmetic/logic unit (ALU), and the microcode that implements instructions. The address adder and the ALU are independent arithmetic units. The segment registers (CS, DS, SS, ES) and the Instruction Pointer (IP) are in the Bus Interface Unit since they are directly involved in memory accesses, while the general-purpose registers are in the Execution Unit.

The 8086's segment registers play an important part in this architecture, so I'll review them quickly. One of the challenges of the 8086 was how to support more than 64K of memory with 16-bit registers. The much-reviled solution was to create a 1-megabyte (20-bit) address space consisting of 64K segments, with segment registers indicating the start of each segment. Specifically, a memory address was specified by a 16-bit offset address along with a particular segment register selecting a segment (Code Segment, Data Segment, Stack Segment, or Extra Segment). The segment register's value was shifted by 4 bits to give the segment's 20-bit base address. The 16-bit offset address was added, yielding a 20-bit memory address. This gave the processor a 1-megabyte address space, although only 64K could be accessed without changing a segment register.
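The address calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name `physical_address` is made up for this example, and the final mask models the address wrapping around at 1 megabyte because the 8086 has only 20 address lines.

```python
def physical_address(segment: int, offset: int) -> int:
    """Combine a 16-bit segment value and a 16-bit offset into a 20-bit address."""
    base = (segment & 0xFFFF) << 4                  # shift left 4 bits: 20-bit segment base
    return (base + (offset & 0xFFFF)) & 0xFFFFF     # add the offset, keep 20 bits


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0x1235, 0x0000)))  # 0x12350 (same address, different segment)
```

Note that many different segment/offset pairs refer to the same memory location, since segments start every 16 bytes and overlap.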

It may seem inefficient for the Bus Interface Unit to have its own adder instead of using the ALU, but there are a couple of reasons for the separate adder. First, every memory access uses the adder at least once to add the segment base and offset. The adder is also used to increment the PC or index registers. Since these operations are so frequent, they would create a bottleneck if they used the ALU. Second, since the Execution Unit and the Bus Interface Unit run asynchronously with respect to each other, it would be complicated to share the ALU without causing delays and conflicts.

Prefetching had another major but little-known effect on the 8086 architecture: the designers were considering making the 8086 a two-chip microprocessor. Prefetching, however, required a one-chip design because the number of control signals required to synchronize prefetching across two chips exceeded the package pins available. This became a compelling argument for the one-chip design that was used for the 8086.3 (The unsuccessful Intel iAPX 432, which was under development at the same time, ended up being a two-chip processor: one to fetch and decode instructions, and one to execute them.)

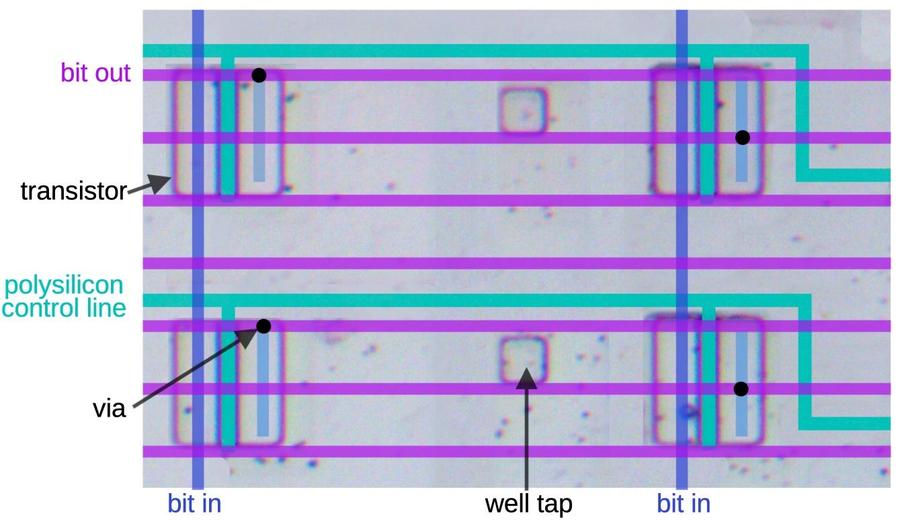

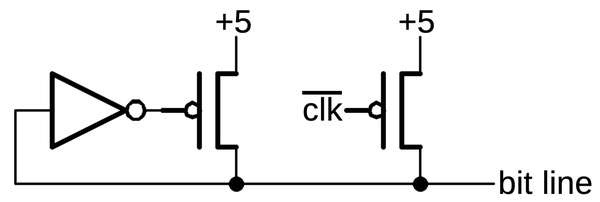

Implementing the queue

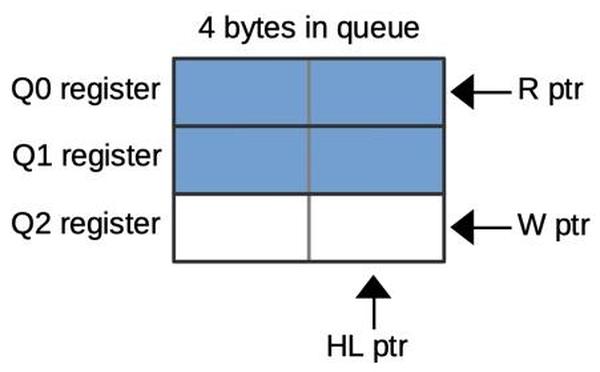

The instruction prefetch queue is implemented with three 16-bit queue registers along with two hardware pointers that keep track of the current position in the queue. One two-bit counter keeps track of the current read position from 0 to 2, i.e. the queue register that will provide the next instruction. The second counter keeps track of the current write position, i.e. the queue register that will receive the next instruction from memory. As words are fetched from the queue, the read pointer advances. As words are added to the queue, the write pointer advances. Because the queue registers hold words, while the prefetch circuitry provides bytes, another flip-flop keeps track of whether the high byte or the low byte of the word is being used. I call this the HL flip-flop. This causes the low byte to be provided first and then the high byte (since the 8086 is little-endian).

The diagram below shows an example queue configuration with four bytes. The first two queue registers (Q0 and Q1) hold data. The read pointer and HL pointer indicate that the next prefetched byte will come from the low byte of Q0. The write pointer indicates that the next prefetched word will go into Q2.

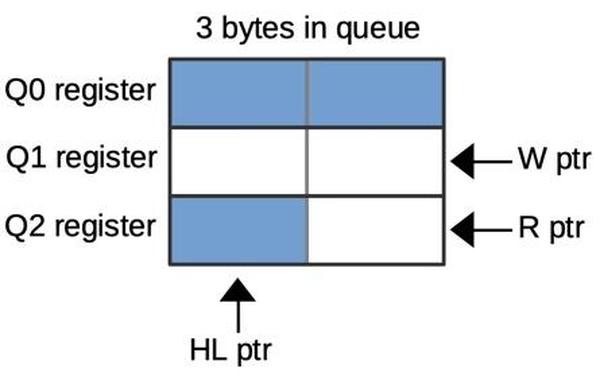

The diagram below shows how the queue pointers can wrap around. In this configuration, one byte has been used from Q2 so the next byte will be Q2's high byte. Q0 holds the next prefetched word. The next word to be prefetched will be stored in Q1, as indicated by the write pointer.

The relative positions of the write and read pointers indicate how much data is in the queue. If the write pointer is one position before the read pointer (modulo 3), the queue holds 3 or 4 bytes. If the write pointer is one position after the read pointer (modulo 3), the queue holds 1 or 2 bytes. But what about when the read pointer and write pointer indicate the same register? This can either indicate that the queue is empty or that the queue is full (5 or 6 bytes). To distinguish these cases, a flip-flop is set if the queue enters the empty state. This flip-flop generates a signal that Intel called MT (empty).
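To make the pointer arithmetic concrete, here is a rough Python sketch that computes the number of unread bytes from the two pointers, the HL flip-flop, and the MT flag. The function name and argument encoding are my own assumptions for illustration; the real chip derives the length with a handful of gates rather than arithmetic.

```python
def queue_length_bytes(read_ptr: int, write_ptr: int, hl: int, mt: bool) -> int:
    """Unread bytes in a queue of three 16-bit registers.

    read_ptr, write_ptr: register indices 0..2
    hl: 0 if the low byte of the read register is next, 1 if the high byte is next
    mt: True if the queue is flagged as empty
    """
    if mt:
        return 0
    words = (write_ptr - read_ptr) % 3   # registers holding data
    if words == 0:
        words = 3                        # pointers equal but not empty: queue is full
    return 2 * words - hl                # one byte already used if HL is set


print(queue_length_bytes(0, 2, 0, False))  # 4: Q0 and Q1 hold data, nothing used yet
print(queue_length_bytes(2, 1, 1, False))  # 3: high byte of Q2 plus the word in Q0
print(queue_length_bytes(1, 1, 0, False))  # 6: full
print(queue_length_bytes(1, 1, 0, True))   # 0: empty
```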

Another complication occurs if you jump to an odd address. Because of its 16-bit bus, the 8086 will prefetch a word from the even address one less. This loads one usable byte and one byte that needs to be discarded. The 8086 handles this by setting the HL flip-flop high, using a handful of gates to detect this case. As in the diagram above, the unwanted low byte will be skipped.
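The behavior of the queue, including the odd-address case, can be modeled with a small Python class. This is a toy model under my own assumptions (class, method, and attribute names are hypothetical, and pointers are simply reset to 0 on a flush); it mimics the bookkeeping described above, not the actual circuit.

```python
class PrefetchQueue:
    """Toy model: three 16-bit registers, read/write pointers, HL and MT flip-flops."""

    def __init__(self) -> None:
        self.regs = [0, 0, 0]
        self.flush(0)

    def flush(self, address: int) -> None:
        """Empty the queue and restart prefetching at `address` (e.g. after a jump)."""
        self.read_ptr = 0
        self.write_ptr = 0
        self.mt = True
        self.hl = address & 1              # odd target: skip the unwanted low byte
        self.fetch_address = address & ~1  # words are fetched from even addresses

    def is_full(self) -> bool:
        return not self.mt and self.read_ptr == self.write_ptr

    def push_word(self, word: int) -> None:
        """Store a prefetched word (the low byte comes from the lower address)."""
        assert not self.is_full()
        self.regs[self.write_ptr] = word & 0xFFFF
        self.write_ptr = (self.write_ptr + 1) % 3
        self.mt = False
        self.fetch_address += 2

    def pop_byte(self) -> int:
        """Return the next instruction byte: low byte first, then high byte."""
        assert not self.mt
        word = self.regs[self.read_ptr]
        if self.hl == 0:
            self.hl = 1
            return word & 0xFF
        self.hl = 0
        self.read_ptr = (self.read_ptr + 1) % 3
        self.mt = self.read_ptr == self.write_ptr
        return word >> 8


q = PrefetchQueue()
q.flush(0x0101)            # jump to an odd address
q.push_word(0xBBAA)        # word from 0x0100: 0xAA at 0x0100, 0xBB at 0x0101
print(hex(q.pop_byte()))   # 0xbb: the byte at 0x0100 is skipped
```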

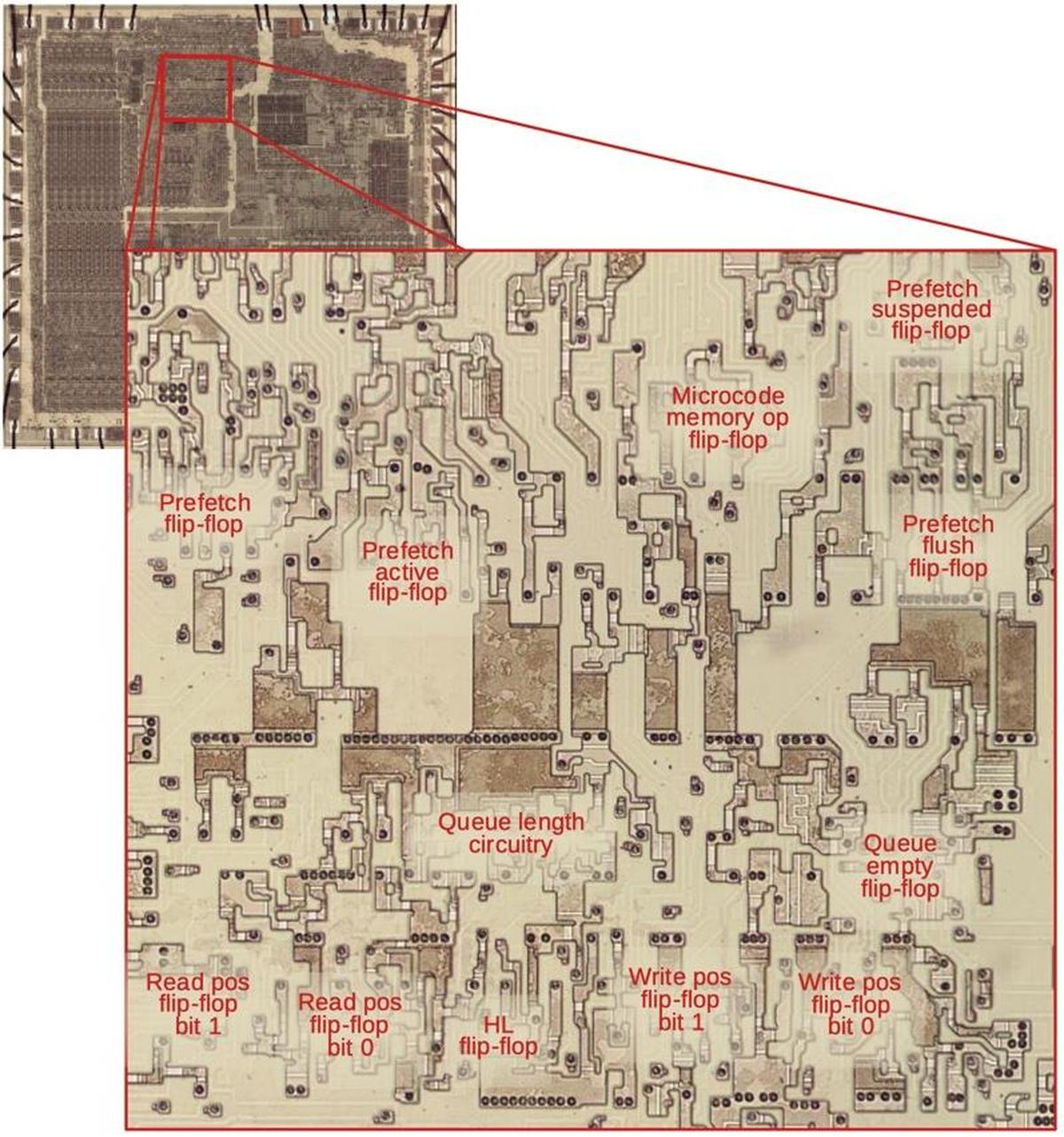

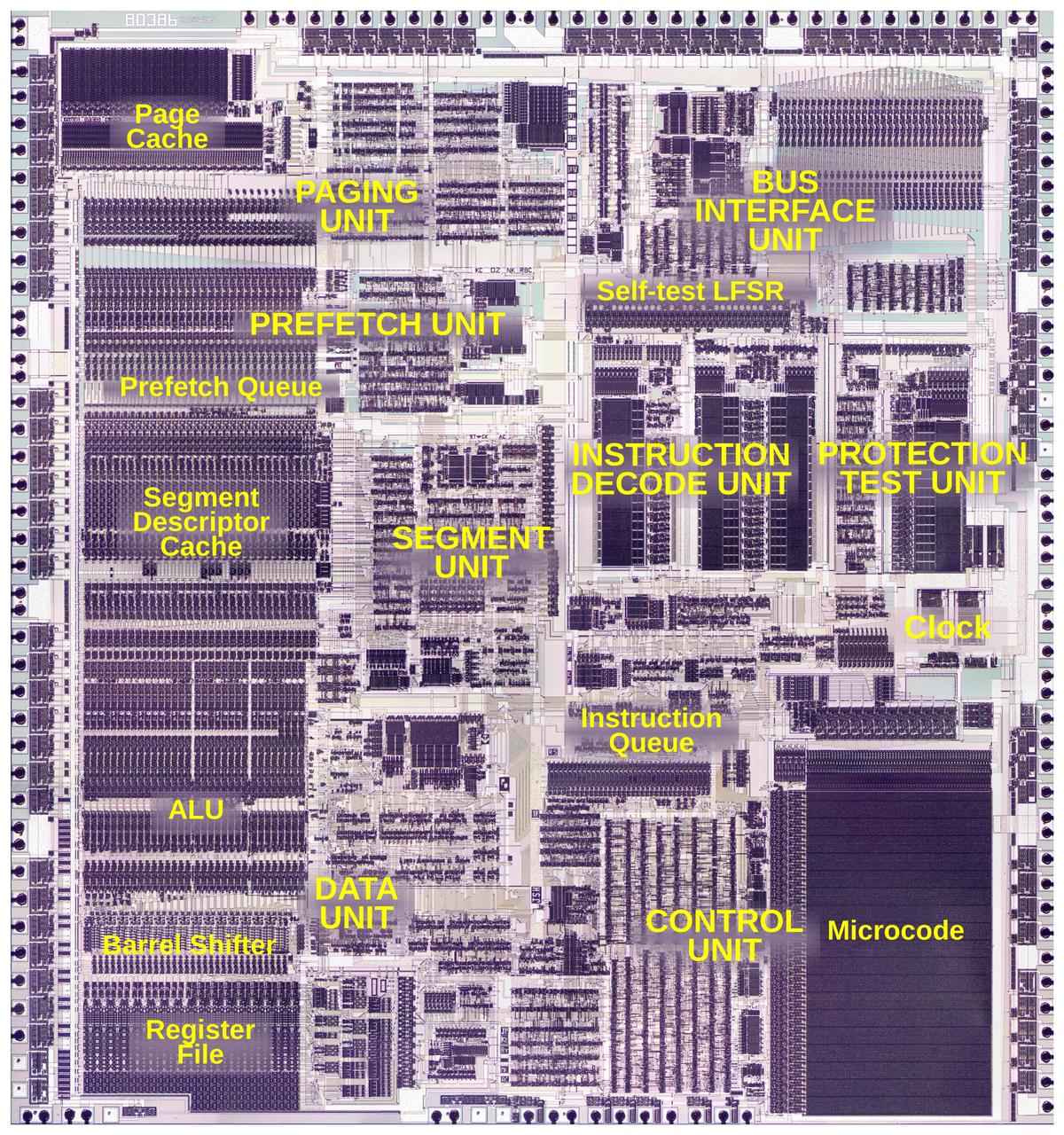

The diagram below zooms in on the prefetch and queue control circuitry on the die, with the main flip-flops and circuitry labeled. The lower half manages the queue, keeping track of the read and write positions and computing the queue length. The upper circuitry controls prefetch operations and interacts with the rest of the memory cycle circuitry.

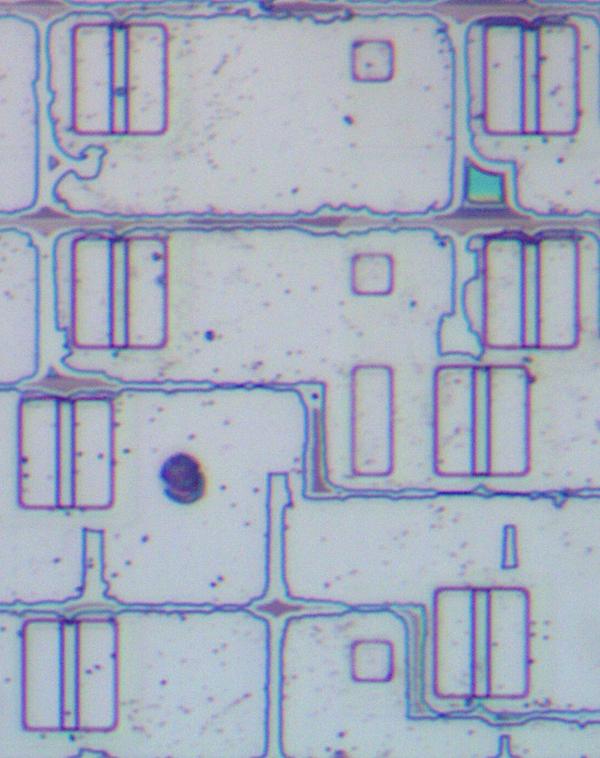

Even though there is not a lot of circuitry involved (about a dozen flip-flops and associated logic gates), this circuitry occupies a substantial part of the 8086 die. (The relatively small amount of circuitry did not make this easy to reverse-engineer, however!) Compared to modern chips, the density of the 8086 is very low; you can almost see the flip-flops with the naked eye. This diagram only shows the circuitry directly involved in prefetching. Additional circuitry is scattered through the memory cycle control circuitry to deal with prefetching, and the queue registers take up a substantial part of the register file. Thus, prefetching was a moderately expensive feature for the 8086, as far as die area.

The loader

To decode and execute an instruction, the Execution Unit must get instruction bytes from the Bus Interface Unit, but this is not entirely straightforward. The main problem is that the queue can be empty, in which case instruction decoding must block until a byte is available from the queue. The second problem is that instruction decoding is relatively slow, so for maximum performance, the decoder needs a new byte before the current instruction is finished. A circuit called the "loader" solves these problems by providing synchronization between the prefetch queue and the instruction decoder. The loader uses a small state machine to efficiently fetch bytes from the queue at the right time and to provide timing signals to the decoder and microcode engine.

In more detail, as the loader requests the first two instruction bytes from the prefetch queue, it generates two timing signals that control the microcode execution. The FC (First Clock) indicates that the first instruction byte is available, while the SC (Second Clock) indicates the second instruction byte. Note that the First Clock and Second Clock are not necessarily consecutive clock cycles because the prefetch queue could be empty or contain just one byte, in which case the First Clock and/or Second Clock would be delayed. The instruction decoding circuitry and the microcode engine are controlled by the First Clock and Second Clock signals, so they remain synchronized with the bytes supplied by the prefetch queue.

At the end of a microcode sequence, the Run Next Instruction (RNI) micro-operation causes the loader to fetch the next machine instruction. However, fetching and decoding the next instruction is a bit slow so microcode execution would be blocked for a cycle. In many cases, this slowdown can be avoided: if the microcode knows that it is one micro-instruction away from finishing, it issues a Next-to-last (NXT) micro-operation so the loader can start loading the next instruction. This achieves a degree of pipelining in most cases; fetching the next instruction is overlapped with finishing the execution of the previous instruction.

The diagram above shows the state machine for the loader. I won't explain it in detail, but essentially it keeps track of whether it is waiting for a First Clock byte or a Second Clock byte, and if it is performing a fetch in advance (NXT) or at the end of an instruction (RNI). The state machine is implemented with two flip-flops to support its four states.

Other memory accesses

The loader takes care of fetching an instruction that consists of an opcode byte and a Mod R/M (addressing mode) byte. However, many instructions have additional bytes or don't follow this format. For example, an opcode such as "ADD AX" can be followed by an 8- or 16-bit immediate value, adding that value to the AX register. Or a "move memory to AX" instruction can be followed by a 16-bit memory address. The microcode uses a separate mechanism for fetching these instruction bytes from the queue. Specifically, each micro-instruction contains a source register and a destination register that specify a data move. By specifying "Q" (the queue) as the source, a byte is fetched from the prefetch queue.

A third path is used for arbitrary memory reads and writes, such as when an instruction stores a register's contents to memory. In this case, the microcode puts the memory address in the IND (indirect) register. The microcode then issues a read or write micro-operation which causes the memory contents to be read into the OPR (operand register) or written from the OPR. (The IND and OPR registers are internal 8086 registers that are not visible to the programmer.) In the 8086, a memory cycle takes at least four clock cycles (called T1 through T4), including adding the segment register to compute the memory address. An "unaligned" memory access takes twice as long, though, because the 8086 has a word-based 16-bit data bus. Thus, if you try to access a word from an odd address, two memory accesses are required, one for the first byte and one for the second byte.5
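As a quick sketch of the alignment penalty, the following Python computes the minimum number of clock cycles for one access. The function names are hypothetical, and the figures are lower bounds that assume four clocks (T1 through T4) per bus cycle with no added wait states.

```python
def bus_cycles(address: int, is_word: bool) -> int:
    """Bus cycles needed for one access on the 16-bit data bus."""
    if is_word and address & 1:
        return 2      # word at an odd address: one bus cycle per byte
    return 1          # byte access, or word at an even address


def min_clocks(address: int, is_word: bool) -> int:
    """Minimum clock cycles, assuming four clocks per bus cycle and no wait states."""
    return 4 * bus_cycles(address, is_word)


print(min_clocks(0x0100, True))   # 4: aligned word
print(min_clocks(0x0101, True))   # 8: unaligned word
print(min_clocks(0x0101, False))  # 4: a single byte is never unaligned
```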

As you can see, a memory access is a fairly complex operation. In the 8086, all these steps are done by hardware in the Bus Interface Unit, rather than being performed by microcode. (I'll discuss the complex memory control circuitry in detail in a future post.) After issuing a memory read or write, the microcode engine is blocked until the memory request completes.

Microcode instructions and the correction circuitry

The microcode interacts with prefetching in several ways. In addition to requesting a byte from the queue (as discussed above), microcode can perform three micro-instructions that involve prefetching: SUSP, FLUSH, and CORR. The SUSP (suspend) micro-instruction stops prefetching, typically before a change to execution flow. The FLUSH micro-instruction flushes the prefetch queue and resumes prefetching. To implement these, the prefetching circuitry has a flip-flop to keep track of the suspended state, and logic to reset the queue pointer counters to flush the queue.

The CORR (correct) micro-instruction corrects the Instruction Pointer to point to the next execution position. This is an interesting and more complicated micro-instruction. Like most processors, the 8086 has a program counter (PC) to keep track of what instruction to execute; the 8086 calls this the Instruction Pointer (IP). In the programmer's view, the Instruction Pointer points to the memory address of the next instruction to execute. However, in the hardware, the Instruction Pointer points to the next instruction to be fetched, which is generally several bytes after the next instruction to be executed.6

For the most part, this doesn't matter; the queue provides instructions in the order they were fetched and it doesn't matter if the Instruction Pointer runs ahead. However, there are a few cases where the "real" Instruction Pointer address is needed. For example, a relative jump instruction causes execution to jump to an address relative to the current instruction. When performing a subroutine call, the return address must be pushed on the stack. The correct Instruction Pointer value is also needed for an interrupt. Thus, the 8086 needs a mechanism to compute the real Instruction Pointer value from the value in the Instruction Pointer register.

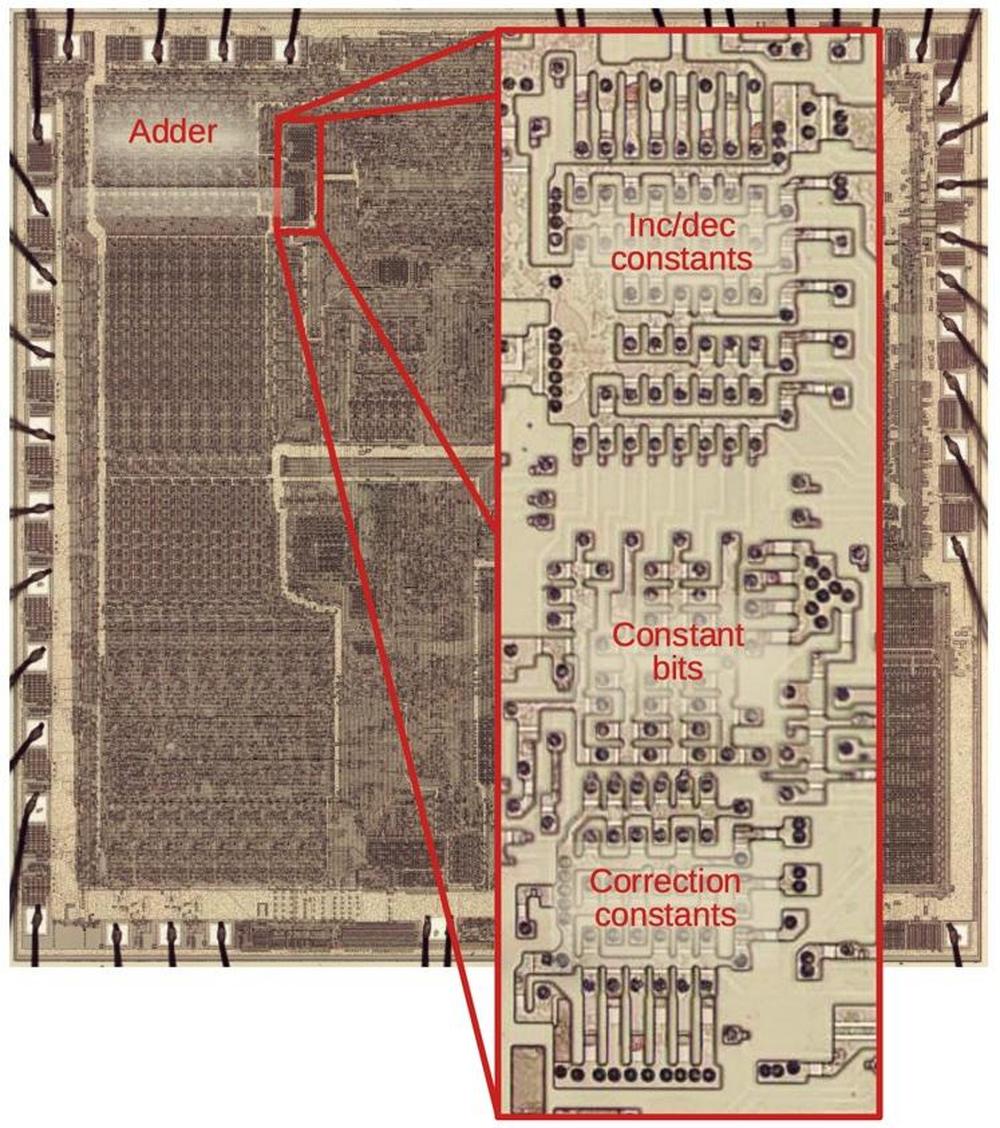

The solution is the CORR micro-instruction, which corrects the Instruction Pointer value by subtracting the prefetch queue length, so the Instruction Pointer holds the "true" value. For instance, if there are 4 bytes in the queue, then the address in the Instruction Pointer register is four more than the desired Instruction Pointer address. The Bus Interface Unit performs this subtraction by using the addressing adder and a small table of constants called the Constant ROM.7
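In software terms, the correction amounts to the subtraction below. This is a minimal Python sketch with a hypothetical function name; the hardware instead adds a negative constant selected from the Constant ROM, and the 16-bit mask here models the Instruction Pointer being a 16-bit register.

```python
def corrected_ip(ip_register: int, queue_bytes: int) -> int:
    """Address of the next instruction to execute, given the prefetch pointer."""
    assert 0 <= queue_bytes <= 6
    return (ip_register - queue_bytes) & 0xFFFF   # wrap around within the 64K segment


print(hex(corrected_ip(0x0104, 4)))  # 0x100: four bytes were fetched but not yet executed
print(hex(corrected_ip(0x0002, 4)))  # 0xfffe: the result wraps within 16 bits
```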

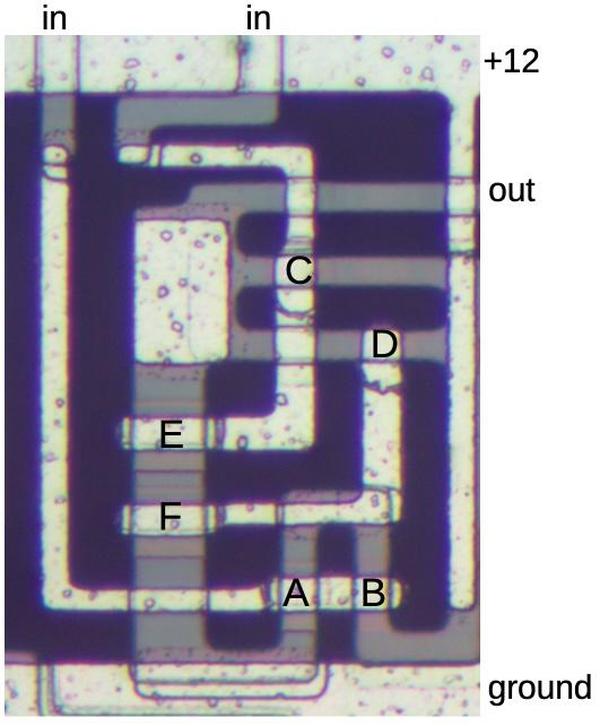

The diagram below zooms in on the Constant ROM, located next to the adder. The Constant ROM is implemented as a PLA (programmable logic array), a two-level structured arrangement of gates. The first level (bottom) selects the desired correction constant, while the second level (middle) generates the bits of the constant: three bits plus a sign bit. The necessary correction constant is selected based on the length of the queue in words, the HL pointer, and the empty (MT) flag.

The Constant ROM is used for more than just address correction. For example, it is also used to increment the Instruction Pointer by 2 after a prefetch. Other constants are used for the 8086's string operations, which act on a block of memory. The index registers are incremented or decremented by 1 for bytes or 2 for words. When pushing a value onto the stack, the stack pointer is decremented, which uses the constant -2. Additional constants are required to increment and decrement the IND register when accessing words from unaligned (odd) addresses. These increment/decrement values are selected in the upper part of the Constant ROM. In total, the Constant ROM holds values from -6 to +2.

Policy

There are some "policy" decisions on prefetching, and it's interesting to see how the 8086 implements them. Prefetching is not free: there is a tradeoff when performing a prefetch between saving time later versus delaying memory accesses from an executing instruction. Moreover, if a jump operation takes place, the prefetch queue is discarded and the memory cycles were wasted. Thus, the length of the queue is an "extremely tricky design problem, because performance can deteriorate if the queue is too long as well as if it is too short."3

Intel performed simulations to determine the best queue length. A 4-byte queue provided a large benefit, while a 6-byte queue (which they chose) was slightly better. The designers were surprised to find that performance flattened out after that; they expected a much longer queue would be necessary. The 8088 processor has only a 4-byte prefetch queue because its 8-bit bus changes the tradeoffs.8

The basic prefetch policy is that if a memory access and a prefetch are requested at the same time, the memory access "wins", since it is guaranteed to be useful while the prefetch is just speculative. If the queue holds 0 to 2 bytes, prefetch happens during the next free memory cycle. If the queue holds 5 or 6 bytes, no prefetch can happen, since prefetch happens a word at a time. However, if the queue holds 3 or 4 bytes, prefetch is delayed for two clock cycles, which is an interesting choice. This gives an instruction more opportunities to perform a memory operation without being delayed by a prefetch. The tradeoff is that delaying the prefetch may waste two cycles of memory bandwidth, while performing the prefetch might waste four cycles of memory bandwidth. The motivation for this delay is that the last two bytes in the queue are less valuable because they are more likely to be discarded.
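The policy can be summarized as a small decision function. This Python sketch is just my restatement of the rules above; the function name and the returned labels are made up for illustration, and the real logic is spread across the prefetch and memory-cycle circuitry.

```python
def prefetch_decision(queue_bytes: int, memory_access_pending: bool) -> str:
    """Decide what the prefetcher does, given the queue length (0..6 bytes)."""
    if queue_bytes >= 5:
        return "none"       # no room: prefetches happen a word at a time
    if memory_access_pending:
        return "wait"       # the instruction's own memory access wins
    if queue_bytes >= 3:
        return "delay"      # 3 or 4 bytes: hold off for two clock cycles
    return "prefetch"       # 0 to 2 bytes: use the next free memory cycle


for n in range(7):
    print(n, prefetch_decision(n, memory_access_pending=False))
```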

Another policy decision is how to handle a change in execution flow, such as a jump or subroutine call. The 8086 simply discards the prefetch queue and starts fetching from the new address. The 8086 designers considered better ways of handling jumps, but it wasn't practical to implement at the time. There is no intelligence if the instructions are already in the queue (e.g. jumping forward a couple of bytes). There is also no branch prediction; prefetching proceeds linearly regardless of branch instructions.

The 8086 does nothing to ensure consistency between the prefetch queue and memory if a prefetched instruction is modified in memory.9 In this case, the "stale" instruction in the queue is executed. This situation may seem contrived, but self-modifying code used to be fairly popular, where a program would change its own instructions.10

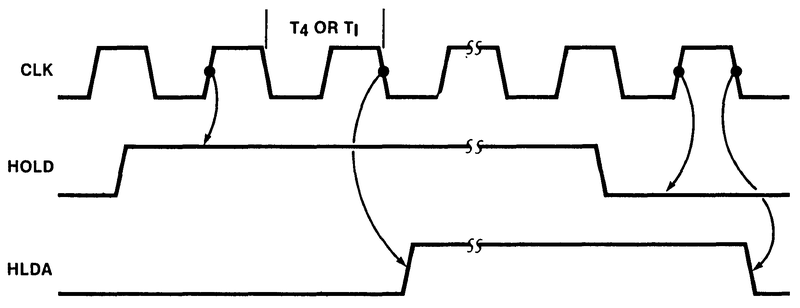

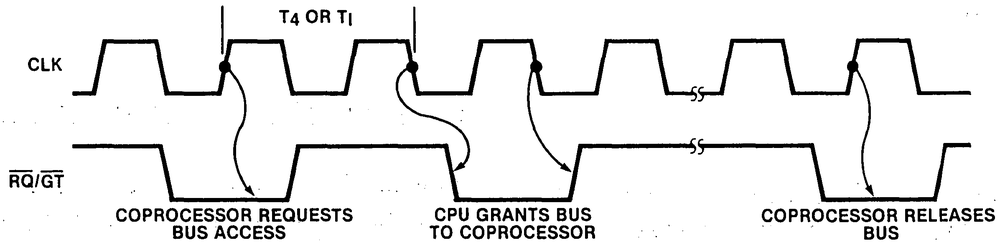

Prefetching and the 8087 coprocessor

One feature of the 8086 microprocessor is that it supports coprocessors such as the 8087 floating point chip.11 The 8087 implements high-performance floating-point computation, performing arithmetic and transcendental computations up to 100 times faster than the 8086. The 8087 gets instructions in an interesting fashion, executing floating-point instructions from the 8086's instruction stream. Specifically, an "ESCAPE" opcode indicates an instruction that is performed by the 8087 rather than the 8086. However, prefetching adds a lot of complexity to the coprocessor because the 8087 monitors the bus to determine when it should execute an instruction. With prefetching, the instruction on the bus doesn't match the instruction being executed. An instruction may be executed many cycles after it was fetched over the bus. A prefetched instruction may even be discarded and never executed.

To solve this problem, the 8087 manages its own copy of the prefetch queue to determine when the 8086 would be executing a floating-point instruction. The 8087 watches the bus to see when instructions are prefetched. The 8086 provides queue status signals (QS0 and QS1) to indicate when it takes bytes from the queue or flushes the queue. These signals allow the 8087 coprocessor to keep track of the 8086's queue state so it can tell what instruction the 8086 is executing. In other words, the 8086 doesn't tell the 8087 coprocessor what to do; instead, the two chips process the instruction stream in parallel. Another complication is the 8087 coprocessor can be used with the 8088 processor chip, which has a smaller 4-byte queue. Thus, the 8087 coprocessor must detect whether it is connected to an 8086 or an 8088 and maintain its queue appropriately.
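To illustrate the idea of a shadow queue, here is a simplified Python sketch of a coprocessor-side model. The class and method names are hypothetical and this is not how the 8087 is actually built. The queue status encoding follows Intel's 8086 datasheet (00 no operation, 01 first opcode byte, 10 queue emptied, 11 subsequent byte), and ESCAPE opcodes are the range D8 through DF. For simplicity, the caller is assumed to pass only the usable bytes of each fetch, so the odd-address case is ignored.

```python
from collections import deque

# Queue status codes (QS1, QS0), per the 8086 datasheet.
QS_NOP, QS_FIRST, QS_FLUSH, QS_SUBSEQUENT = 0b00, 0b01, 0b10, 0b11


class ShadowQueue:
    """Mirror of the CPU's prefetch queue, updated only from bus observations."""

    def __init__(self, capacity: int = 6) -> None:   # 6 for an 8086, 4 for an 8088
        self.capacity = capacity
        self.pending = deque()

    def on_code_fetch(self, fetched: bytes) -> None:
        """Record instruction bytes seen on the bus during a prefetch."""
        self.pending.extend(fetched)
        assert len(self.pending) <= self.capacity

    def on_queue_status(self, qs: int):
        """Return the opcode byte when the CPU starts an instruction, else None."""
        if qs == QS_FLUSH:
            self.pending.clear()      # a jump discarded the prefetched bytes
            return None
        if qs == QS_NOP:
            return None
        byte = self.pending.popleft()
        return byte if qs == QS_FIRST else None


def is_escape(opcode: int) -> bool:
    """True for the ESCAPE opcodes (binary 11011xxx) used by the coprocessor."""
    return (opcode & 0xF8) == 0xD8


sq = ShadowQueue()
sq.on_code_fetch(bytes([0x90, 0xD9]))       # a NOP followed by an ESCAPE opcode
print(is_escape(sq.on_queue_status(QS_FIRST)))   # False
print(is_escape(sq.on_queue_status(QS_FIRST)))   # True
```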

Brief history

Caching and prefetching were used in mainframe computers dating back to the 1960s. For instance, the IBM System/360 Model 91 (1966) had a cache with prefetching. Minicomputers such as the VAX 11/780 (1977) later used caching and prefetching. However, these features took a while to trickle down to microprocessors. The Motorola 68000 (1979) had a 4-byte prefetch queue. As far as I can tell, the 8086 was the first microprocessor with a prefetch queue.

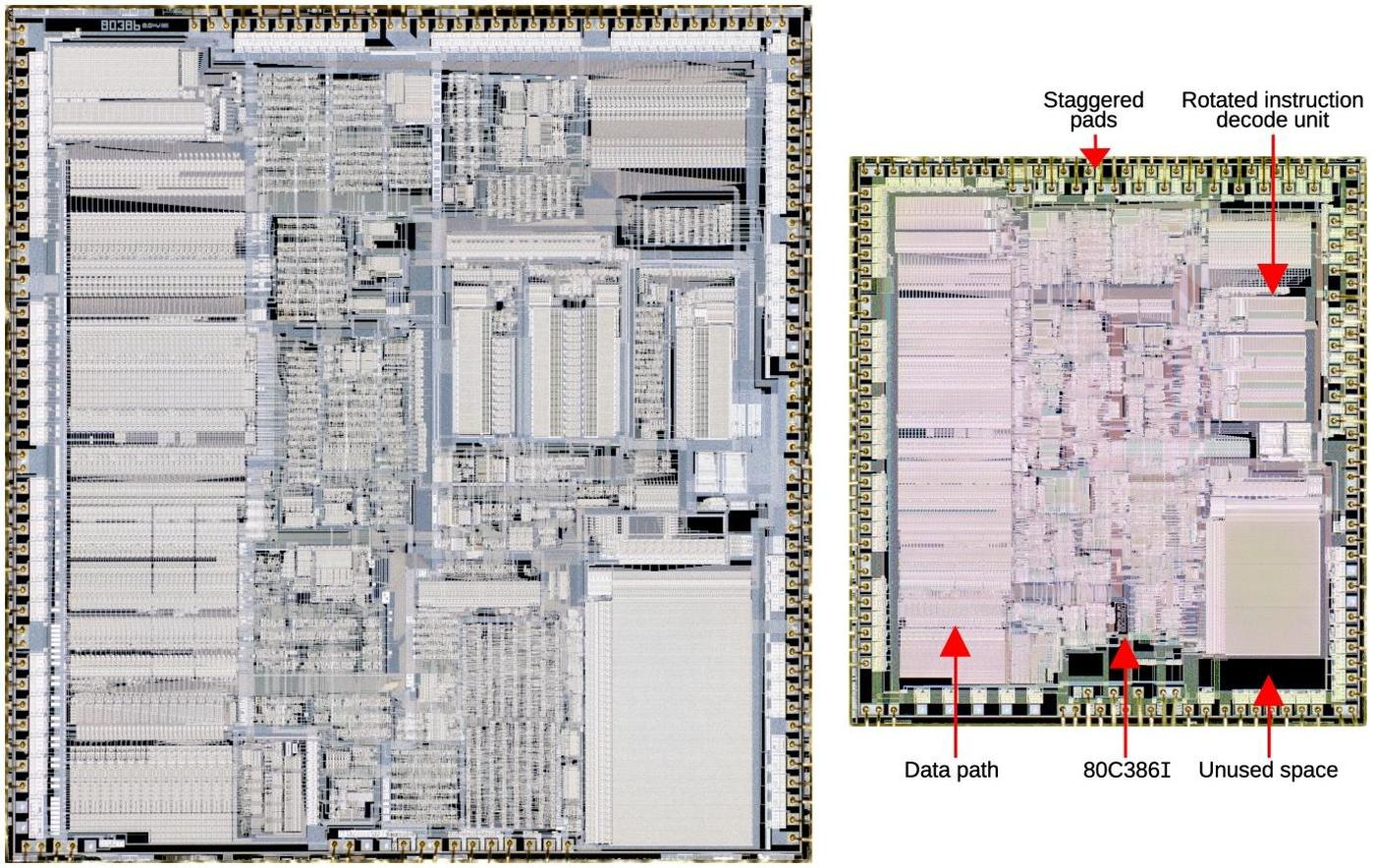

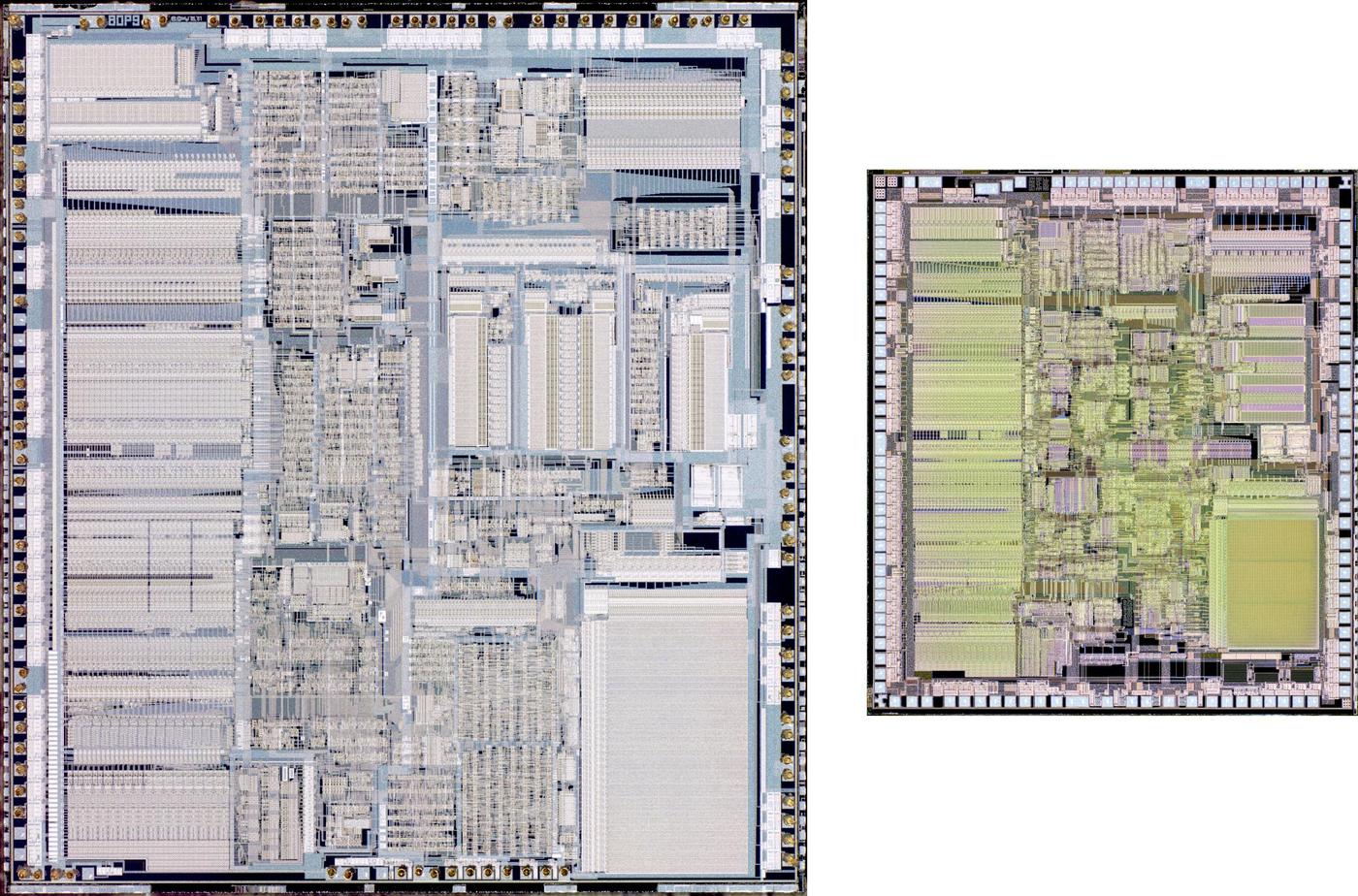

We can view the 8086 as a stepping-stone towards the large caches first used externally in the 80386 and internally in the 486. The 80186 and 80286 kept the 6-byte prefetch buffer size of the 8086. The 80386 has a 16-byte prefetch buffer, although apparently due to a bug it was shrunk to 12 bytes in later revisions. As well as the prefetch queue, the 80386 supported an external cache.

Early microprocessors such as the 6502 or Z80 could fetch the next instruction while they were finishing the previous instruction. This minimal two-stage pipelining improved performance, but was much more limited than 8086-style prefetching. An Intel study found that this simple overlap provided a 35% performance increase with 15% more hardware, while implementing prefetching provided an additional 11% gain with 14% more hardware.3 This illustrates how the increasing transistor counts from Moore's law opened up new opportunities to improve performance. But it also shows diminishing returns as performance increases become smaller and more expensive.

Conclusions

Well, this was supposed to be a quick post about the prefetch queue, but the topic turned out to have a lot more complexity than I expected. A six-byte prefetch queue may seem like a simple feature to add to a processor, but it affects many parts of the system. Prefetching is tied closely to the memory access circuitry, of course, but it also required a Constant ROM to handle the difference between the execution address and the prefetch address. Prefetching also impacted the microcode, with three micro-instructions to support prefetching.

Prefetching also illustrates some of the ways that each feature and corner case of a processor like the 8086 leads to more complexity. For instance, byte-aligned (rather than word-aligned) instructions require a mechanism to fetch bytes as well as words. Supporting multiple instruction formats (1-byte opcodes, an opcode byte followed by a Mod R/M byte, multi-byte instructions) resulted in the loader state machine. The segment registers required an adder to compute the memory address for every access. Looking at the 8086 internals makes it easier to understand the motivation behind RISC processors, discarding the complexity and corner cases to create a simpler but faster processor.

I plan to continue reverse-engineering the 8086 die so follow me on Twitter @kenshirriff or RSS for updates. I've also started experimenting with Mastodon recently as @oldbytes.space@kenshirriff. If you're interested in the 8086, I wrote about the 8086 die, its die shrink process and the 8086 registers earlier.

Notes and references

-

Steve Furber, co-creator of the ARM chip, mentions that "The first integrated CPUs were coincidentally quite well matched to semiconductor memory speeds, and were therefore built without caches. This can now be seen as a temporary aberration." See VLSI RISC Architecture and Organization, p. 77. To make this concrete, the Apple II (1977) used a MOS 6502 processor running at about 1 megahertz while its 4116 DRAM chips could perform an access in 250 nanoseconds (4 times the clock speed). The 8086 processor ran at 5-10 MHz, which meant that 250 ns DRAM chips were slower than the clock speed. Nowadays, processors run at 4 GHz but DRAM access speed is about 50 nanoseconds (1/200 the clock speed). ↩
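
As a rough check on these ratios, the arithmetic can be sketched in Python. The function name is my own, and the inputs are just the round numbers quoted in this note.

```python
def access_time_in_cycles(clock_mhz, access_ns):
    """Express a memory access time as a number of processor clock cycles."""
    cycle_ns = 1000 / clock_mhz  # length of one clock period in nanoseconds
    return access_ns / cycle_ns

apple_ii = access_time_in_cycles(1, 250)      # 0.25: memory is 4x faster than the clock
i8086_5mhz = access_time_in_cycles(5, 250)    # 1.25: memory is slower than the clock
i8086_10mhz = access_time_in_cycles(10, 250)  # 2.5
modern = access_time_in_cycles(4000, 50)      # 200.0: one access costs 200 cycles

print(apple_ii, i8086_5mhz, i8086_10mhz, modern)
```

A value below 1 means memory keeps up with the processor; a value above 1 means the processor waits, which is the gap that prefetching and caches try to hide.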

-

Modern processors use caches to improve memory performance; caches are often megabytes in size. Accessing data from a cache is faster than accessing it from main memory, but the tradeoff is that caches are smaller. The 8086's prefetch queue is similar to a cache in some ways, but there are some key differences. First, the prefetch queue is strictly sequential. If you jump ahead two bytes, even if the prefetch queue has those instruction bytes, the processor can't use them. Second, the prefetch queue can't reuse bytes. If you have a 6-byte loop, even though all the code fits in the prefetch queue, it will be reloaded every time. Third, the prefetch queue doesn't provide any consistency. If you modify an instruction in memory a couple of bytes ahead of the PC, the 8086 will run the old instruction if it's in the queue. ↩

-

The design decisions for the 8086 prefetch cache (and many other aspects of the chip) are described in: J. McKevitt and J. Bayliss, "New options from big chips," in IEEE Spectrum, vol. 16, no. 3, pp. 28-34, March 1979, doi: 10.1109/MSPEC.1979.6367944. ↩↩↩↩

-

A detailed block diagram of the 8086 is provided in the patent. Conveniently, the layout of the diagram is close to the physical layout of the chip.

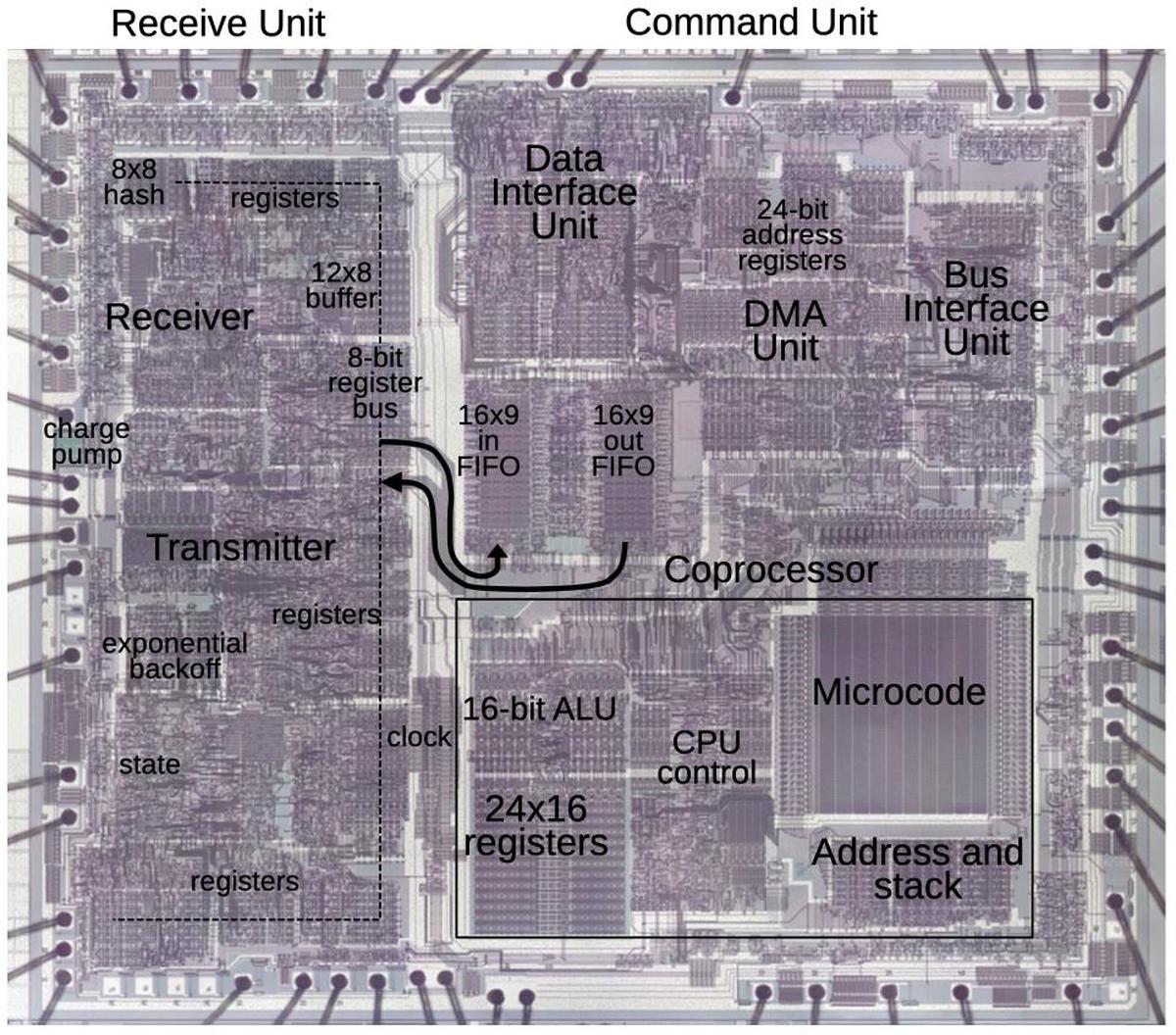

Detailed block diagram of the 8086, based on patent US4449184. I have modified the register names to match the common naming.

I won't discuss this block diagram in detail here, but I'll point out the Q (queue) control logic in the upper center, with its associated read and write pointers. The Q bus connects the queue to various parts of the instruction decoding circuitry.

-

Supporting misaligned memory accesses (i.e. accessing a word at an odd address) adds complexity to the 8086. It's not surprising that many RISC chips prohibit unaligned accesses. On SPARC, for instance, an unaligned access fails with a "bus error", which the Sun programmers out there probably recognize. ARM processors before ARMv7 didn't support unaligned accesses. RISC-V supports misaligned data accesses but not misaligned instructions. ↩

-

The 8086 patent describes how the program counter in the 8086 does not hold the "real" value:

PC is not a real or true program counter in that it does not, nor does any other register within CPU, maintain the actual execution point at any time. PC actually points to the next byte to be input into queue. The real program counter is calculated by instruction whenever a relative jump or call is required by subtracting the number of accessed instructions still remaining unused in queue from PC.

↩

-

The CORR correction operation adds more complexity to the system than you might expect, with synchronization between the Bus Interface Unit and the Execution Unit. Because the correction computation uses the addressing adder, the correction operation must be synchronized with memory accesses that also use the adder. To accomplish this, the Bus Interface Unit waits until any memory operation is finished and then generates two clock cycles of "fake" memory operation, keeping the adder free for the CORR instruction. As a result, the memory control circuitry needs logic to implement this memory cycle. Meanwhile, the microcode engine is stopped until the CORR instruction completes, requiring synchronization circuitry. ↩

-

The 8088 is famous as the processor in the original IBM PC. The 8088 processor is essentially the same as the 8086 except that it has an 8-bit data bus instead of a 16-bit data bus, so it performs memory accesses a byte at a time instead of a word at a time. Internally, the 8088 is nearly identical to the 8086 but there are a few differences in microcode and in the bus circuitry. The most visible difference is that the 8088 has a 4-byte prefetch queue instead of a 6-byte prefetch queue. Simulations showed that a 4-byte queue was sufficient for the 8088. Because it fetches one byte at a time instead of two bytes, the 8088 fills the prefetch queue more slowly and wouldn't get much benefit from the larger queue. I haven't looked at the 8088's prefetch circuitry in detail, so I can't describe it exactly. ↩

-

At some point, Intel implemented consistency between cached instructions and memory. This ensures that self-modifying code will run the latest version of an instruction rather than a stale instruction in the cache. I couldn't determine exactly when this was implemented; various sources say the 486, the Pentium, or the Pentium Pro. (If you have a definitive answer, please let me know.) ↩

-

Self-modifying code can be used as a way to distinguish between the 8086 and 8088 chips in software. Since the 8086 has a 6-byte queue and the 8088 has a 4-byte queue, you can create self-modifying code that will run a prefetched instruction on the 8086 but run the modified instruction on the 8088. ↩
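
The idea can be sketched with a toy model in Python. This is a simplification, not the real detection code: it assumes the queue is completely full when the store happens, ignores bus timing and the 8086's word-at-a-time fetches, and the function name and the byte values (0x90 for the original instruction, 0x40 for the replacement) are my own choices for illustration.

```python
from collections import deque

def executed_byte(queue_size, distance, old=0x90, new=0x40):
    """Return the byte the processor executes at `distance` bytes past a store.

    Assumption: the prefetch queue already holds the next `queue_size` bytes
    when the store overwrites the byte at `distance`.
    """
    memory = bytearray([old] * 16)
    queue = deque(memory[:queue_size])  # snapshot of bytes fetched before the store
    memory[distance] = new              # the store changes memory, not the queue
    byte = None
    for address in range(distance + 1):
        if queue:
            byte = queue.popleft()      # stale copy fetched earlier
        else:
            byte = memory[address]      # fetched after the store, so up to date
    return byte

on_8086 = executed_byte(queue_size=6, distance=5)  # old byte: still in the queue
on_8088 = executed_byte(queue_size=4, distance=5)  # new byte: beyond the queue
print(hex(on_8086), hex(on_8088))
```

In this model, modifying a byte five positions ahead runs the old instruction with a 6-byte queue and the new instruction with a 4-byte queue, which is the difference the detection trick relies on.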

-

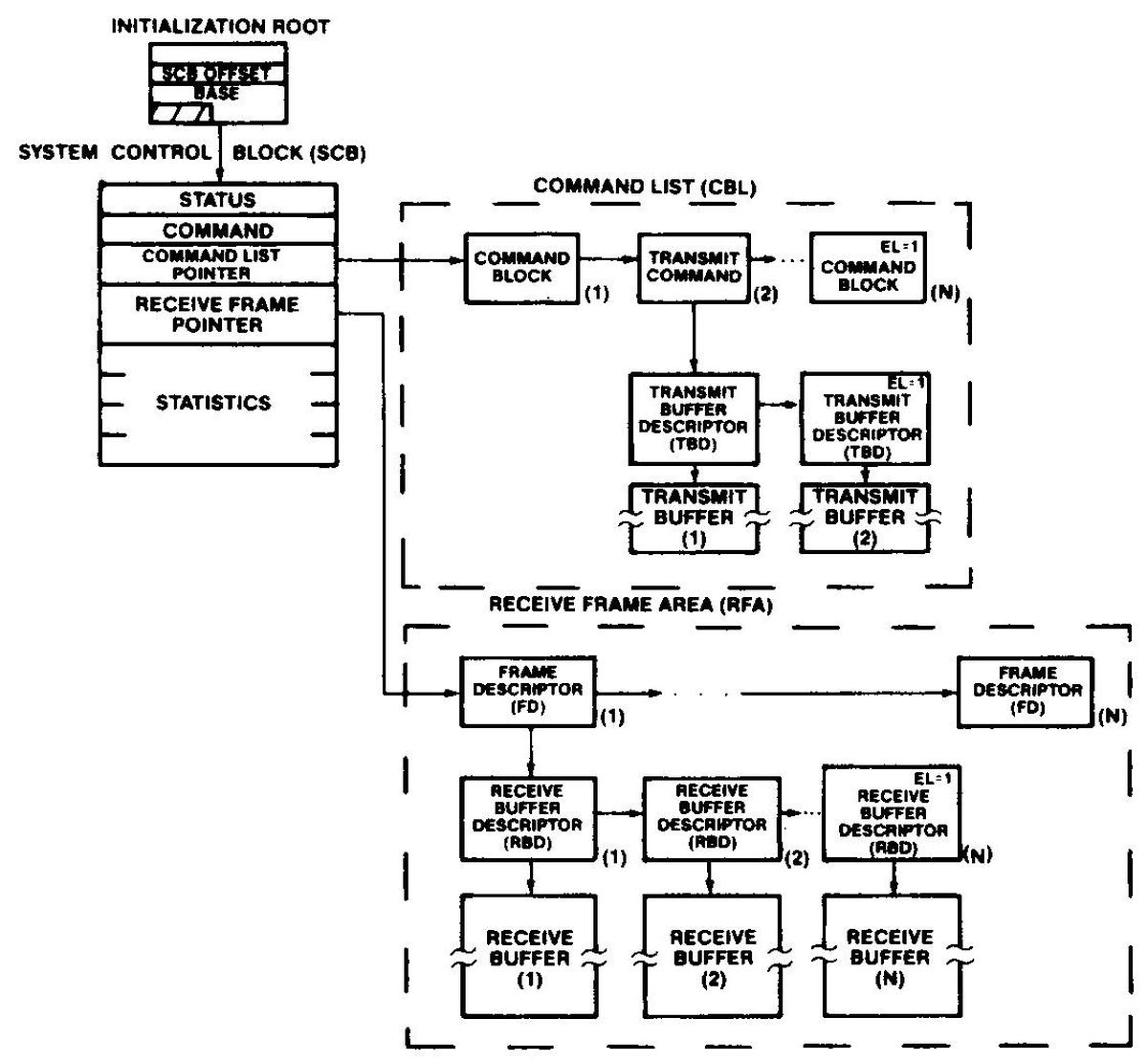

Although the 8087 is the most well-known coprocessor for the 8086, it was not the only coprocessor. The Intel 8089 input/output coprocessor provided mainframe-style I/O channels, offloading I/O processing from the 8086. More than just a DMA engine, the 8089 was a separate processor with its own instruction set. Unlike the 8087, the 8089 didn't take instructions from the 8086's instruction stream so it didn't interact with prefetching; instead, the 8086 sent a Channel Attention signal to the 8089 and the 8089 read instructions from shared memory. The 8089 was complex and expensive and wasn't very popular. The Intel 82586 Ethernet coprocessor used a similar Channel Attention scheme. ↩

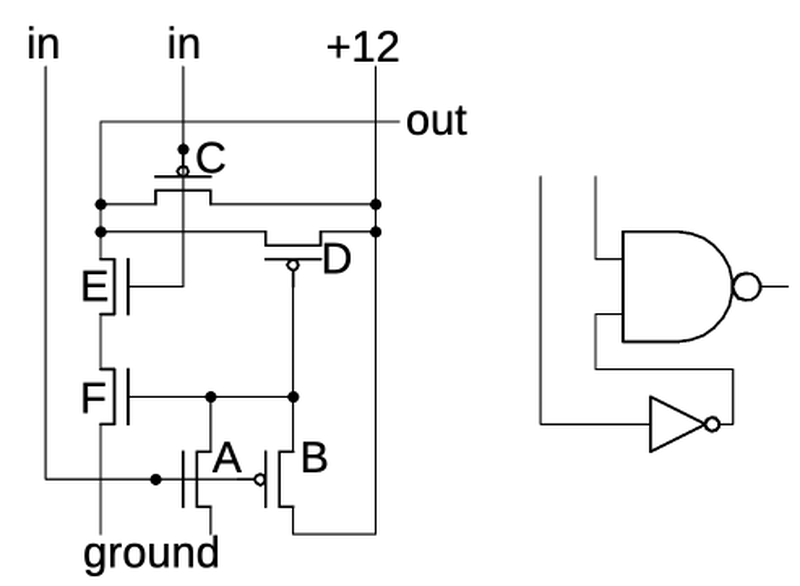

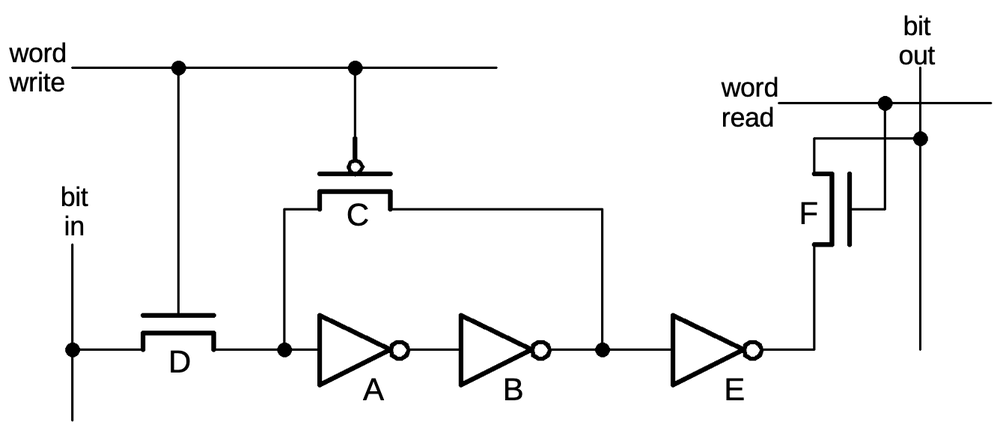

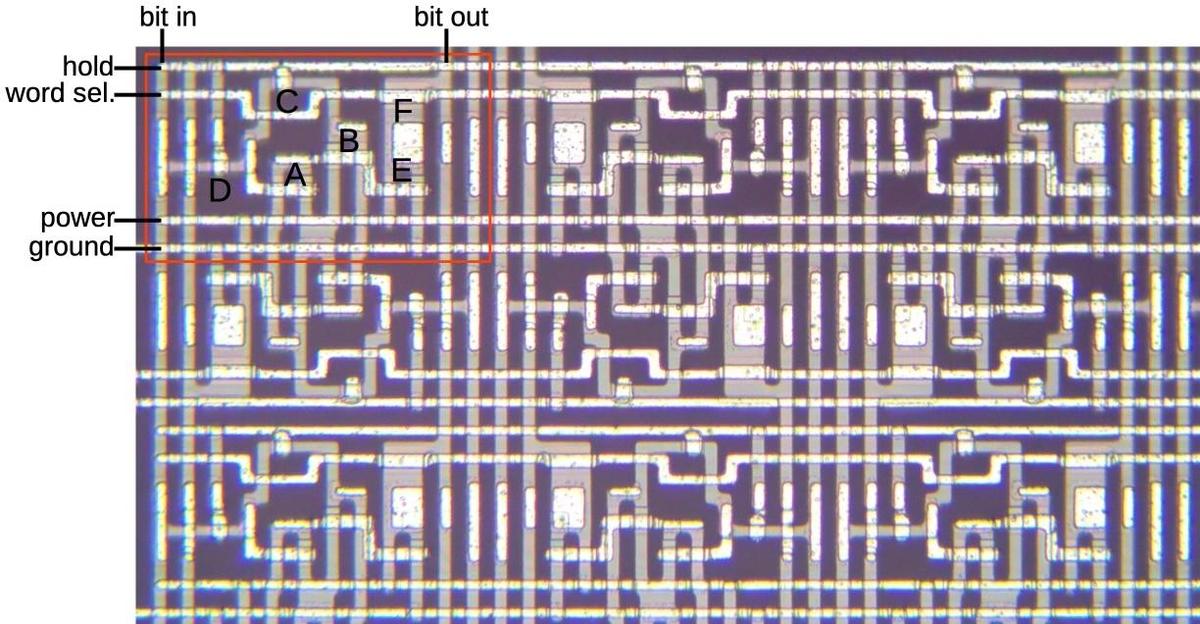

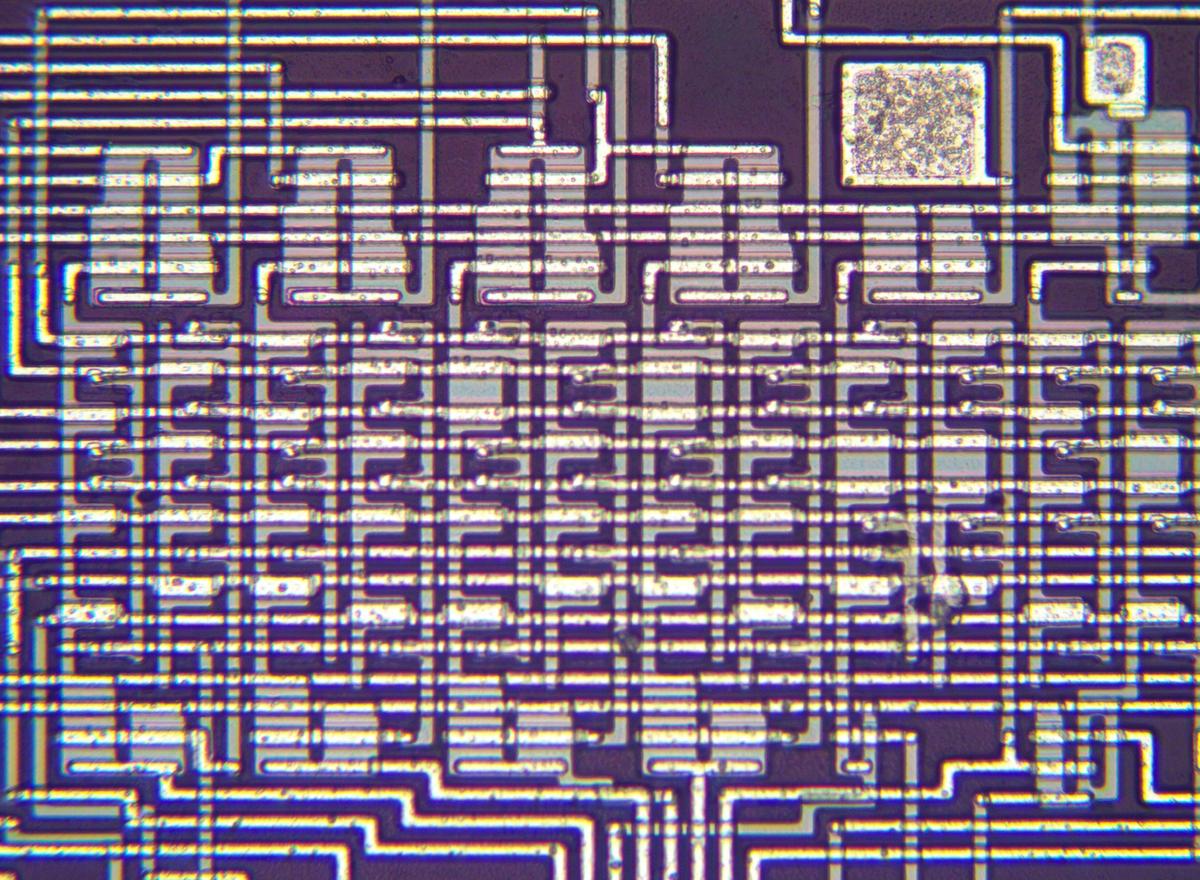

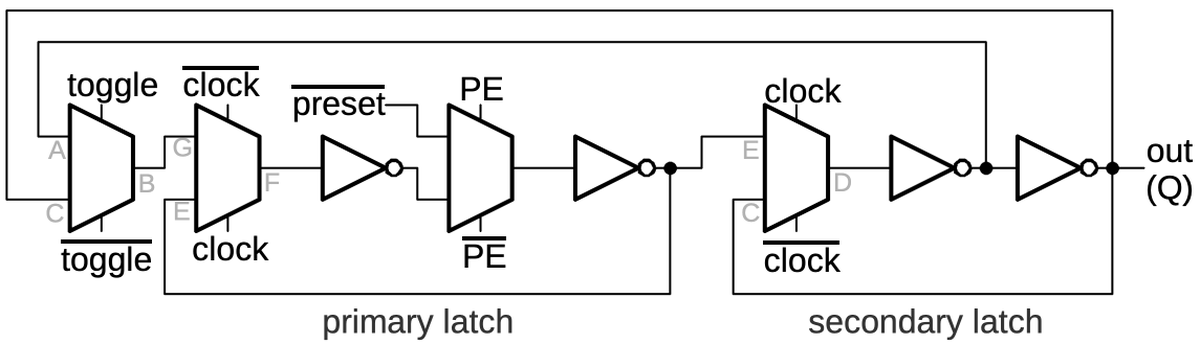

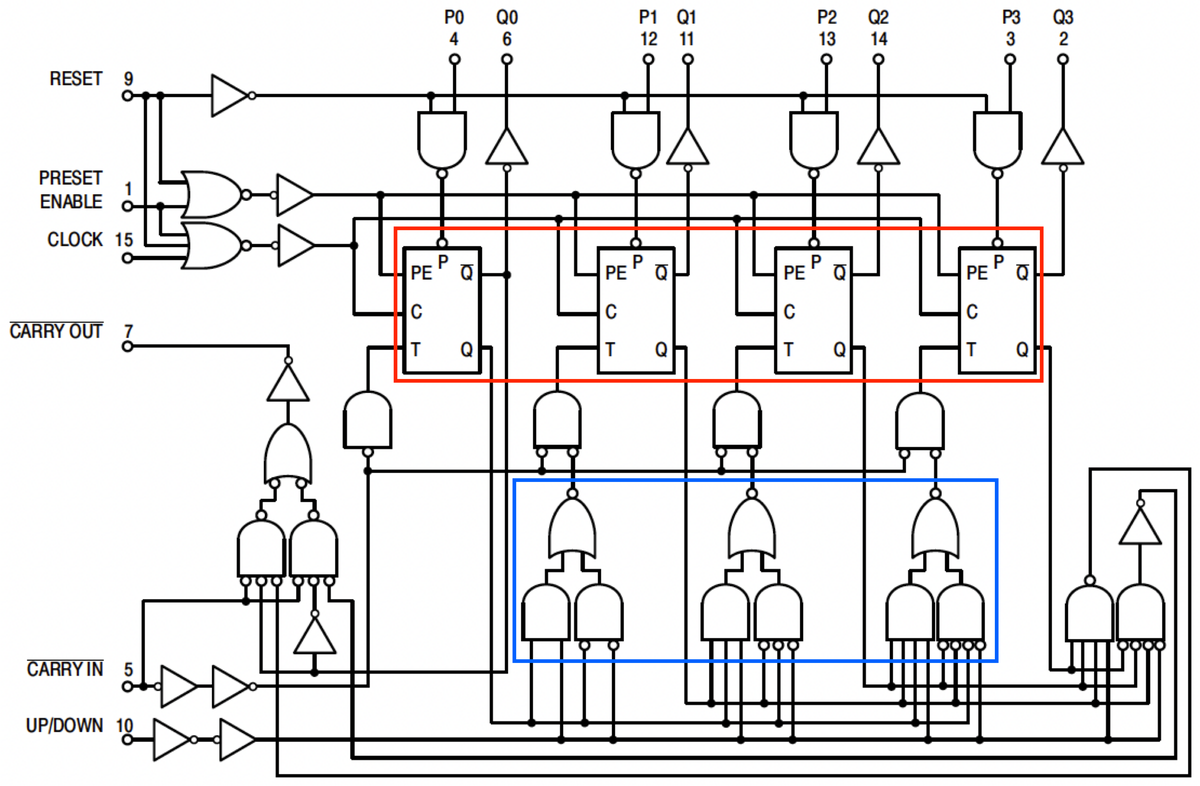

Subject: How the 8086 processor's microcode engine works

The 8086 microprocessor was a groundbreaking processor introduced by Intel in 1978. It led to the x86 architecture that still dominates desktop and server computing. The 8086 chip uses microcode internally to implement its instruction set. I've been reverse-engineering the 8086 from die photos and this blog post discusses how the chip's microcode engine operated. I'm not going to discuss the contents of the microcode1 or how the microcode controls the rest of the processor here. Instead, I'll look at how the 8086 decides what microcode to run, steps through the microcode, handles jumps and calls inside the microcode, and physically stores the microcode. It was a challenge to fit the microcode onto the chip with 1978 technology, so Intel used many optimization techniques to reduce the size of the microcode.

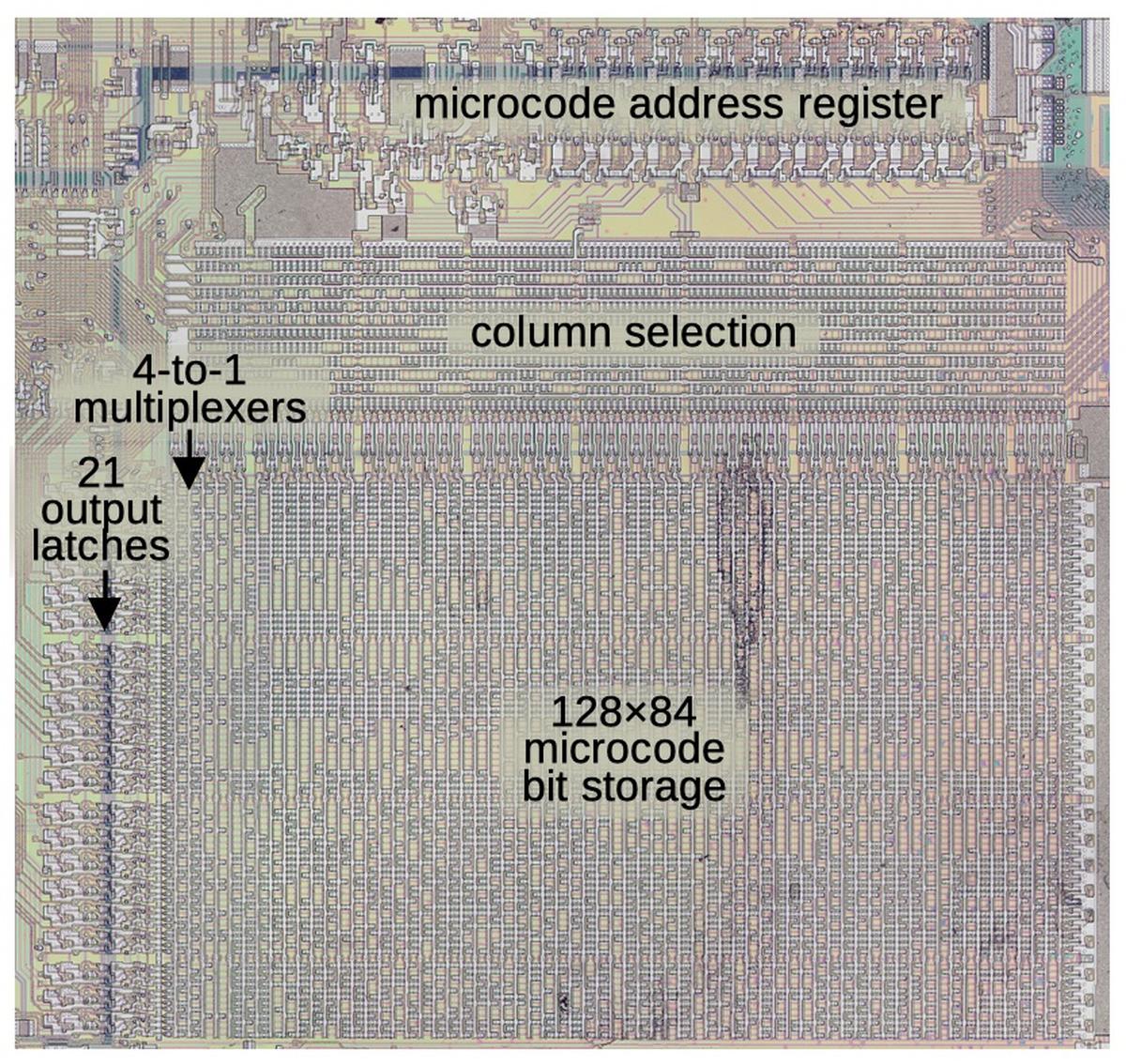

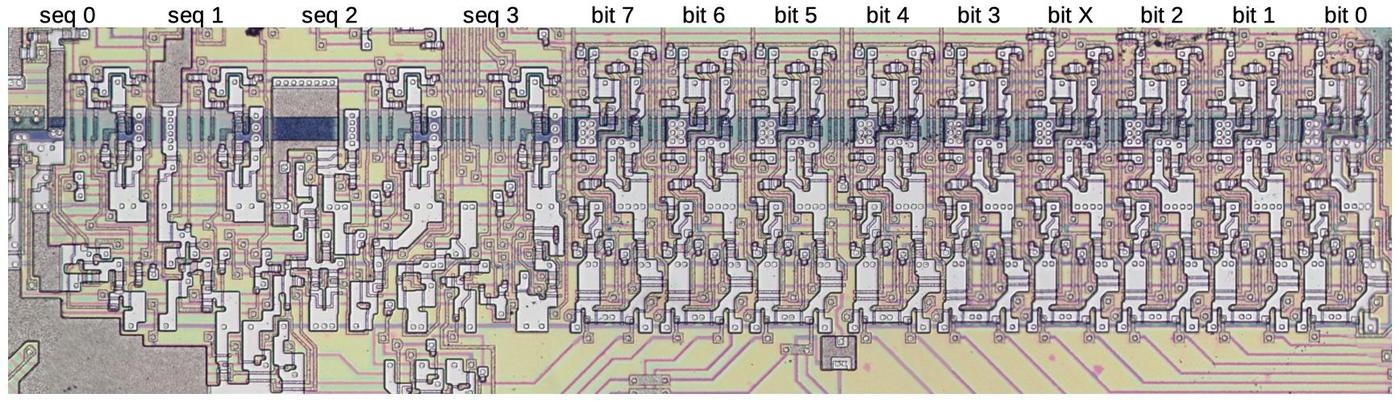

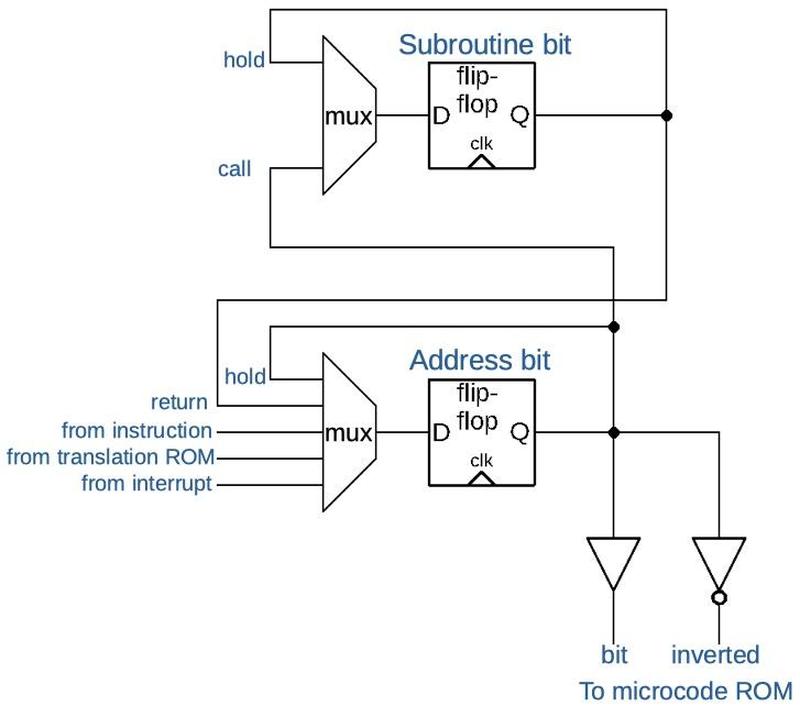

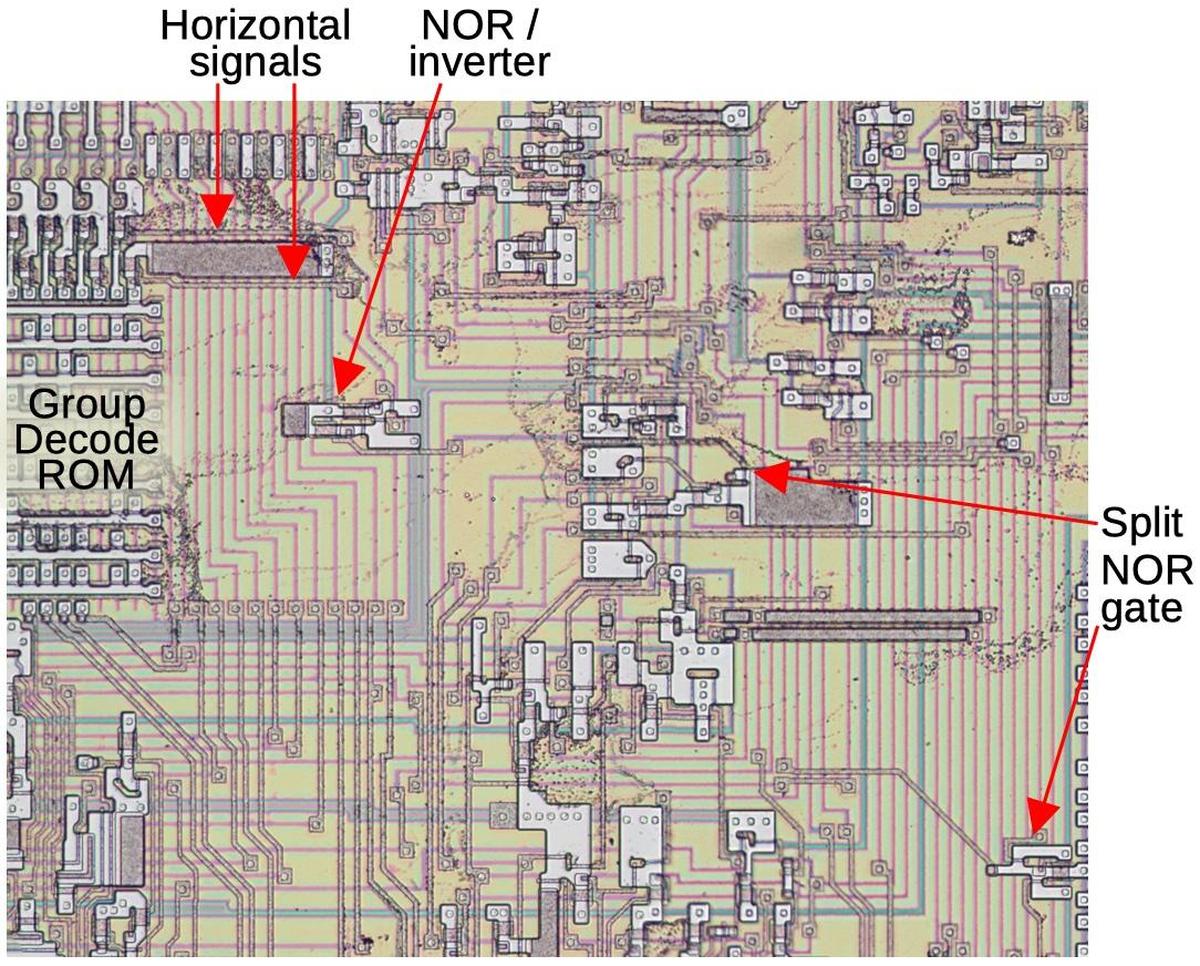

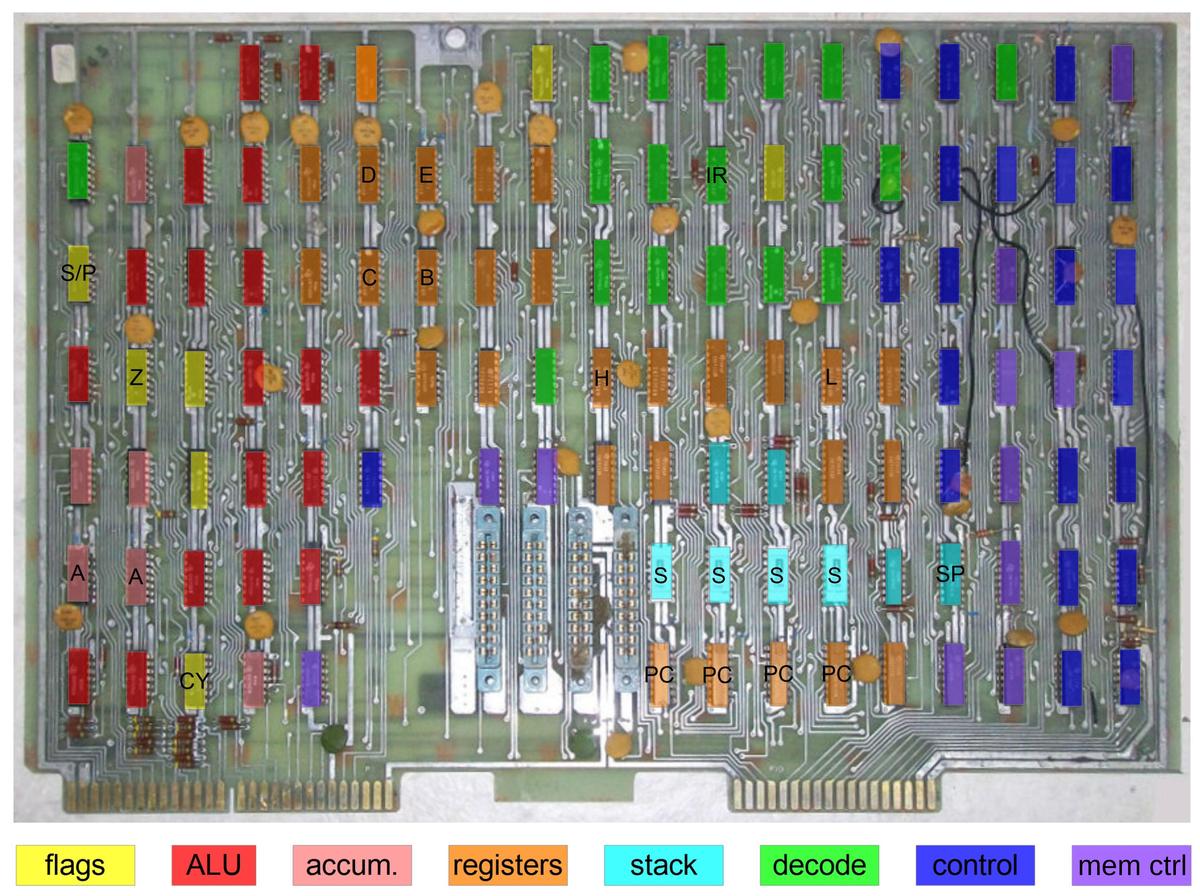

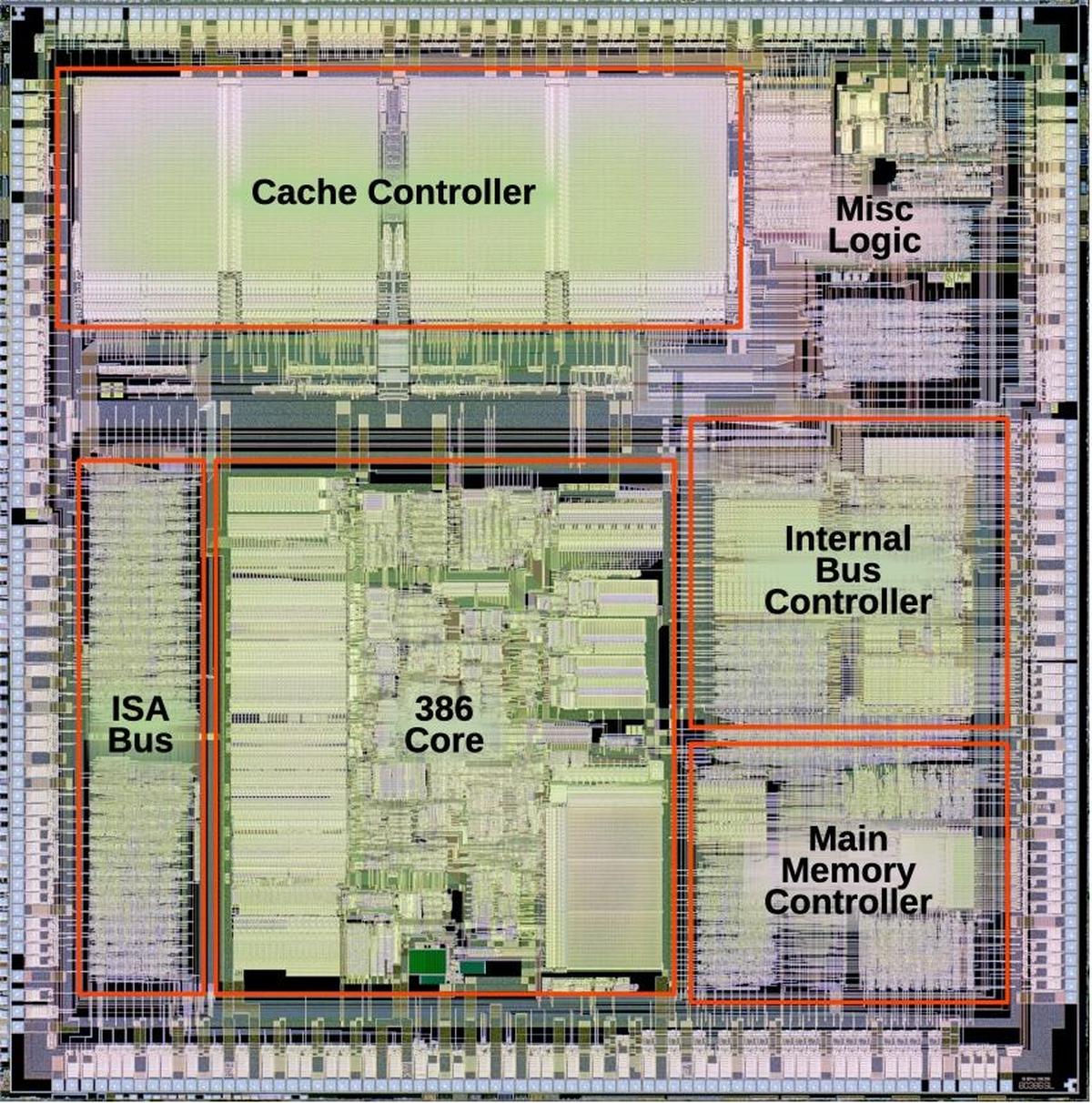

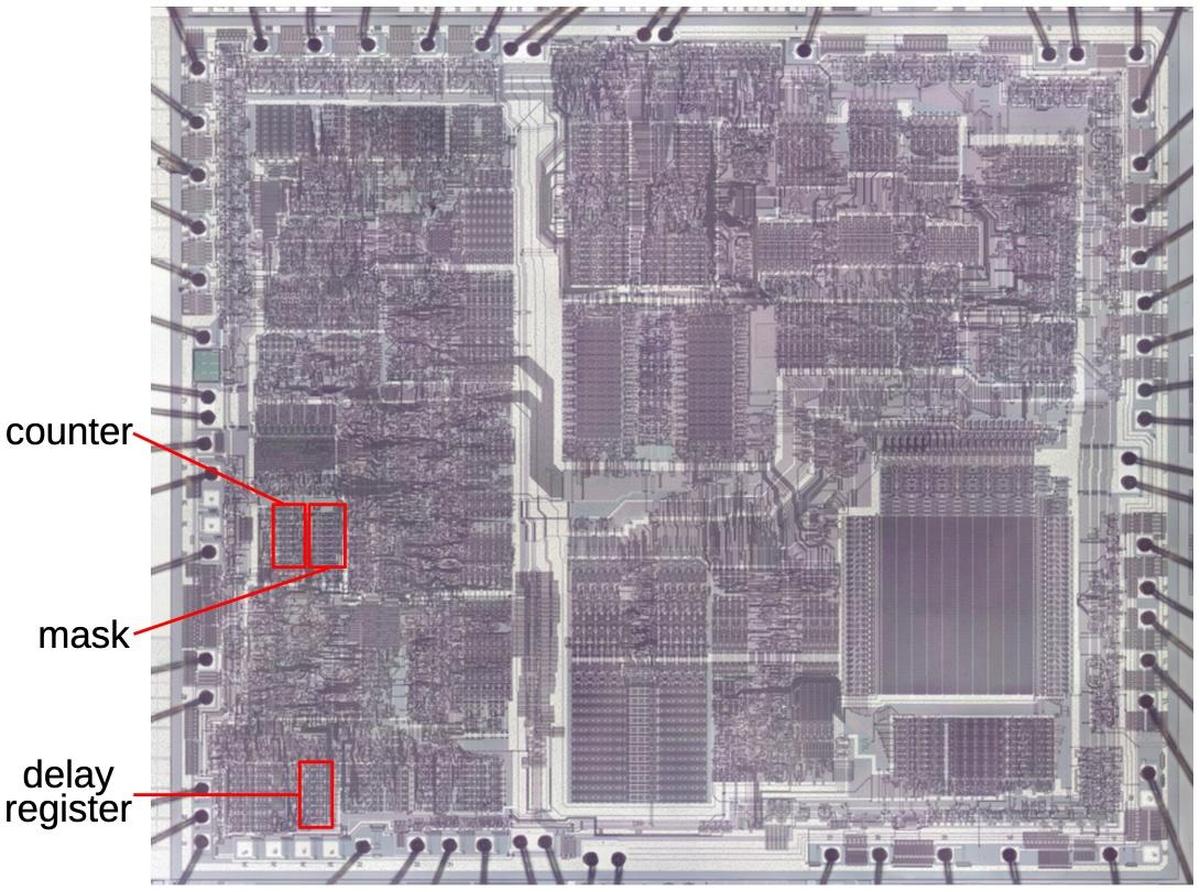

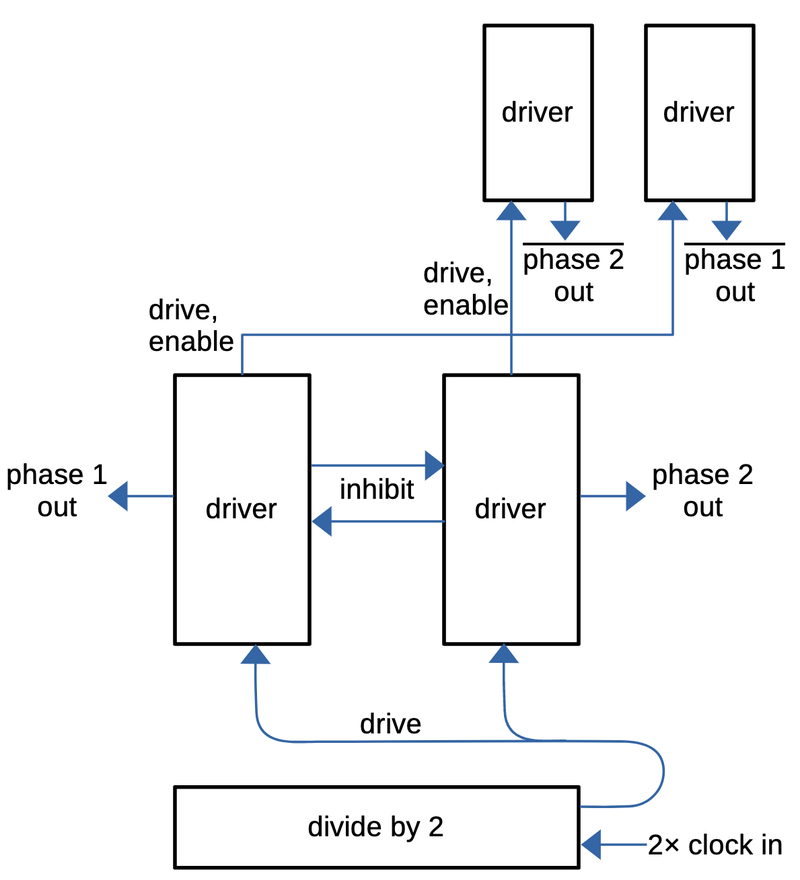

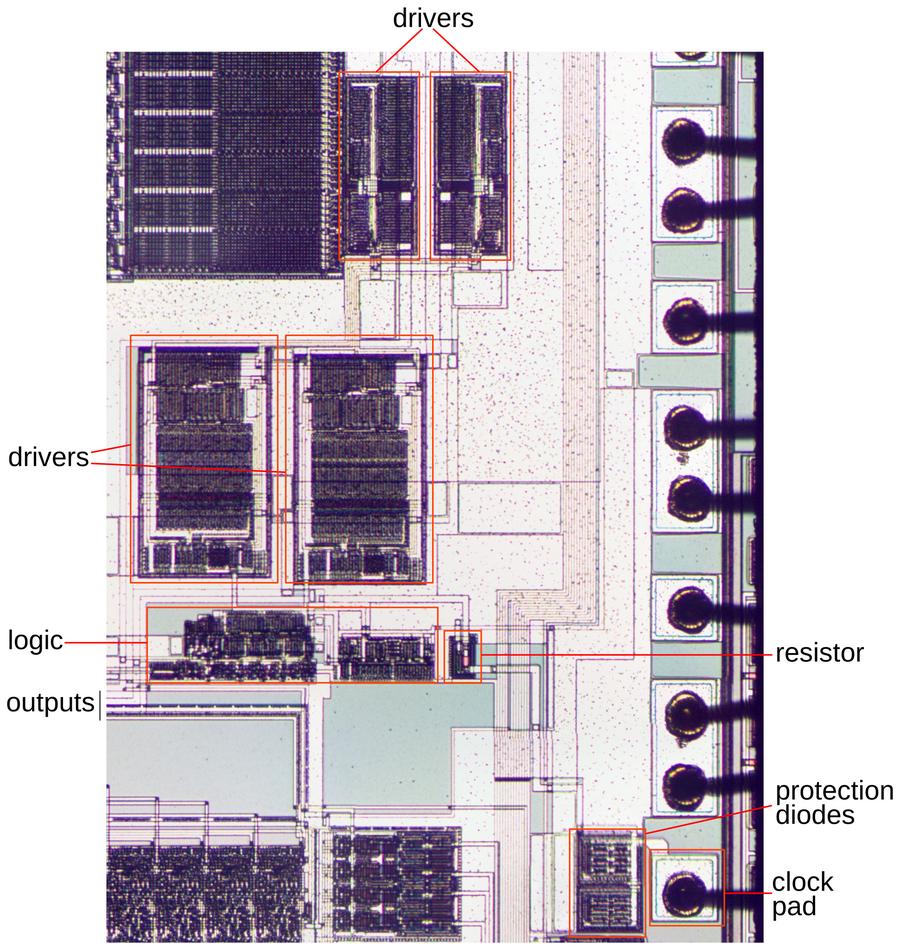

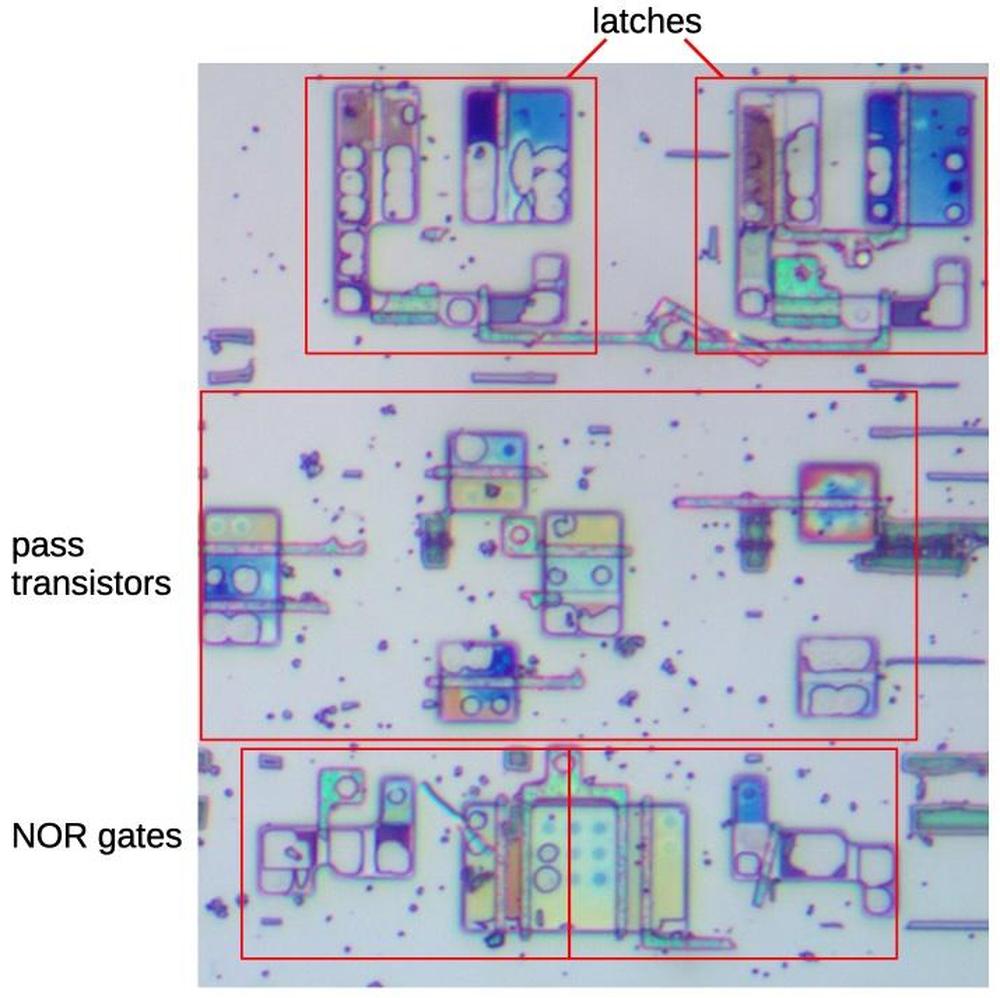

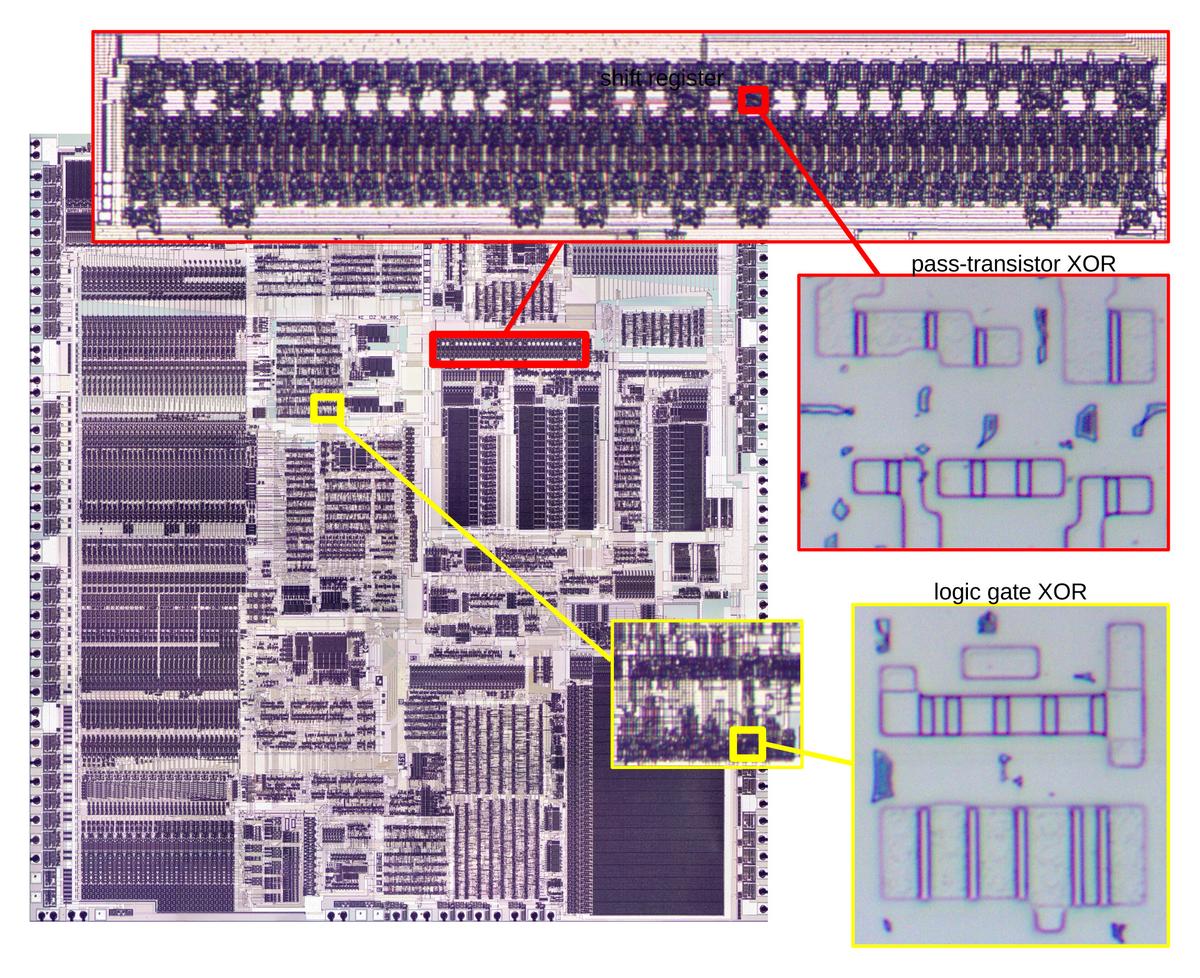

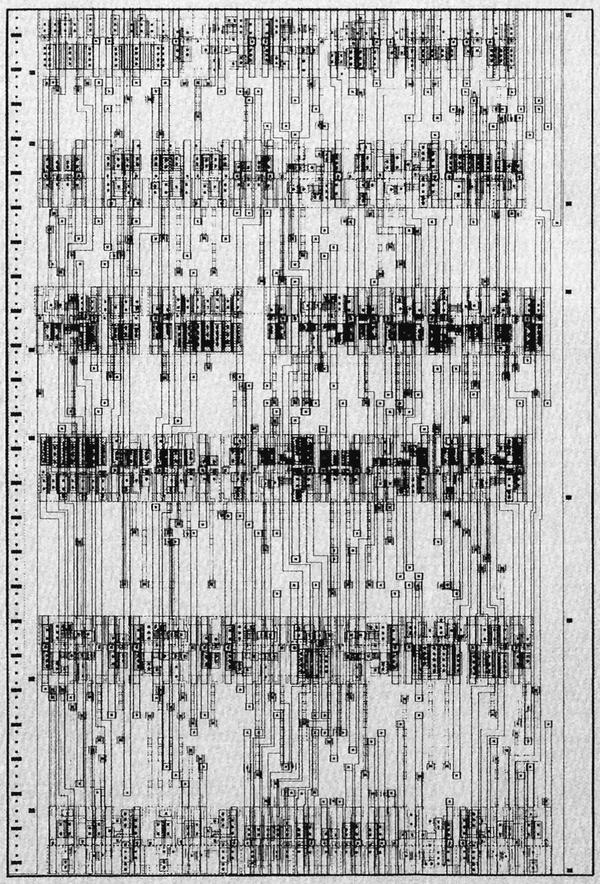

In brief, the microcode in the 8086 consists of 512 micro-instructions, each 21 bits wide. The microcode engine has a 13-bit register that steps through the microcode, along with a 13-bit subroutine register to store the return address for microcode subroutine calls. The microcode engine is assisted by two smaller ROMs: the "Group Decode ROM" to categorize machine instructions, and the "Translation ROM" to branch to microcode subroutines for address calculation and other roles. Physically, the microcode is stored in a 128×84 array. It has a special address decoder that optimizes the storage. The microcode circuitry is visible in the die photo below.
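
The sizes above fit together, as a quick Python check shows. The variable names are mine, and the code only confirms the arithmetic; it says nothing about how the bits are physically interleaved in the array.

```python
MICRO_INSTRUCTIONS = 512
BITS_PER_MICRO_INSTRUCTION = 21
ARRAY_DIMENSIONS = (128, 84)
ADDRESS_BITS = 13

total_bits = MICRO_INSTRUCTIONS * BITS_PER_MICRO_INSTRUCTION
array_bits = ARRAY_DIMENSIONS[0] * ARRAY_DIMENSIONS[1]
address_space = 2 ** ADDRESS_BITS

print(total_bits, array_bits)             # both 10752
print(ARRAY_DIMENSIONS[1] // BITS_PER_MICRO_INSTRUCTION)  # 4 micro-instruction widths
print(address_space, MICRO_INSTRUCTIONS)  # 8192 addresses for 512 stored words
```

The last line shows why the address decoder matters: a 13-bit address can name 8192 locations, but only 512 micro-instructions are physically stored.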

What is microcode?

Machine instructions are generally considered the basic steps that a computer performs. However, each instruction usually requires multiple operations inside the processor. For instance, an ADD instruction may involve computing the memory address, accessing the value, moving the value to the Arithmetic-Logic Unit (ALU), computing the sum, and storing the result in a register. One of the hardest parts of computer design is creating the control logic that signals the appropriate parts of the processor for each step of an instruction. The straightforward approach is to build a circuit from flip-flops and gates that moves through the various steps and generates the control signals. However, this circuitry is complicated and error-prone.

In 1951, Maurice Wilkes came up with the idea of microcode: instead of building the control circuitry from complex logic gates, the control logic could be replaced with another layer of code (i.e. microcode) stored in a special memory called a control store. To execute a machine instruction, the computer internally executes several simpler micro-instructions, specified by the microcode. In other words, microcode forms another layer between the machine instructions and the hardware. The main advantage of microcode is that it turns the processor's control logic into a programming task instead of a difficult logic design task. Microcode also permits complex instructions and a large instruction set to be implemented without making the processor more complex (apart from the size of the microcode). Finally, it is generally easier to fix a bug in microcode than in circuit logic.
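
To make the idea concrete, here is a toy control store in Python. It is a made-up machine, not the 8086: the instruction name, register names, and micro-operations are all hypothetical, chosen to mirror the ADD steps described earlier.

```python
# Toy control store: each machine instruction maps to a list of micro-operations.
CONTROL_STORE = {
    "ADD_FROM_MEMORY": [
        ("move", "operand_address", "memory_address"),  # compute/select the address
        ("read",),                                      # access the value
        ("move", "memory_data", "alu_b"),               # move the value to the ALU
        ("move", "accumulator", "alu_a"),
        ("add",),                                       # compute the sum
        ("move", "alu_out", "accumulator"),             # store the result
    ],
}

def execute(instruction, registers, memory):
    """Run one machine instruction by stepping through its micro-operations."""
    for micro_op in CONTROL_STORE[instruction]:
        kind = micro_op[0]
        if kind == "move":
            _, source, destination = micro_op
            registers[destination] = registers[source]
        elif kind == "read":
            registers["memory_data"] = memory[registers["memory_address"]]
        elif kind == "add":
            registers["alu_out"] = (registers["alu_a"] + registers["alu_b"]) & 0xFFFF
    return registers

registers = {"accumulator": 5, "operand_address": 2}
memory = [0, 0, 7]
execute("ADD_FROM_MEMORY", registers, memory)
print(registers["accumulator"])  # 12
```

Adding a new machine instruction to this toy means adding an entry to the table rather than designing new logic, which is the appeal of microcode.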

Early computers didn't use microcode, largely due to the lack of good storage technologies to hold the microcode. This changed in the 1960s; for example IBM made extensive use of microcode in the System/360 (1964). (I've written about that here.) But early microprocessors didn't use microcode, returning to hard-coded control logic with logic gates.3 This logic was generally more compact and ran faster than microcode, since the circuitry could be optimized. Since space was at a premium in early microprocessors and the instruction sets were relatively simple, this tradeoff made sense. But as microprocessor instruction sets became complex and transistors became cheaper, microcode became appealing. This led to the use of microcode in the Intel 8086 (1978) and 8088 (1979) and Motorola 68000 (1979), for instance.2

The 8086's microcode

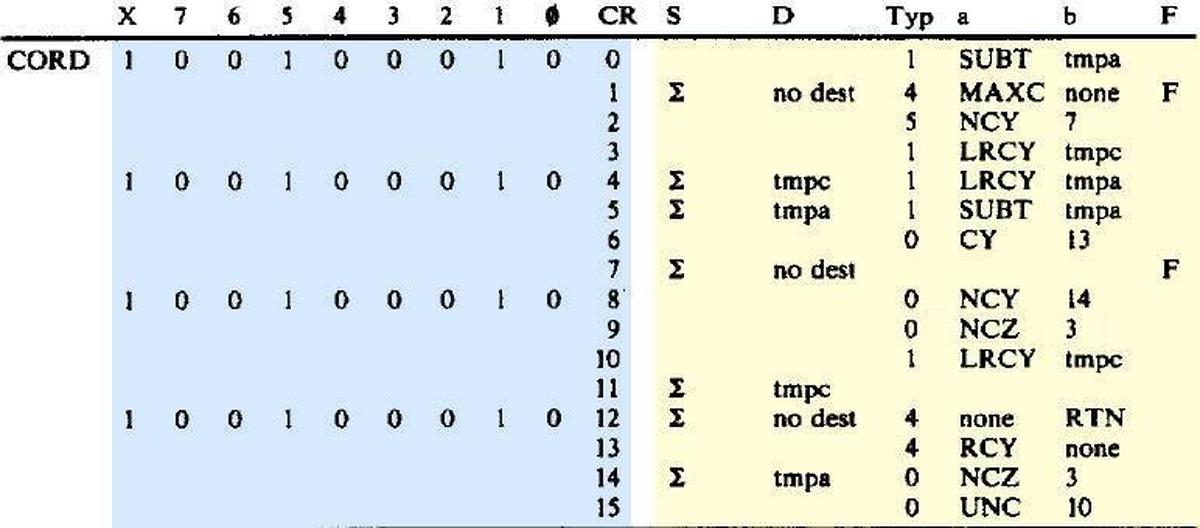

The 8086's microcode is much simpler than in most processors, but it's still fairly complex. The code below is the 8086's microcode for a routine called "CORD", part of integer division, consisting of 16 micro-instructions. I'm not going to explain how this microcode works in detail, but I want to give a flavor of it. Each line has an address on the left (blue) and the micro-instruction on the right (yellow), specifying the low-level actions during one time step (i.e. clock cycle). Each micro-instruction performs a move, transferring data from a source register (S) to a destination register (D). (The source Σ indicates the ALU output.) For parallelism, the micro-instruction performs an operation or two at the same time as the move. This operation is specified by the "a" and "b" fields; their meanings depend on the type field. For instance, type 1 indicates an ALU instruction such as subtract (SUBT) or left-rotate through carry (LRCY). Type 4 selects two general operations such as "RTN" which returns from a microcode subroutine. Type 0 indicates a jump operation; "UNC 10" is an unconditional jump to line 10 while "CY 13" jumps to line 13 if the carry flag is set. Finally, the "F" field indicates if the condition code flags should be updated. The key points are that the micro-instructions are simple and execute in one clock cycle, they can perform multiple operations in parallel to maximize performance, and they include control-flow operations such as conditional jumps and subroutines.

Each micro-instruction is stored at a 13-bit address (blue) which consists of 9 bits shown explicitly and a 4-bit sequence counter "CR". The eight numbered address bits usually correspond to the machine instruction's opcode. The "X" bit is an extra bit to provide more address space for code that is not directly tied to a machine instruction, such as reset and interrupt code, address computation, and the multiply/divide algorithms.
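
As a sketch, the 13-bit address can be modeled in Python as three packed fields. The function names are mine, and placing the X bit at the top of the address is an assumption for illustration; the text only states that the counter occupies the low 4 bits.

```python
def make_micro_address(x_bit, opcode_bits, counter):
    """Pack the X bit, 8 opcode-related bits, and the 4-bit CR counter."""
    if x_bit not in (0, 1) or not 0 <= opcode_bits <= 0xFF or not 0 <= counter <= 0xF:
        raise ValueError("field out of range")
    # Assumed layout: X in bit 12, opcode bits in bits 11-4, counter in bits 3-0.
    return (x_bit << 12) | (opcode_bits << 4) | counter

def split_micro_address(address):
    """Unpack a 13-bit micro-address into (x_bit, opcode_bits, counter)."""
    return (address >> 12) & 1, (address >> 4) & 0xFF, address & 0xF

print(hex(make_micro_address(0, 0x05, 0)))   # 0x50
print(split_micro_address(0x100F))           # (1, 0, 15)
```

With X set to 1, the same eight bits no longer have to mean an opcode, which gives the microcode a second set of addresses for routines such as reset or multiply/divide.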

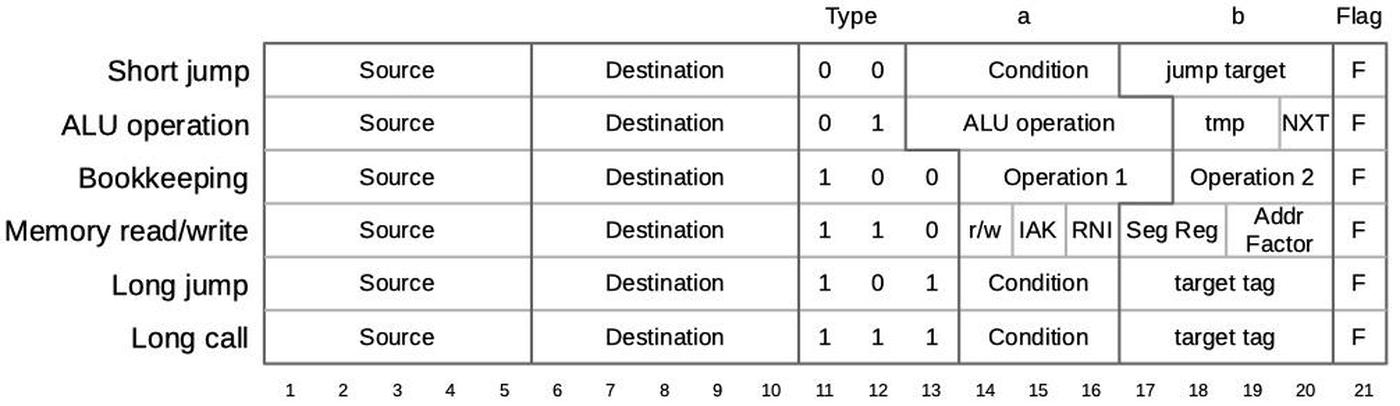

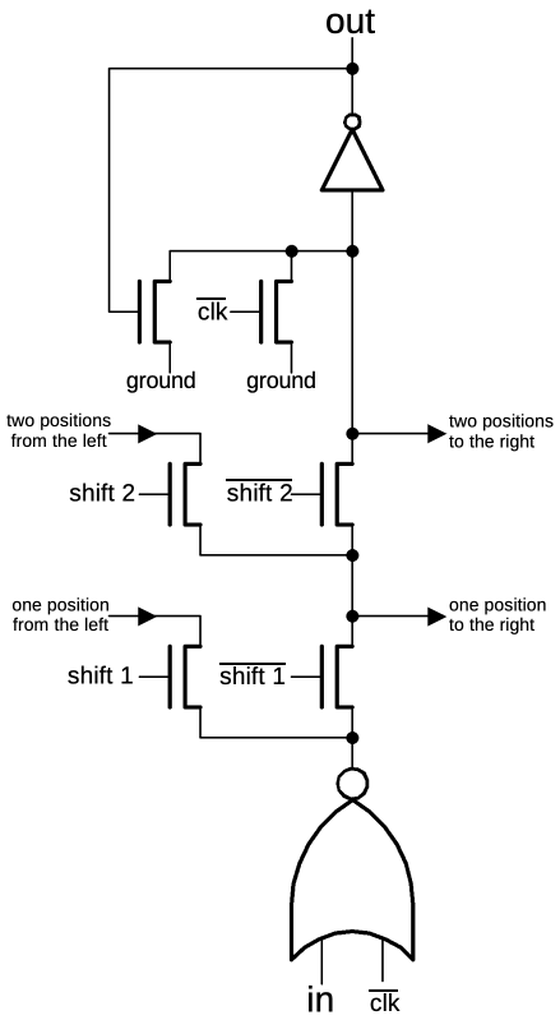

A micro-instruction is encoded into 21 bits as shown below. Every micro-instruction contains a move from a source register to a destination register, each specified with 5 bits. The meaning of the remaining bits is a bit tricky since it depends on the type field, which is two or three bits long. The "short jump" (type 0) is a conditional jump within the current block of 16 micro-instructions. The ALU operation (type 1) sets up the arithmetic-logic unit to perform an operation. Bookkeeping operations (type 4) are anything from flushing the prefetch queue to ending the current instruction. A memory read or write is type 6. A "long jump" (type 5) is a conditional jump to any of 16 fixed microcode locations (specified in an external table). Finally, a "long call" (type 7) is a conditional subroutine call to one of 16 locations (different from the jump targets).

This "vertical" microcode format reduces the storage required for the microcode by encoding control signals into various fields. However, it requires some decoding logic to process the fields and generate the low-level control signals. Surprisingly, there's no specific "microcode decoder" circuit. Instead, the logic is scattered across the chip, looking for various microcode bit patterns to generate control signals where they are needed.

How instructions map onto the ROM

One interesting issue is how the micro-instructions are organized in the ROM, and how the right micro-instructions are executed for a particular machine instruction. The 8086 uses a clever mapping from the machine instruction to a microcode address that allows machine instructions to share microcode.

Different processors use a variety of approaches to microcode organization. One technique is for each micro-instruction to contain a field with the address of the next micro-instruction. This provides complete flexibility for the arrangement of micro-instructions, but requires a field to hold the address, increasing the number of bits in each micro-instruction. A common alternative is to execute micro-instructions sequentially, with a micro-program-counter stepping through each micro-address unless there is an explicit jump to a new address. This approach avoids the cost of an address field in each instruction, but requires a program counter with an incrementer, increasing the hardware complexity.

The 8086 uses a hybrid approach. A 4-bit program counter steps through the bottom 4 bits of the address, so up to 16 micro-instructions can be executed in sequence without a jump. This approach has the advantage of requiring a smaller 4-bit incrementer for the program counter, rather than a 13-bit incrementer. The microcode engine provides a "short jump" operation that makes it easy to jump within the group of 16 instructions using a 4-bit jump target, rather than a full 13-bit address.
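
A minimal Python sketch of this sequencing follows. The function names are mine, and the wraparound from 15 back to 0 is simply what a 4-bit counter does in this model; I'm not claiming the real microcode ever relies on it.

```python
COUNTER_MASK = 0x000F  # bottom 4 bits: the sequence counter
BLOCK_MASK = 0x1FF0    # upper 9 bits: select the block of 16 micro-instructions

def next_sequential(address):
    """Advance to the next micro-instruction using only a 4-bit incrementer."""
    counter = (address + 1) & COUNTER_MASK
    return (address & BLOCK_MASK) | counter

def short_jump(address, target):
    """Jump within the current block: replace only the 4-bit counter."""
    return (address & BLOCK_MASK) | (target & COUNTER_MASK)

print(hex(next_sequential(0x0050)))  # 0x51
print(hex(next_sequential(0x005F)))  # 0x50 (the counter wraps; the block is unchanged)
print(hex(short_jump(0x0053, 10)))   # 0x5a
```

Neither function touches the upper 9 bits, which is why the hardware gets away with a 4-bit incrementer and a 4-bit jump target.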

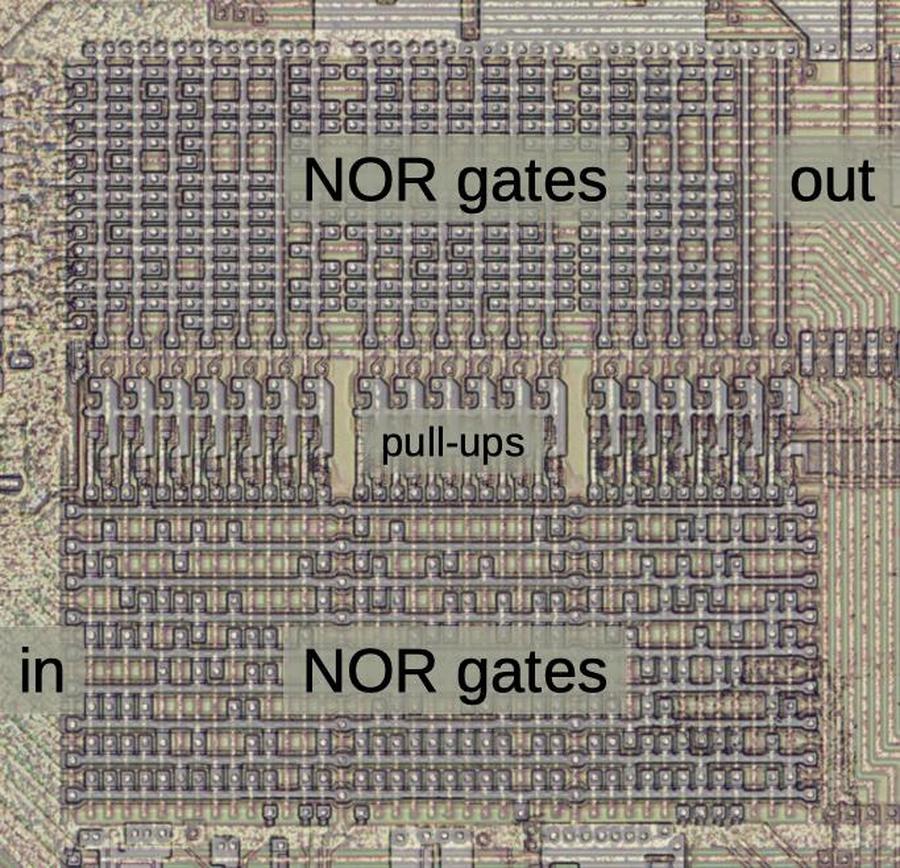

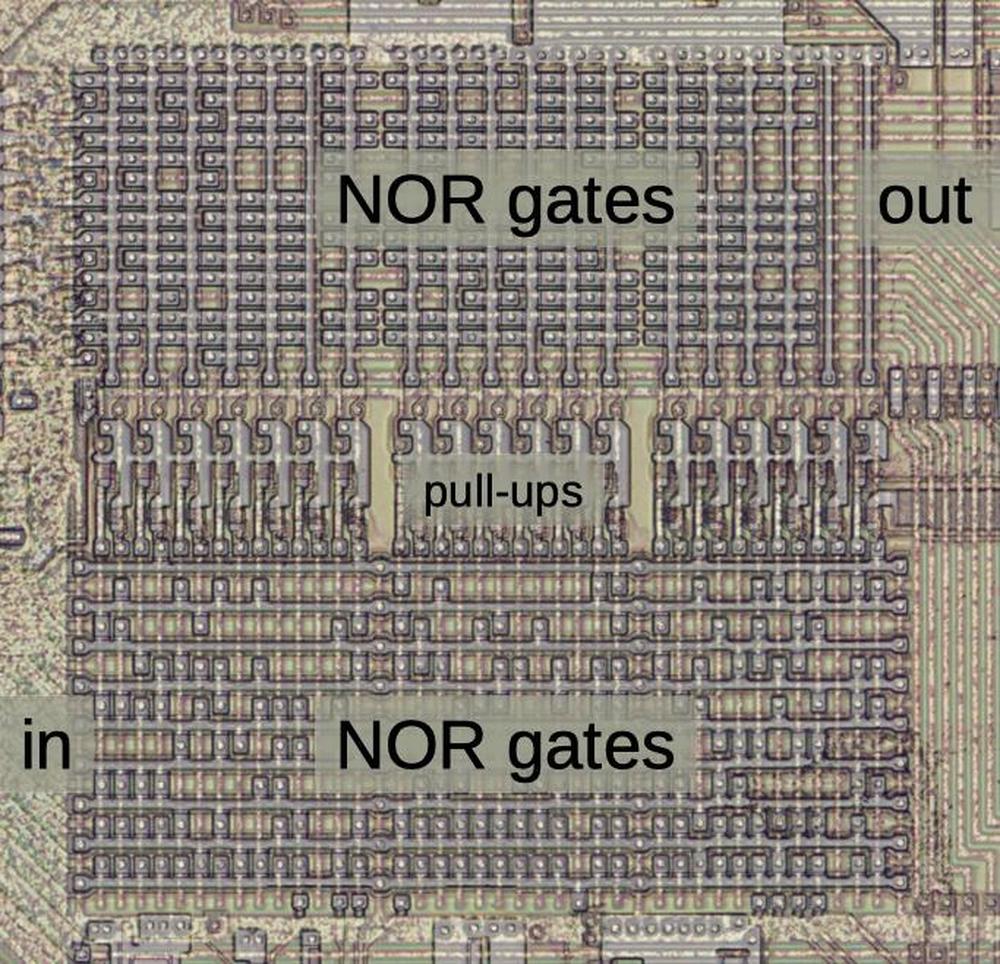

Another important design decision in microcode is how to determine the starting micro-address for each machine instruction. In other words, if you want to do an ADD, where does the microcode for ADD start? One approach is a table of starting addresses: the system looks in the table to find the starting address for ADD, but this requires a large table of 256 entries. A second approach is to use the opcode value as the starting address. That is, an ADD instruction 0x05 would start at micro-address 5. This approach has two problems. First, you can't run the microcode sequentially since consecutive micro-addresses belong to different machine instructions. Second, you can't share microcode since each instruction has a different address in the microcode ROM.

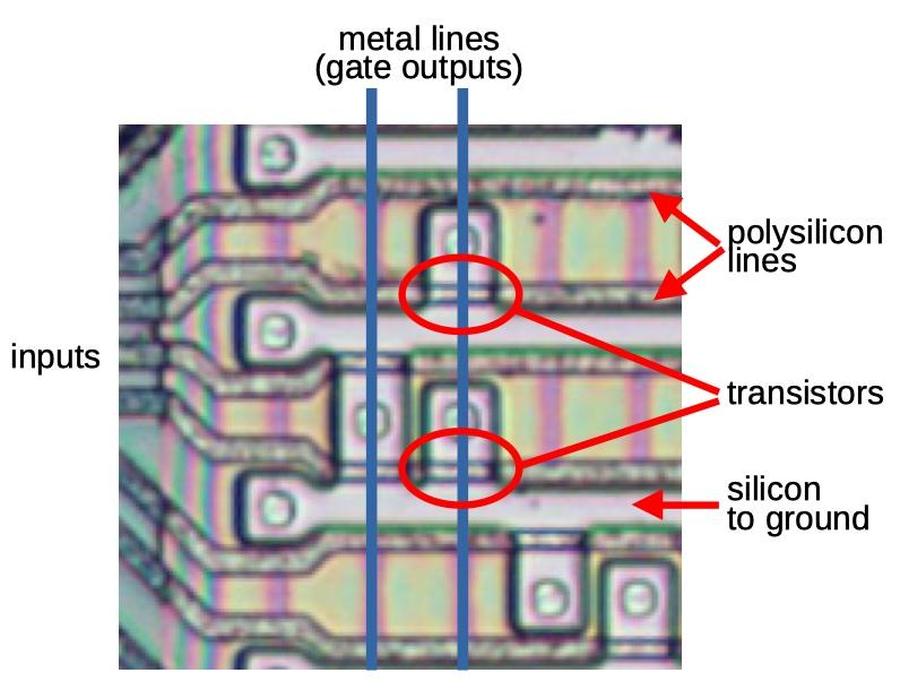

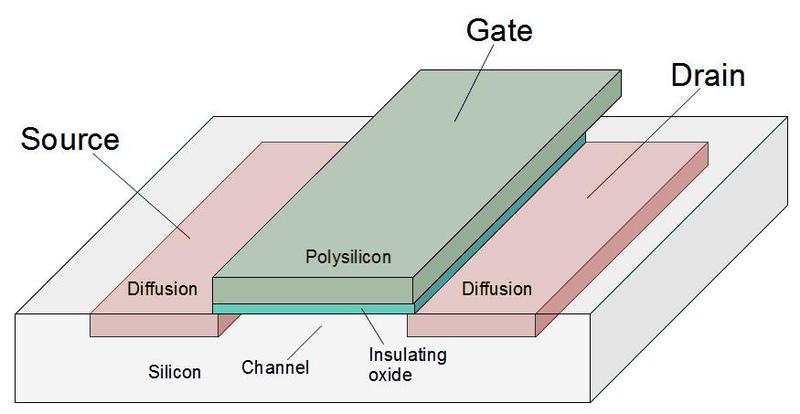

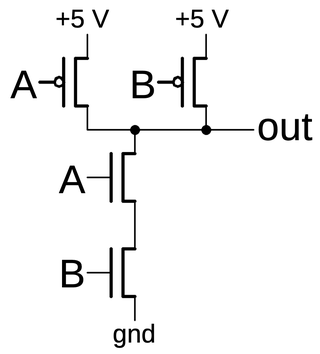

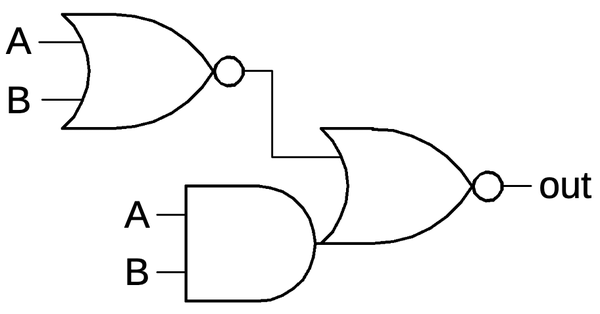

The 8086 solves these problems in two ways. First, the machine instructions are spaced sixteen slots apart in the microcode. In other words, the opcode is multiplied by 16 (has four zeros appended) to form the starting address in the microcode ROM, so there is plenty of space to implement each machine instruction. The second technique is that the ROM's addressing is partially decoded rather than fully decoded, so multiple micro-addresses can correspond to the same physical storage.4
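
Both techniques can be sketched in Python. The names are mine, and the particular don't-care pattern is only an illustration: it ignores opcode bits 5-3, which in the 8086 instruction encoding select among ADD, OR, ADC, SBB, AND, SUB, XOR, and CMP. I'm not claiming this is the exact pattern stored in the ROM.

```python
def start_address(opcode):
    """Opcode multiplied by 16: four zero bits appended for the sequence counter."""
    return opcode << 4

def row_matches(address, row_value, care_mask):
    """Partial decoding: compare only the address bits selected by care_mask."""
    return (address & care_mask) == (row_value & care_mask)

# Hypothetical ROM row: care about the X bit, opcode bits 7-6 and 2-0, and the counter.
CARE_MASK = (1 << 12) | (0b11000111 << 4) | 0xF
ROW_VALUE = start_address(0x00)  # stored once, at the ADD (0x00) position

add_hit = row_matches(start_address(0x00), ROW_VALUE, CARE_MASK)  # ADD
sub_hit = row_matches(start_address(0x28), ROW_VALUE, CARE_MASK)  # SUB
inc_hit = row_matches(start_address(0x40), ROW_VALUE, CARE_MASK)  # INC AX

print(hex(start_address(0x05)))  # 0x50
print(add_hit, sub_hit, inc_hit)
```

In this model, ADD and SUB start at different micro-addresses but select the same stored row, so one copy of the microcode serves both, while an unrelated opcode such as 0x40 does not match.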